Managing OCP Infrastructures Using GitOps (Part 3)

In the first part of this series, I showed you how to install the Assisted Service/CIM (Central Infrastructure Management) and how a cluster can be deployed declaratively using approximately 9 YAML files.

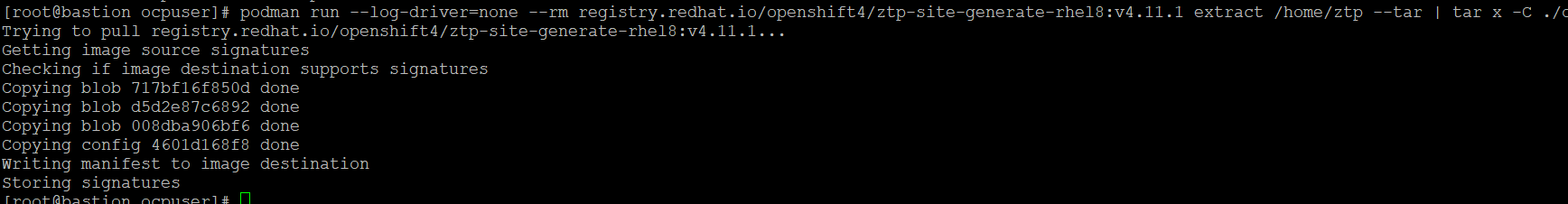

In the second part of this series, we saw how those YAML files could be condensed into a single SiteConfig file. The directory structure of the ztp-site-generator container was used to create the GitHub repository https://github.com/kcalliga/ztp-example.

Note: A new branch of this repo, based on v4.11.3-9 of the ztp-site-generator image, is now available. The branch is called v4.11.3-9. For information on how to get the files from this new container, see the section called "Steps to Parse Site Config" in my previous article.

In this article, I will show how the SiteConfig can be parsed as part of a ZTP pipeline using OpenShift GitOps. The same mechanism can also modify objects (such as clusters) automatically when updates are pushed to the source GitHub repo.

Some assumptions being made for this article are as follows:

- ACM is installed on a hub cluster

- Assisted-Service/CIM is configured on this hub cluster

- A libvirt-based VM (called example-sno) will be deployed as a result of this exercise

Inside the argocd directory of the GitHub repo, you will see a README file, a deployment directory, and an example directory. Most of this article follows that README.

The deployment directory contains YAML files that will be applied to enable OpenShift GitOps/ArgoCD to manage the entire lifecycle of clusters, including the applications and policies (using PolicyGenTemplates) that get pushed to the resulting cluster.

For an idea of how PolicyGenTemplates work, see this article.

This article will cover the following topics:

A. Installing OpenShift GitOps Operator on Hub Cluster

B. Installing Topology Aware Lifecycle Manager on Hub Cluster

C. Configuring ArgoCD

D. Deploying ZTP-SPOKE Cluster

E. Troubleshooting

Installing OpenShift GitOps Operator on Hub Cluster

1. In the OpenShift Web Console of the hub, go to Operators --> OperatorHub

2. Search for GitOps and click on "Red Hat OpenShift GitOps", which is provided by Red Hat

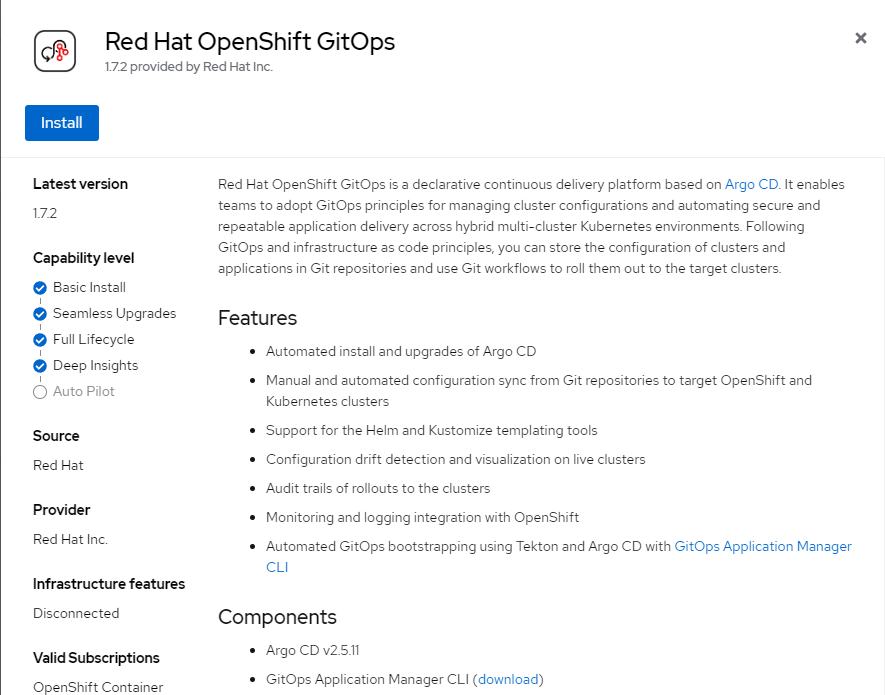

3. Click on "Install"

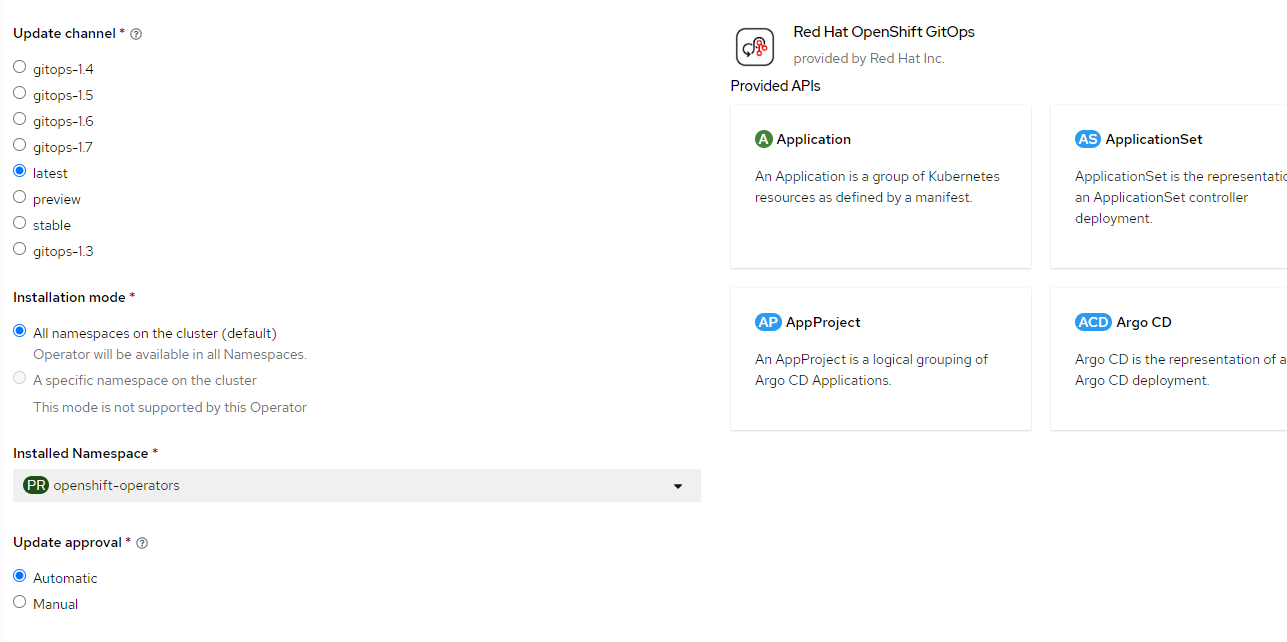

4. On the next page, choose the latest version (1.7.2 as of this writing) and click on Install.

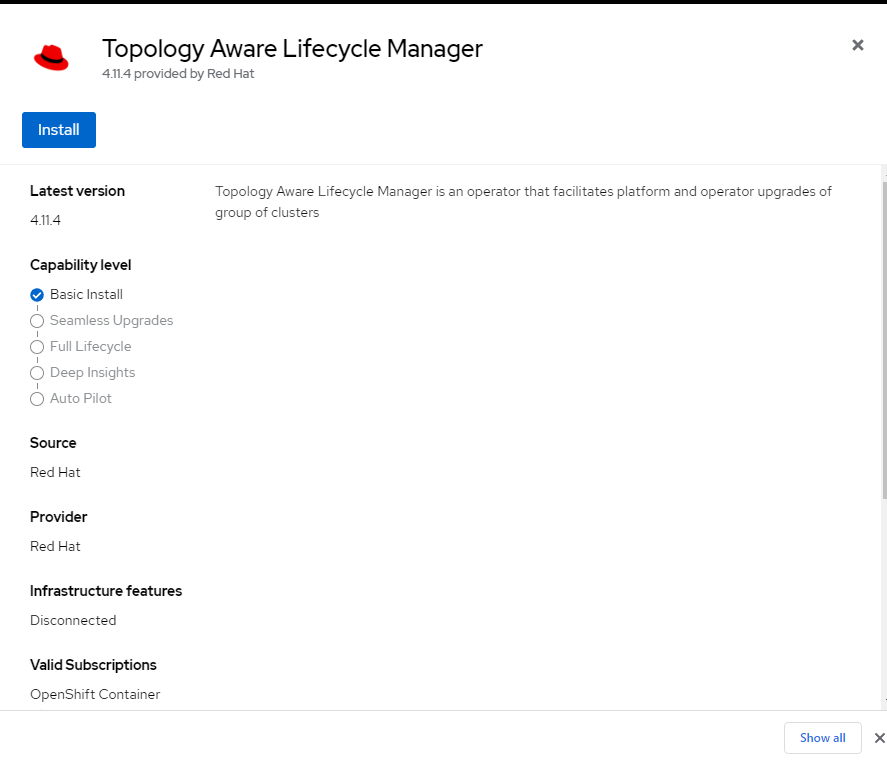

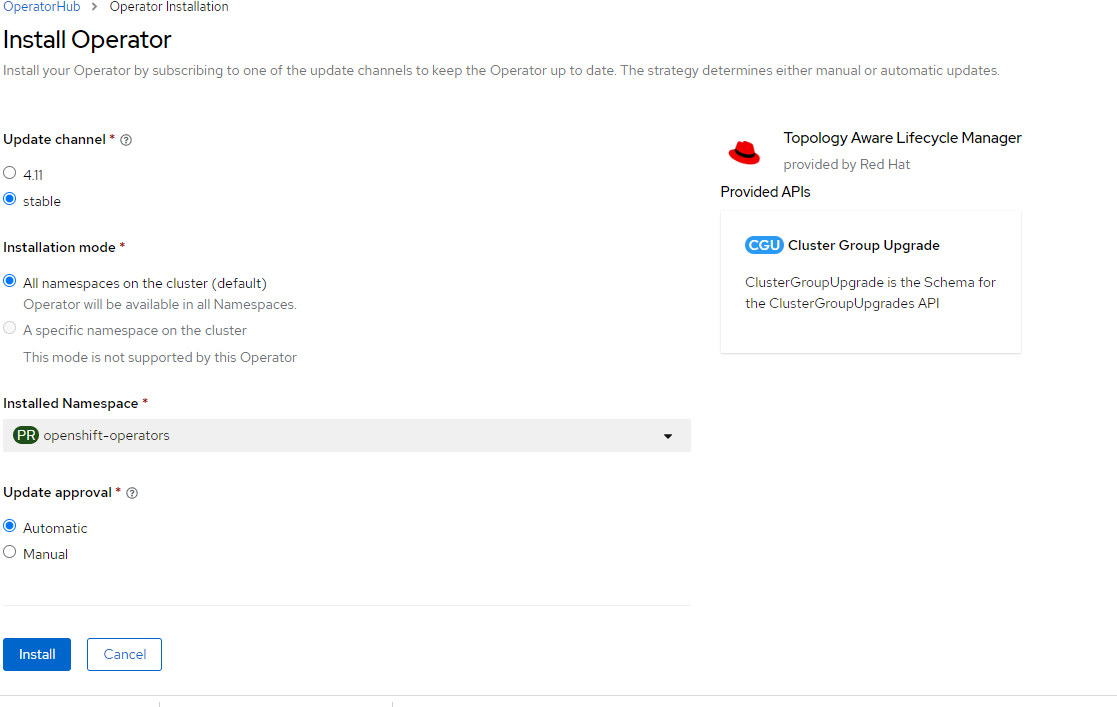

Installing Topology Aware Lifecycle Manager on Hub Cluster

1. In the OpenShift Web Console of the hub, go to Operators --> OperatorHub

2. Search for Topology Aware Lifecycle Manager and click on "Topology Aware Lifecycle Manager", which is provided by Red Hat

3. Click on "Install"

4. Accept the defaults and click "Install"

Configuring ArgoCD

If you have not already done so, I recommend cloning my GitHub repo and pushing it to your own repo, since you will be editing files in it. Here is the repo again for reference: https://github.com/kcalliga/ztp-example

These instructions come from the README at https://github.com/kcalliga/ztp-example/tree/v4.11.3-9/argocd

1. On your hub cluster, patch the openshift-gitops ArgoCD instance. Run this from the top-level directory of your clone of the GitHub repo (the directory that contains argocd/).

```shell
oc patch argocd openshift-gitops -n openshift-gitops --type=merge --patch-file argocd/deployment/argocd-openshift-gitops-patch.json
```

In the openshift-gitops project/namespace, the openshift-gitops-application-controller-0 pod will restart and a new openshift-gitops-repo-server-<random-guid> pod will show up. Once these show as Running, proceed to the next step.

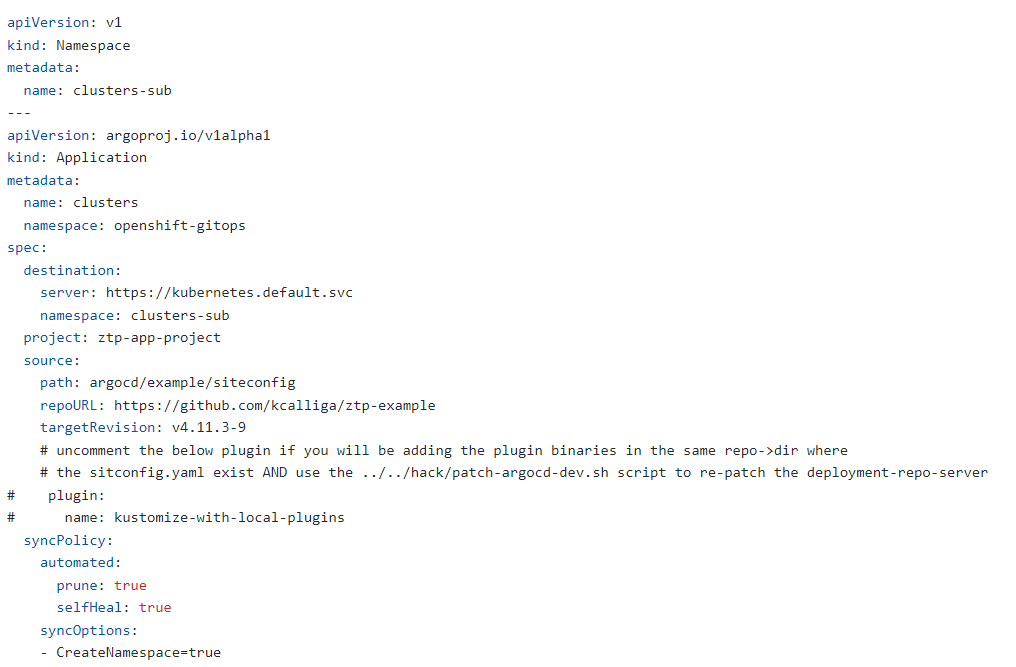
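If you'd rather script this wait than watch the console, here is a minimal sketch. It assumes the operator's default object names for the openshift-gitops instance: a StatefulSet named openshift-gitops-application-controller and a Deployment named openshift-gitops-repo-server, as the pod names above suggest. wait_for_gitops is a hypothetical helper name.

```shell
# Hypothetical helper: block until the Argo CD components restarted by the
# patch have finished rolling out. Object names are assumed from the pod
# names (openshift-gitops-application-controller-0, openshift-gitops-repo-server-<id>).
wait_for_gitops() {
  local ns="${1:-openshift-gitops}"
  local timeout="${2:-300s}"
  oc rollout status statefulset/openshift-gitops-application-controller \
    -n "$ns" --timeout="$timeout" || return 1
  oc rollout status deployment/openshift-gitops-repo-server \
    -n "$ns" --timeout="$timeout" || return 1
}
```

Running wait_for_gitops right after the oc patch returns once both rollouts report success, or fails after the timeout.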

2. Modify deployment/clusters-app.yaml and deployment/policies-app.yaml to point to your repository.

clusters-app.yaml defines the GitOps application that handles the syncing of SiteConfigs.

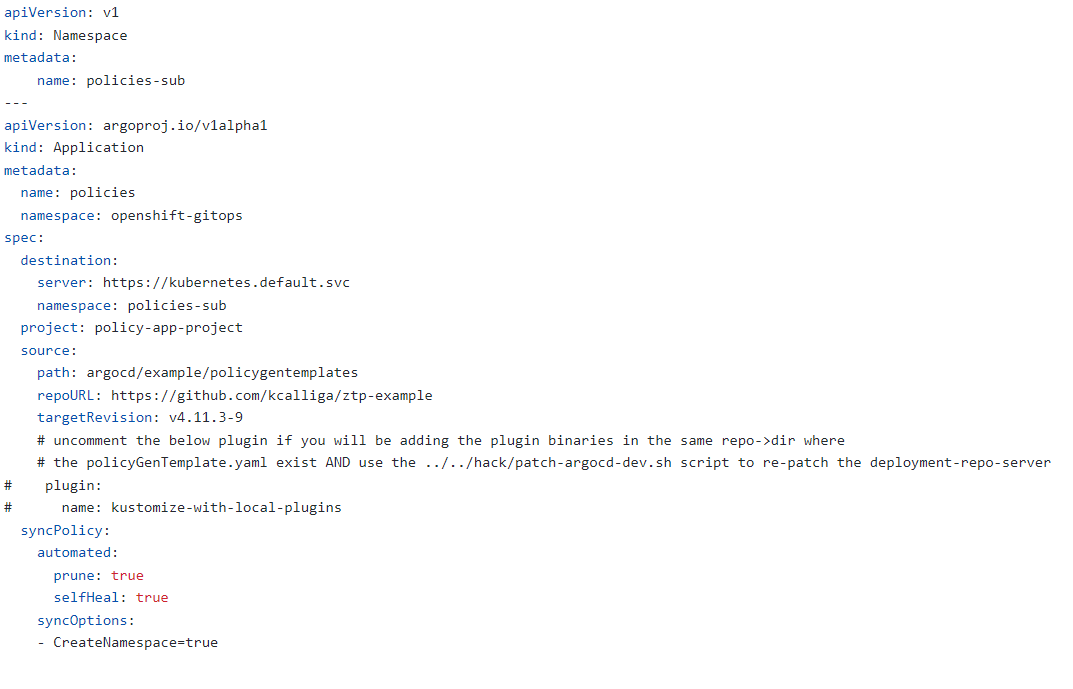

policies-app.yaml defines the GitOps application that links clusters to the policies that will be applied to them using PolicyGenTemplates.

In clusters-app.yaml, change the source path, repoURL, and targetRevision (branch) to match your own.

In policies-app.yaml, change the source path, repoURL, and targetRevision (branch) to match your own.

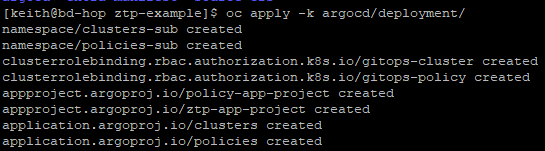
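If you'd rather not edit the two files by hand, the three fields can be rewritten with sed. A minimal sketch, assuming each of repoURL:, targetRevision:, and path: appears once per file on its own line (as in a single Application's spec.source) and GNU sed (macOS sed needs -i ''). set_app_source and the example-user fork URL below are hypothetical.

```shell
# Hypothetical helper: point an Argo CD Application manifest at your fork.
# Assumes each key appears once, on its own line, as in a typical
# spec.source block (repoURL:, targetRevision:, path:). Indentation is kept.
set_app_source() {
  local file="$1" repo="$2" branch="$3" path="$4"
  sed -i \
    -e "s|^\([[:space:]]*\)repoURL:.*|\1repoURL: ${repo}|" \
    -e "s|^\([[:space:]]*\)targetRevision:.*|\1targetRevision: ${branch}|" \
    -e "s|^\([[:space:]]*\)path:.*|\1path: ${path}|" \
    "$file"
}
```

For example, with a hypothetical fork: set_app_source argocd/deployment/clusters-app.yaml https://github.com/example-user/ztp-example.git v4.11.3-9 argocd/example/siteconfig, and the same call on policies-app.yaml with argocd/example/policygentemplates as the path. Review the diff before applying, since the regexes will also rewrite any other line that starts with one of those keys.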

3. After making these edits, apply the YAMLs in the deployment directory using its kustomization.yaml:

```shell
oc apply -k argocd/deployment/
```

Ensure that all of the objects (as shown above) are created.

3. Find the route for OpenShift GitOps:

```shell
oc get routes -n openshift-gitops
```

The route should start with openshift-gitops-server-openshift-gitops. Open it in your web browser. I chose to log in via OpenShift with the kubeadmin user.
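If you only want the URL, the route can be queried directly. A minimal sketch, assuming the route keeps the default name openshift-gitops-server (which matches the hostname prefix mentioned above); gitops_url is a hypothetical helper name.

```shell
# Print the Argo CD console URL. Assumes the route keeps the operator's
# default name, openshift-gitops-server, in the openshift-gitops namespace.
gitops_url() {
  local host
  host=$(oc get route openshift-gitops-server -n openshift-gitops \
    -o jsonpath='{.spec.host}') || return 1
  echo "https://${host}"
}
```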

Note: Creating the applications via the GUI may fail when you log in as an admin user other than kubeadmin. This can be disregarded here, since these steps create them with oc apply on the command line.

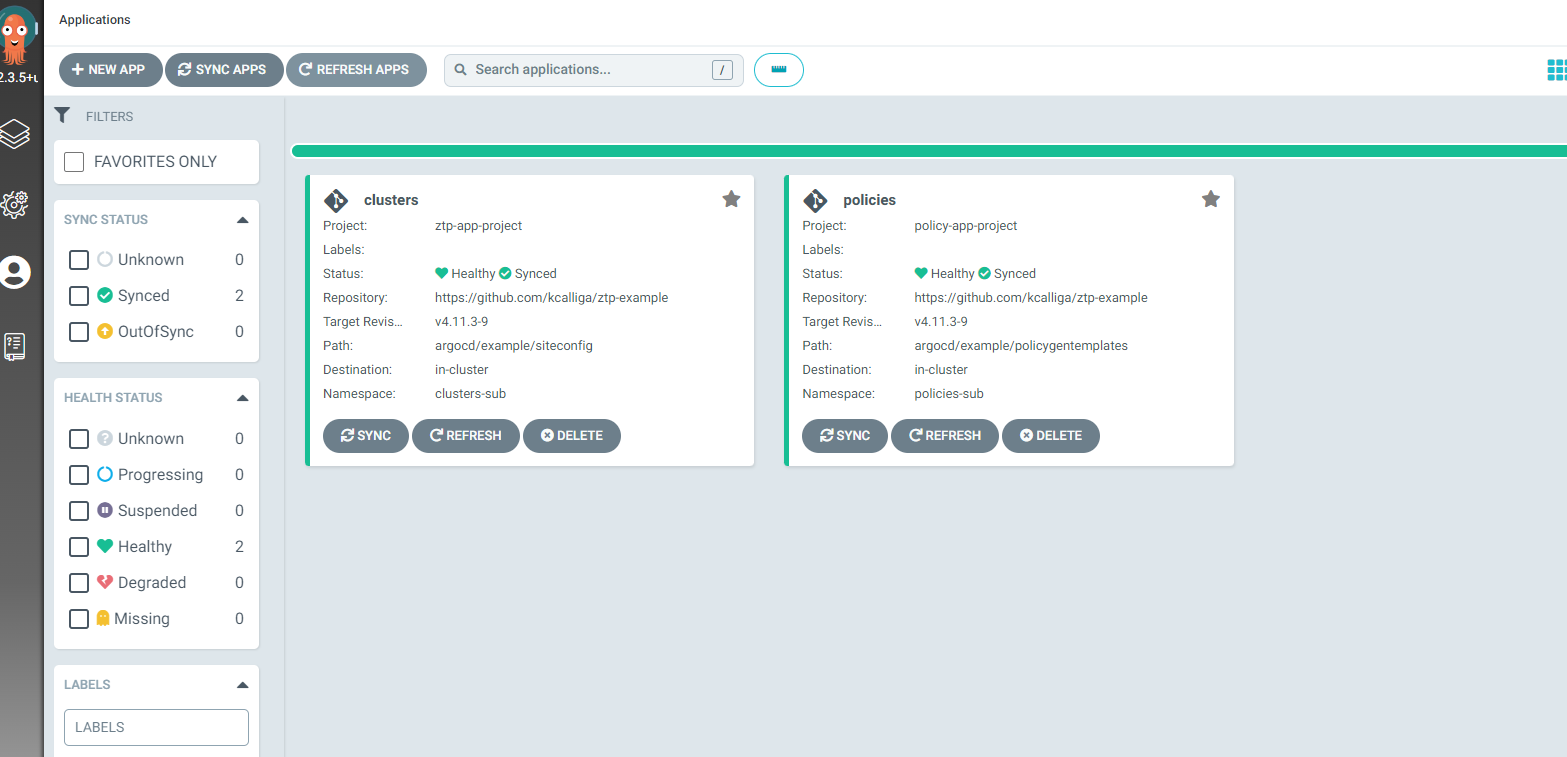

4. When logging into the GitOps GUI, you should see both apps. They should be in a Healthy/Synced state.
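The same check can be done from the command line. A minimal sketch, assuming the Applications created by clusters-app.yaml and policies-app.yaml are named clusters and policies (confirm with oc get applications -n openshift-gitops); app_ok is a hypothetical helper name.

```shell
# Hypothetical helper: print "<sync> <health>" for an Argo CD Application
# and succeed only when it is Synced and Healthy.
app_ok() {
  local app="$1" ns="${2:-openshift-gitops}" state
  state=$(oc get applications.argoproj.io "$app" -n "$ns" \
    -o jsonpath='{.status.sync.status} {.status.health.status}') || return 1
  echo "$app: $state"
  [ "$state" = "Synced Healthy" ]
}
```

Usage: app_ok clusters && app_ok policies.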

5. For this cluster build, I will reuse the example-sno SiteConfig from the example directory and modify it, rather than creating a new one. This keeps things simple for now.

6. Create the project/namespace for example-sno. It may already exist from the example SiteConfigs that ArgoCD synced, unless you have already cleaned it up.

export CLUSTERNS=example-sno

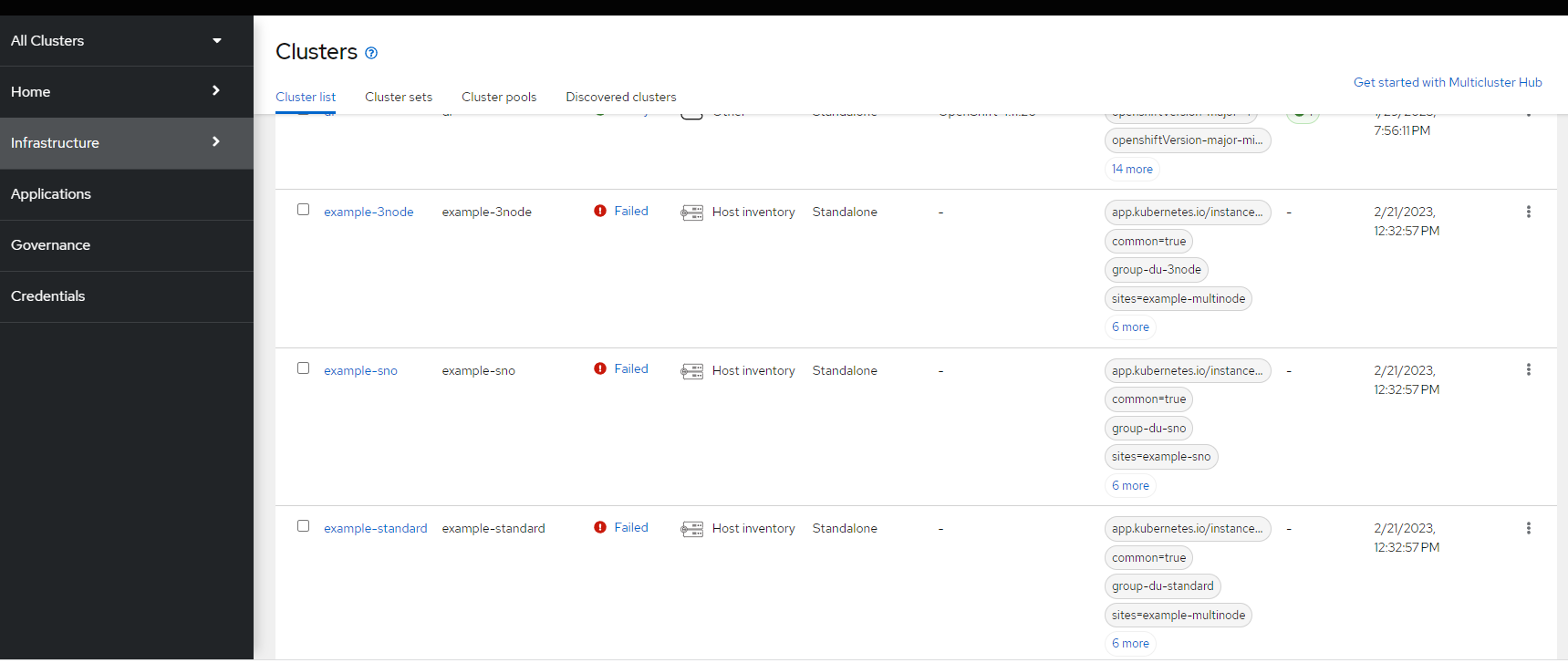

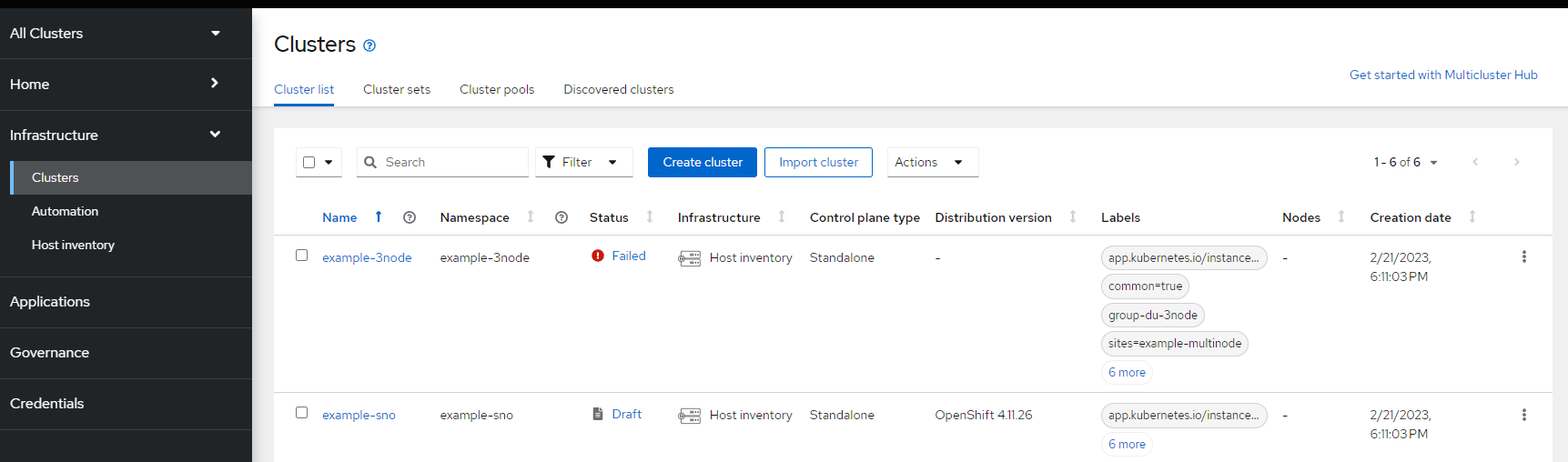

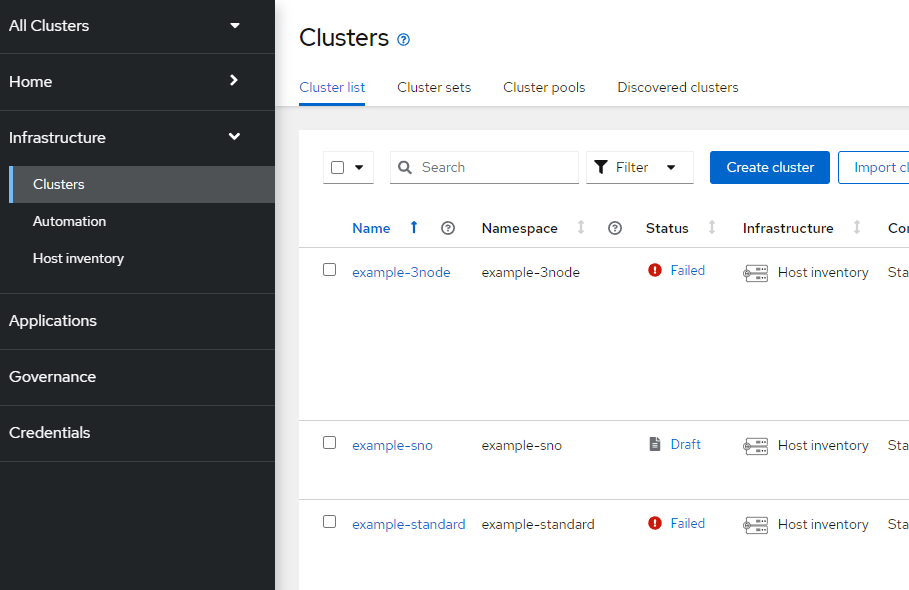

oc create namespace $CLUSTERNS7. Since some of these objects already exist based on the defaults in the GitRepo, you may see some cluster deployments in failed state in the ACM GUI as shown below. Don't worry, we will make changes to the GitHub repo to get rid of the example-3node and example-standard. The example-sno will be modified shortly.

8. Create a pull secret called assisted-deployment-pull-secret in the example-sno project/namespace. You will need to save your pull secret as pull-secret.json in the current working directory for this to work.
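Before creating the secret, it can be worth confirming that pull-secret.json is valid JSON, since a truncated copy/paste would otherwise only surface later in the deployment. A minimal sketch, assuming python3 is available where you run oc; check_pull_secret is a hypothetical helper name.

```shell
# Hypothetical pre-check: make sure pull-secret.json is valid JSON with an
# "auths" key before it is base64-encoded into the Secret.
# Assumes python3 is available on the machine running oc.
check_pull_secret() {
  local file="${1:-pull-secret.json}"
  python3 - "$file" <<'PY'
import json, sys
with open(sys.argv[1]) as fh:
    data = json.load(fh)  # raises (non-zero exit) on malformed JSON
if "auths" not in data:
    sys.exit("no 'auths' key in " + sys.argv[1])
PY
}
```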

```shell
oc apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: assisted-deployment-pull-secret
  namespace: $CLUSTERNS
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: $(base64 -w0 <pull-secret.json)
EOF
```

9. Create the bare-metal secret. My environment is libvirt/Redfish, so any username/password combination will work. I used test/test and encoded each value with base64.
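A note on encoding these values: echo test | base64 produces dGVzdAo=, which decodes to "test" plus a trailing newline, while dGVzdA== is "test" alone. The Redfish emulator in my lab accepts anything, but a real BMC would most likely reject the credential with the extra newline. A minimal sketch of a helper (the name b64 is hypothetical) that encodes without the newline:

```shell
# Encode a BMC credential for a Secret's data: section without a trailing
# newline. printf '%s' emits the string as-is, unlike plain echo.
b64() {
  printf '%s' "$1" | base64
}
```

Usage: b64 test prints dGVzdA==.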

```shell
oc apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: bmh-secret
  namespace: $CLUSTERNS
type: Opaque
data:
  username: dGVzdA==
  password: dGVzdA==
EOF
```

10. Here is a sample SiteConfig YAML that will be used to configure a libvirt-based machine in my environment as an SNO cluster.

This is based on the example at https://github.com/kcalliga/ztp-example/blob/v4.11.3-9/argocd/example/siteconfig/example-sno.yaml

apiVersion: ran.openshift.io/v1

kind: SiteConfig

metadata:

name: "example-sno"

namespace: "example-sno"

spec:

baseDomain: "calligan.name"

pullSecretRef:

name: "assisted-deployment-pull-secret"

clusterImageSetNameRef: "img4.11.26-x86-64-appsub"

sshPublicKey: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCuZJde1Y4zxIjaq6CXxM+zFTWNF2z3LMnxIUAGtU7InyMPEmdstTNBXQ5e27MbZjiLkL7pxYl/LFMOs7UARJ5/GWG4ijSD35wEwohsDDGoTeSTf/j5Dsaz3Wl5NDEH4jRUvxU5TOtTgNBx/aBIMPw/GWKAKEwxKOMGU0mLA4v9e06oX1PbhX9Y/WQ/6+fNmX/wSX0UlIQ1R3DakW/ocH1HI3x1rWWdBzDa/8DPYMSMy5hxr4XpKYqzn9+5uPfozfejcfEAdqV5yCQOnP1XO55PWt4r7uIkqc3a9wCskwbW81nGsYKb6n8c/MaSu6S9UUxM+nx+/GRB/BzpxFpYzdWsx0/J2LWqGJ16lvjLdWwOliBvfKSxxbwP8tGVK5nWSAHAQGLXnvK+/uP9AjhKQeCahn859mq4bLfoWl6Q/0pkUA5XRJG/M/59djrUXoqBDMFguFE80JQqcrgDvGbpkZnAHrm+d4Am6ZkPri/R0V/alsdScWyeG2GBv52lENhz220= ocpuser@bastion"

clusters:

- clusterName: "example-sno"

networkType: "OVNKubernetes"

clusterLabels:

# These example cluster labels correspond to the bindingRules in the PolicyGenTemplate examples in ../policygentemplates:

# ../policygentemplates/common-ranGen.yaml will apply to all clusters with 'common: true'

#common: true

# ../policygentemplates/group-du-sno-ranGen.yaml will apply to all clusters with 'group-du-sno: ""'

#group-du-sno: ""

# ../policygentemplates/example-sno-site.yaml will apply to all clusters with 'sites: "example-sno"'

# Normally this should match or contain the cluster name so it only applies to a single cluster

#sites : "example-sno"

clusterNetwork:

- cidr: 10.128.0.0/14

hostPrefix: 23

machineNetwork:

- cidr: 192.168.150.0/24

serviceNetwork:

- 172.30.0.0/16

#crTemplates:

# KlusterletAddonConfig: "KlusterletAddonConfigOverride.yaml"

nodes:

- hostName: "example-sno"

role: "master"

bmcAddress: redfish-virtualmedia+http://198.102.28.26:8000/redfish/v1/Systems/88158323-03ff-44c6-a28a-4d9ab894fc6f

bmcCredentialsName:

name: "bmh-secret"

bootMACAddress: "52:54:00:9a:d2:5b"

bootMode: "UEFI"

nodeNetwork:

interfaces:

- name: enp1s0

macAddress: "52:54:00:9a:d2:5b"

config:

interfaces:

- name: enp1s0

type: ethernet

state: up

macAddress: "52:54:00:9a:d2:5b"

dhcp: true

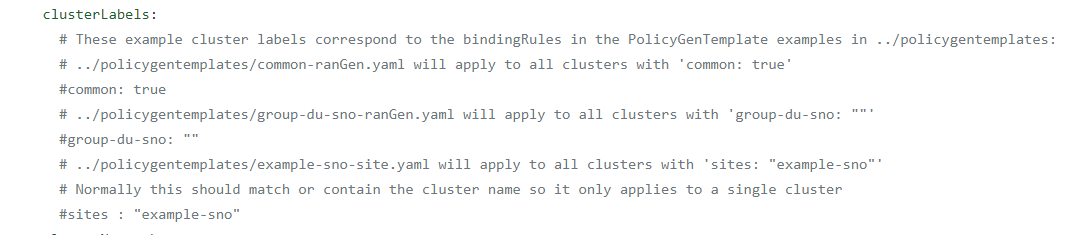

enabled: trueThe clusterLabels section is commented out (above) because the default policies that get applied based on these labels is too much for these purposes. The default settings assume a RAN-type setup.

If I were to keep these labels, the following would happen:

- common: true - All policies in argocd/example/policygentemplates/common-ranGen.yaml would get applied.

- group-du-sno: "" - All policies in argocd/example/policygentemplates/group-du-sno-ranGen.yaml would get applied.

- sites: example-sno - All policies in argocd/example/policygentemplates/example-sno-site.yaml would get applied.

I also edited clusterImageSetNameRef to be img4.11.26-x86-64-appsub. This is version 4.11.26 of OCP (current at the time of this writing). To get the list of available image sets, run the following:

```shell
oc get clusterimagesets
```

11. After editing the SiteConfig as appropriate for your environment, let's look at what is happening.
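ArgoCD only sees what is in Git, so the edited SiteConfig needs to be committed and pushed to the branch set as targetRevision in clusters-app.yaml before anything changes on the hub. A minimal sketch, assuming your clone's origin remote is your own copy of the repo and the file lives at argocd/example/siteconfig/example-sno.yaml as in the example; push_siteconfig is a hypothetical helper name.

```shell
# Hypothetical helper: commit an edited SiteConfig and push the current
# branch to origin (it should be the branch the clusters app tracks).
push_siteconfig() {
  local file="$1" msg="${2:-Update SiteConfig}"
  git add "$file" || return 1
  git commit -m "$msg" || return 1
  git push origin HEAD
}
```

Usage: push_siteconfig argocd/example/siteconfig/example-sno.yaml "example-sno: match lab environment".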

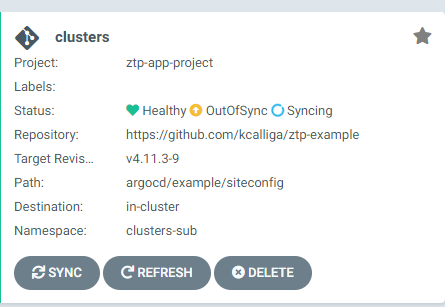

Log back into OpenShift GitOps and hit "Refresh" under the ztp-app-project.

It will show out of sync for a period of time and then it should sync back up.

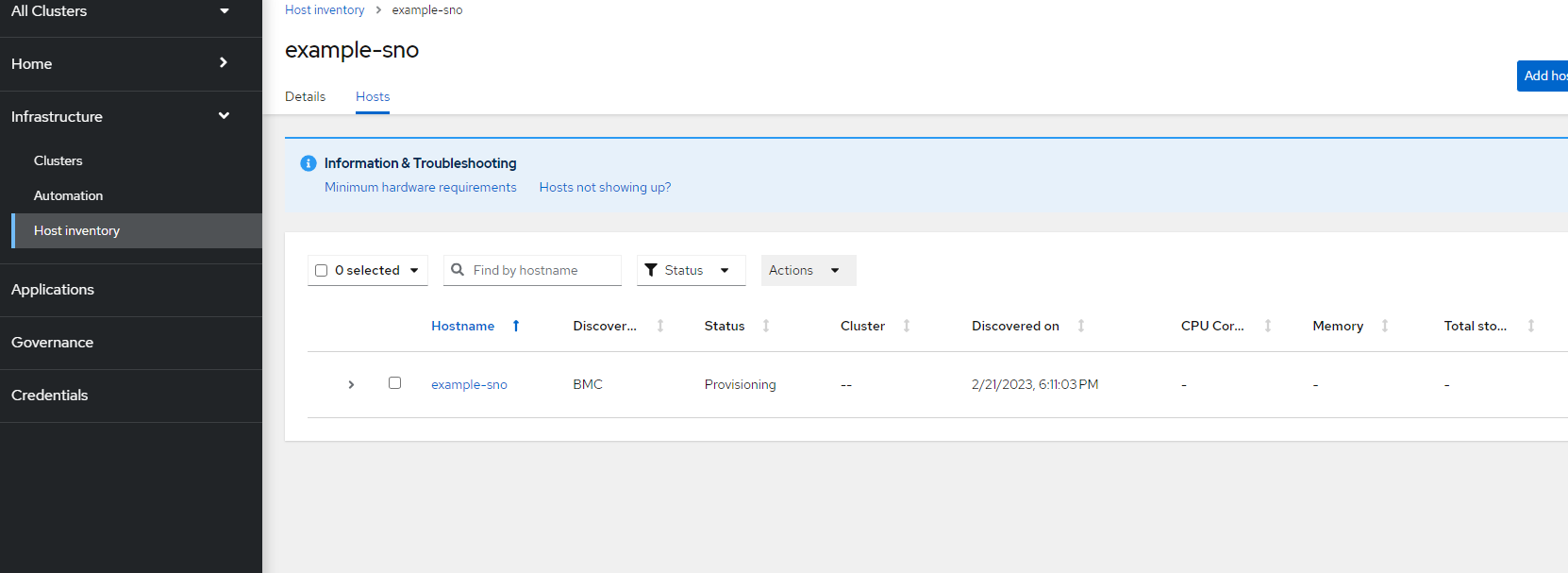
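The "Refresh" button can also be triggered from the command line by annotating the ArgoCD Application with argocd.argoproj.io/refresh, which ArgoCD removes once the refresh has been processed. A sketch, assuming the Application defined in clusters-app.yaml is named clusters; refresh_app is a hypothetical helper name.

```shell
# Command-line equivalent of "Refresh": ask ArgoCD to re-read Git for an
# Application. Use mode "hard" to also bypass the manifest cache.
refresh_app() {
  local app="${1:-clusters}" mode="${2:-normal}"
  oc annotate applications.argoproj.io "$app" -n openshift-gitops \
    "argocd.argoproj.io/refresh=${mode}" --overwrite
}
```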

12. In ACM, the host should show as provisioning, and the cluster will be in a draft state waiting for this host to become ready.
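The same progress can be followed from the hub's command line. A minimal sketch, assuming the BareMetalHost and AgentClusterInstall generated from the SiteConfig live in the example-sno namespace; spoke_status is a hypothetical helper name.

```shell
# Hypothetical helper: show what the hub knows about the spoke while the
# host provisions. Namespace defaults to example-sno.
spoke_status() {
  local ns="${1:-example-sno}"
  # Provisioning state of each BareMetalHost (e.g. registering, provisioning).
  oc get baremetalhosts -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.provisioning.state}{"\n"}{end}' || return 1
  # Message of the Completed condition on each AgentClusterInstall.
  oc get agentclusterinstalls -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="Completed")].message}{"\n"}{end}'
}
```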

Troubleshooting

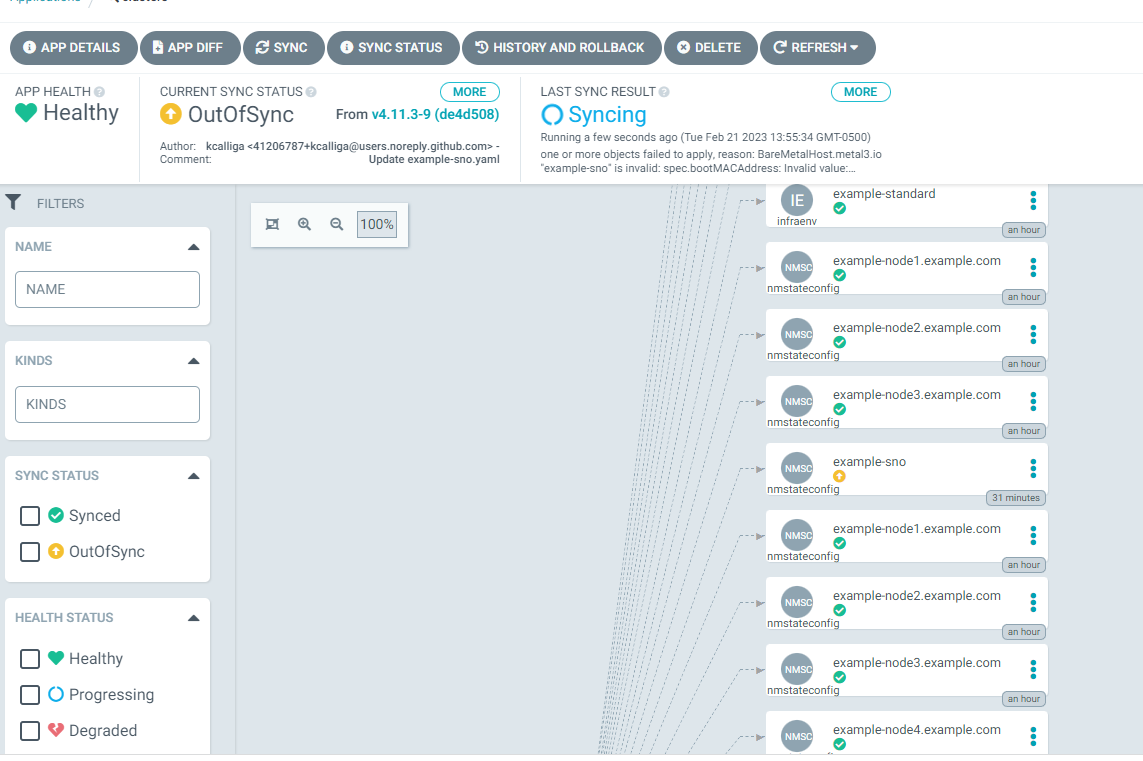

In the case of a sync issue:

Click on the ztp-app-project box.

Within this box, on the right-side, you will see that example-sno nmstateconfig is in an out-of-sync state.

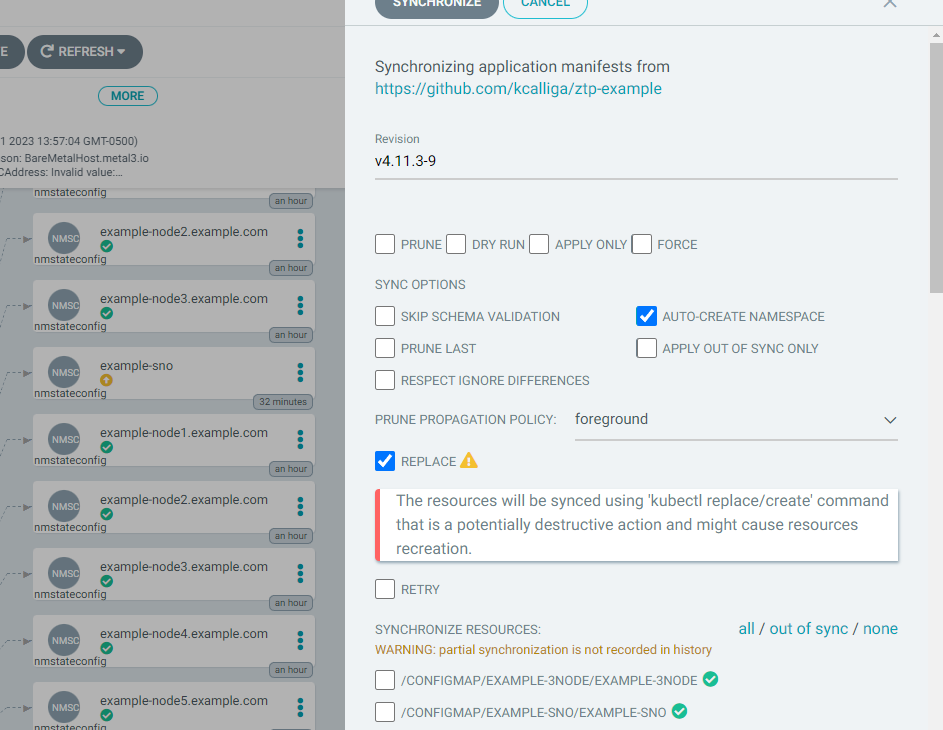

Let's first try to recreate the object. Click on the three dots.

On the resulting screen, choose Replace.

Click Synchronize.

You may get the following warning. Just click "OK".

There may be some other yellow warnings. Do the same operation on the rest of these objects if that is the case.

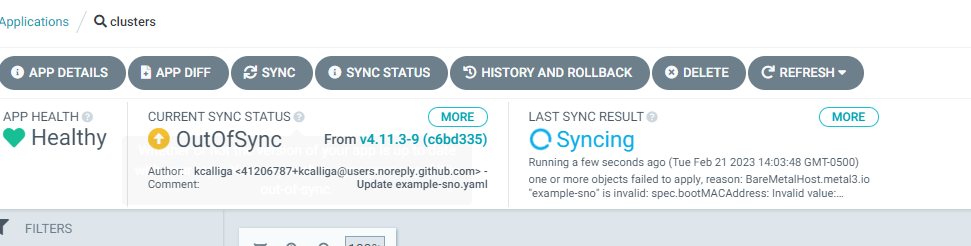

In some cases, more detailed warning messages can be seen by clicking "More" under either "Current Sync Status" or "Last Sync Results".
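The same details are available from the command line. A minimal sketch, assuming the Application is named clusters; out_of_sync is a hypothetical helper that lists the resources ArgoCD reports as OutOfSync (for example the example-sno NMStateConfig) and then prints the message from the last sync operation.

```shell
# List resources an ArgoCD Application reports as OutOfSync, then the
# message from its last sync operation.
out_of_sync() {
  local app="${1:-clusters}"
  oc get applications.argoproj.io "$app" -n openshift-gitops \
    -o jsonpath='{range .status.resources[?(@.status=="OutOfSync")]}{.kind}/{.namespace}/{.name}{"\n"}{end}' || return 1
  oc get applications.argoproj.io "$app" -n openshift-gitops \
    -o jsonpath='{.status.operationState.message}{"\n"}'
}
```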

Some other places to look for errors are in ACM

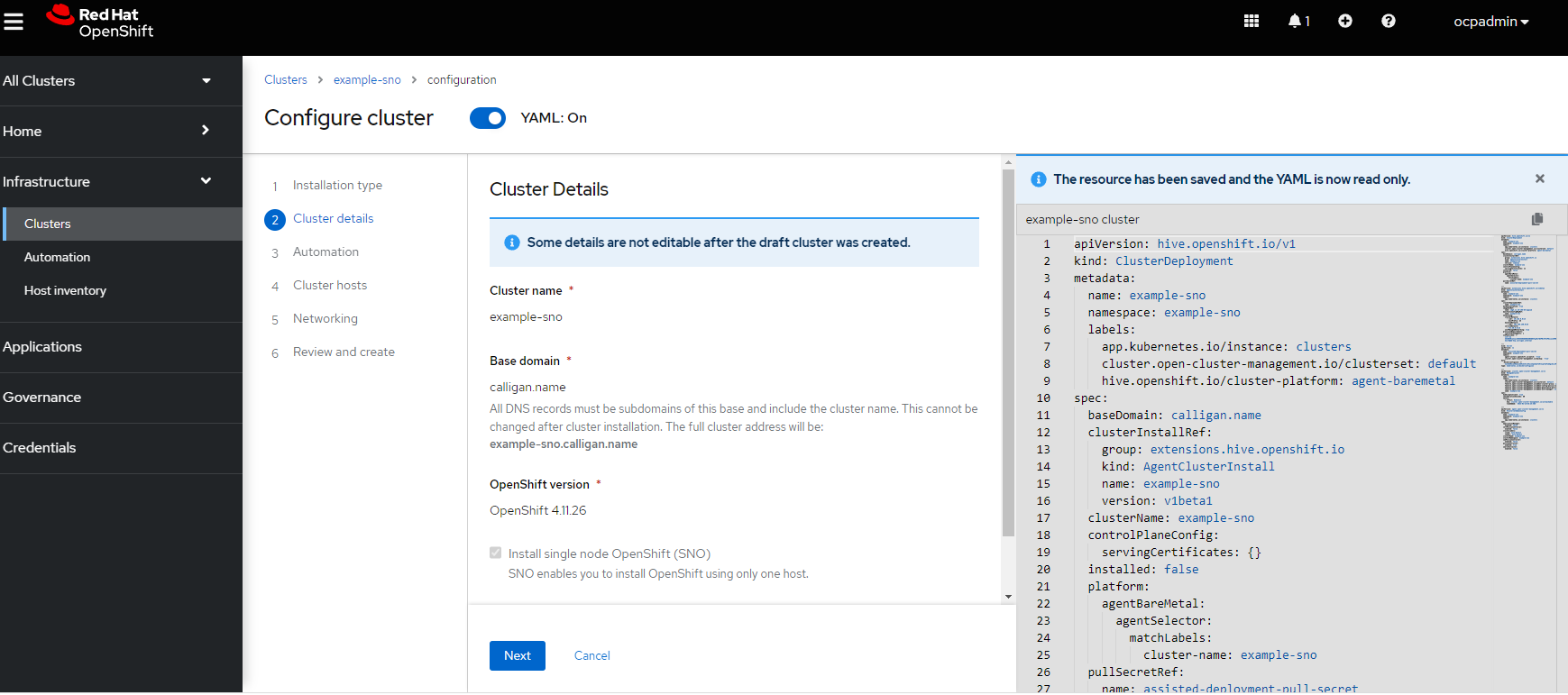

Check Infrastructure --> Clusters – example-sno. This shows draft which is good at this point.

After clicking on example-sno, look at details on resulting screen. Here you can see some of the settings that are getting applied to the cluster

I was originally going to conclude the series with this article, but one more article is coming that will apply a few policies and talk about the Topology Aware Lifecycle Manager Operator.