Enabling an Nvidia GPU in Red Hat OpenShift AI

If you run into issues following these steps and the Nvidia driver pod shows "Not Ready", make sure that Secure Boot is disabled in your system's BIOS.

Disabling it allowed the driver pod to finish installing and the GPU to be detected in RHOAI.

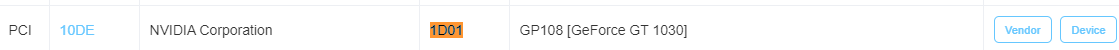

This is a quick post showing how to use an Nvidia GPU in RHOAI. My test cluster runs OpenShift 4.15, and the OpenShift AI operator is already installed. My environment is a Single-Node OpenShift (SNO) cluster with an Nvidia GeForce GT 1030. This is an ordinary consumer video card, but it is sufficient for this tutorial.

The first step will be to enable the Node Feature Discovery Operator.

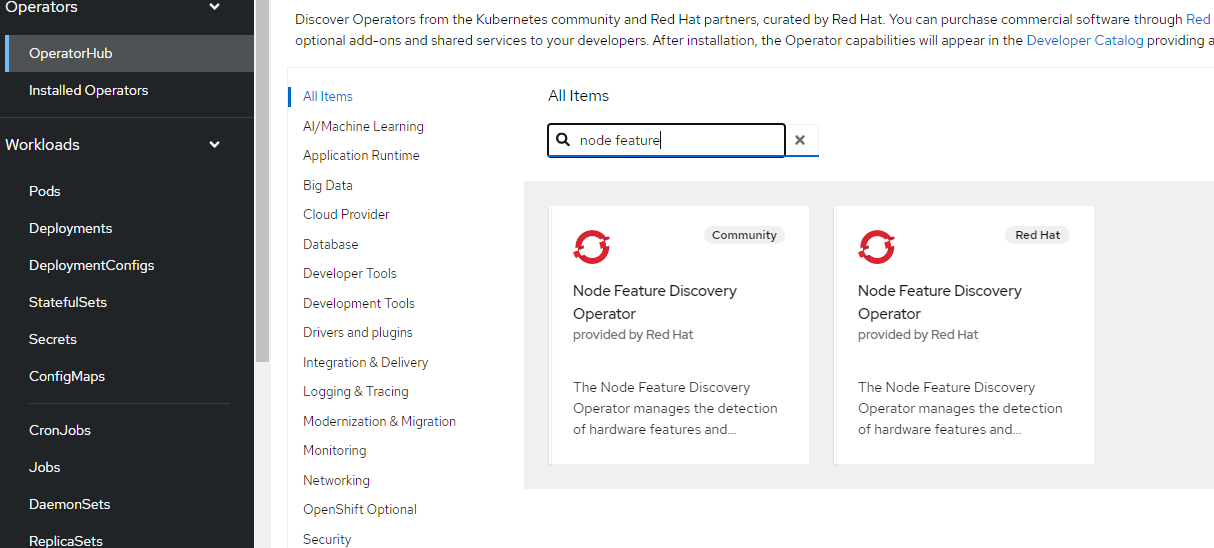

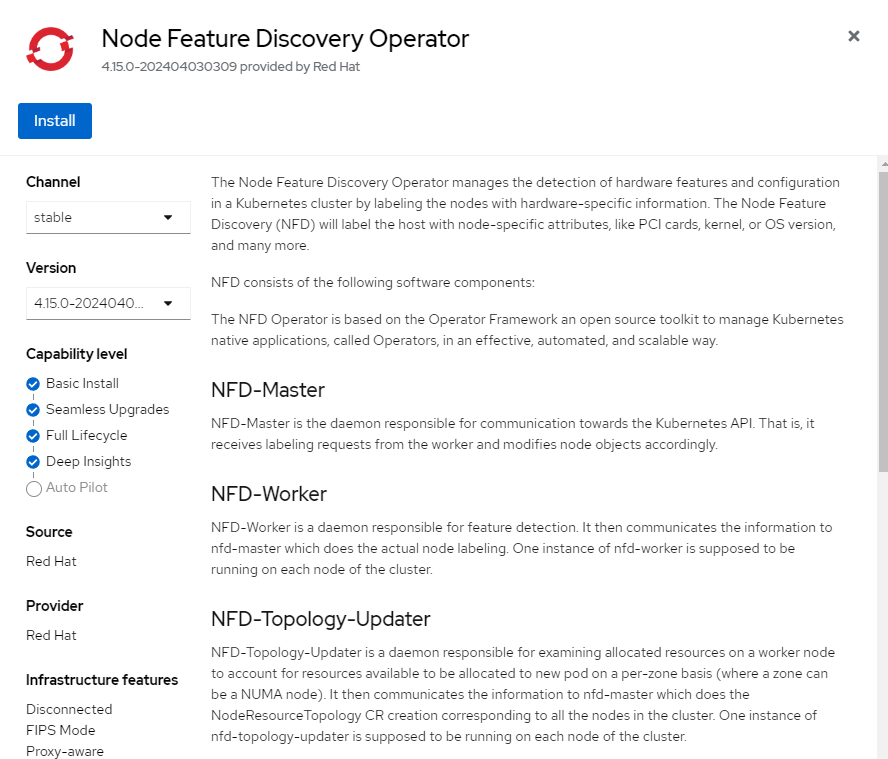

Installing Node Feature Discovery Operator

- In the OpenShift console, go to Operators --> OperatorHub and search for "node feature." There will be a community version of the operator and a Red Hat-supported version. Choose the Red Hat one.

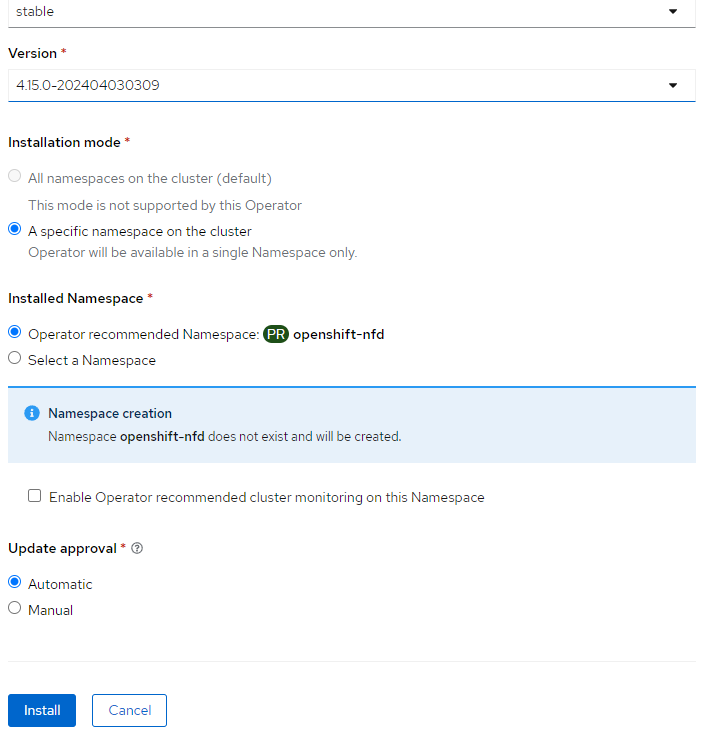

- Accept the defaults for the install. Click "Install."

- On the resulting screen, also accept the defaults and click "Install."

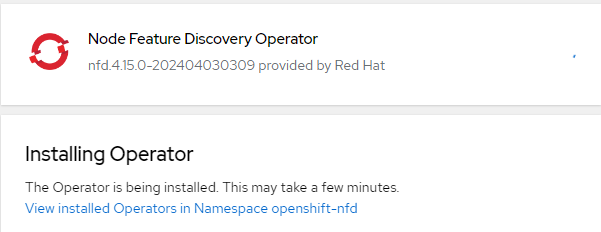

- The operator will install. Wait for this process to finish.

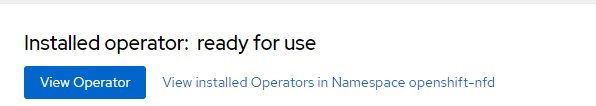

- The operator will show "ready for use." Now click "View Operator."

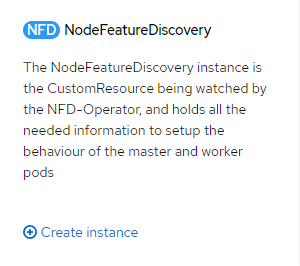

- Create an instance of the NodeFeatureDiscovery CRD (custom resource definition).

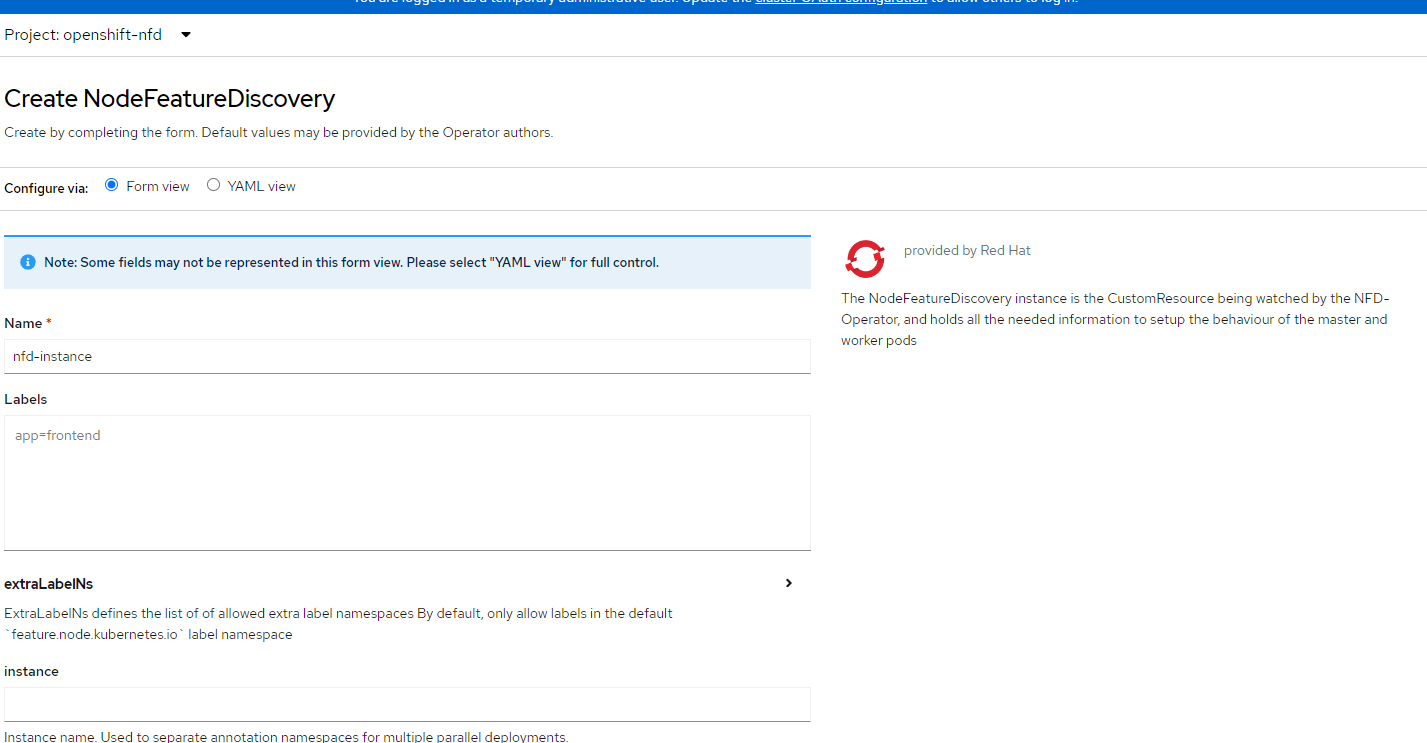

- Accepting the defaults on the resulting screen is sufficient for this purpose. Click "Create."

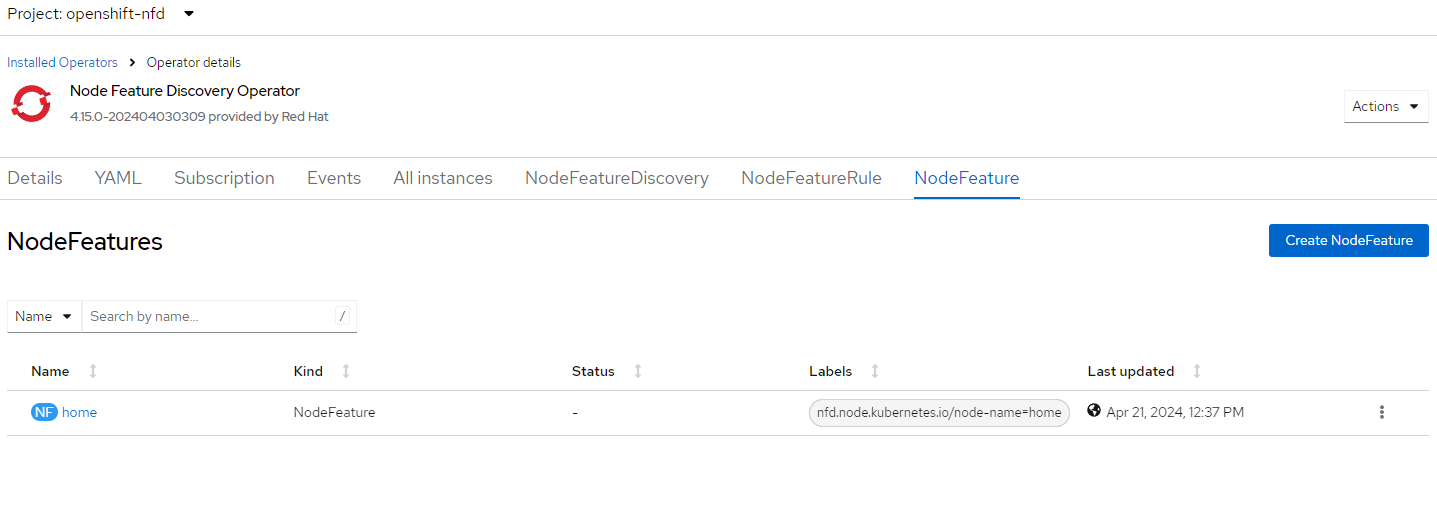

- We are not quite done yet. Creating the NodeFeatureDiscovery object lets NFD discover the devices on each node and record them in a NodeFeature object, as shown in the GUI.

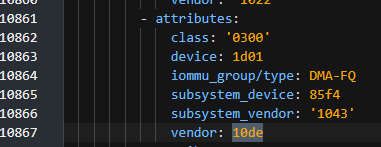

If you look at the YAML definition of this object, you will see a few devices with vendor ID "10de", which is Nvidia.

A quick search on Device Hunt shows that "1D01" is the device ID of this card. Here it is in the YAML of the NodeFeature object.

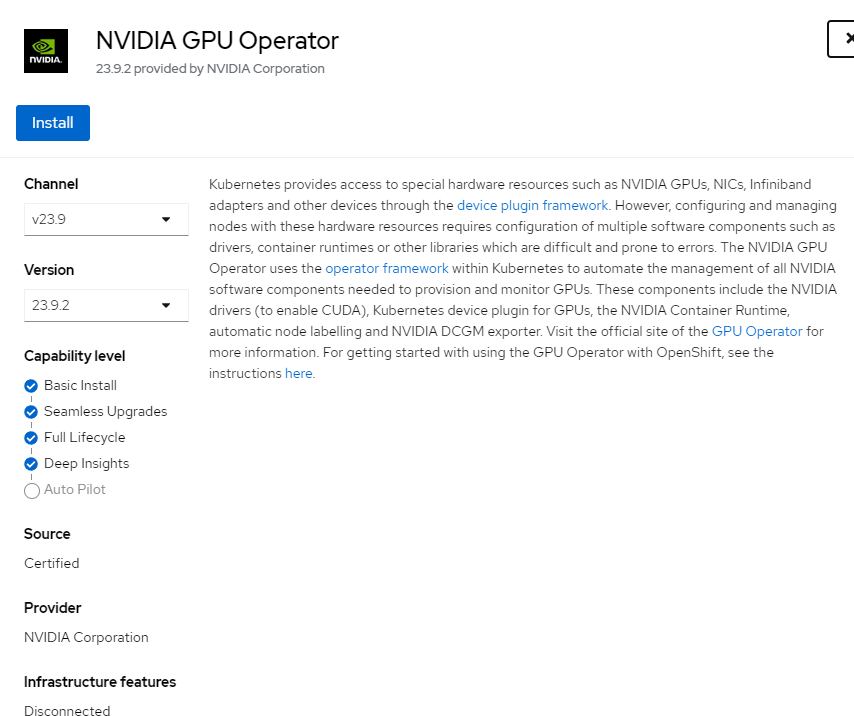
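The same check can be done from the command line. Below is a minimal sketch, assuming NFD is using its default label format (`feature.node.kubernetes.io/pci-<vendor>.present`); the function name `nvidia_nodes` is only an illustrative helper and not part of any tool.

```shell
# List the nodes that NFD has labeled as having an Nvidia PCI device (vendor ID 10de).
# Assumption: NFD applies its default PCI label, feature.node.kubernetes.io/pci-10de.present=true.
nvidia_nodes() {
  oc get nodes -l feature.node.kubernetes.io/pci-10de.present=true -o name
}
```

Running `nvidia_nodes` on my SNO cluster should print the single node. If nothing is printed, the NodeFeatureDiscovery instance may not have finished labeling the node yet.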

Installing Nvidia GPU Operator

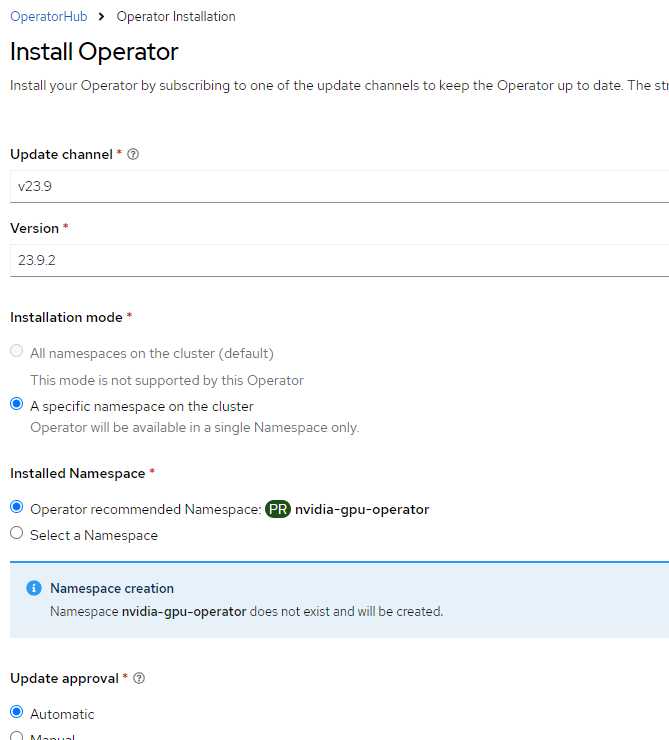

- In the OpenShift console, go to Operators --> OperatorHub and search for "Nvidia." Choose the "Nvidia GPU Operator."

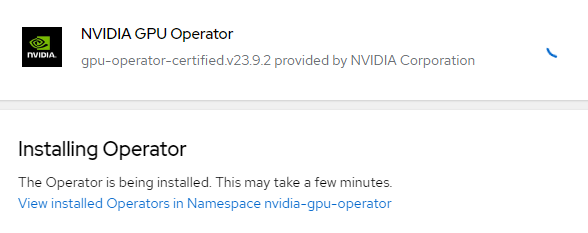

- Install with the default options.

- Wait for the operator to finish installing.

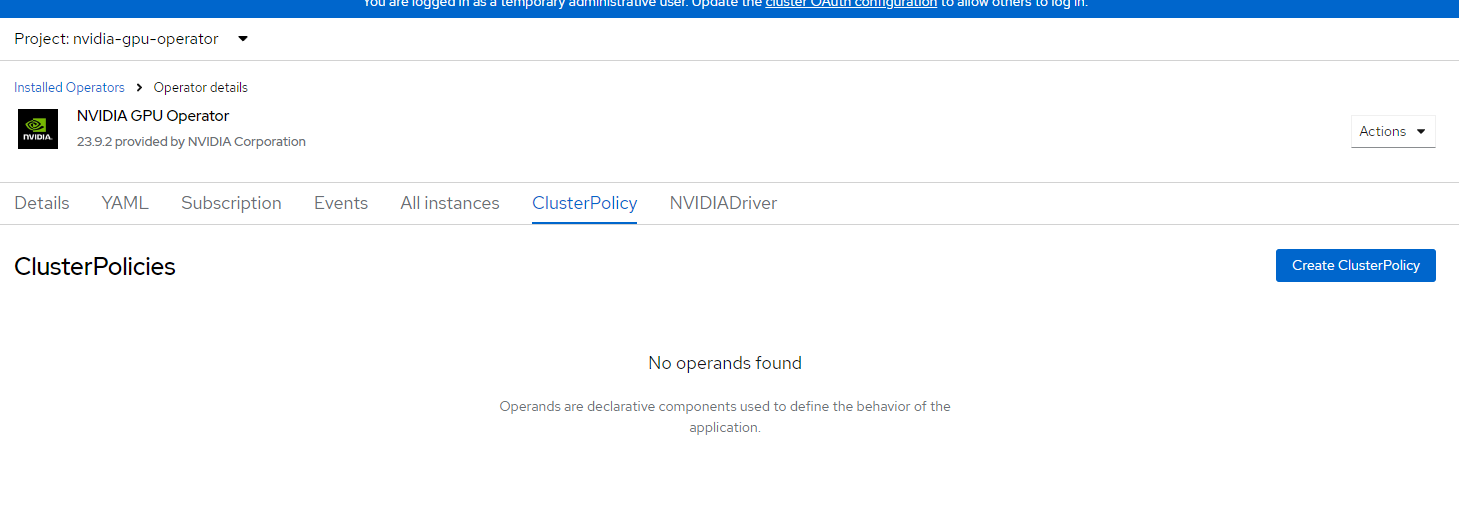

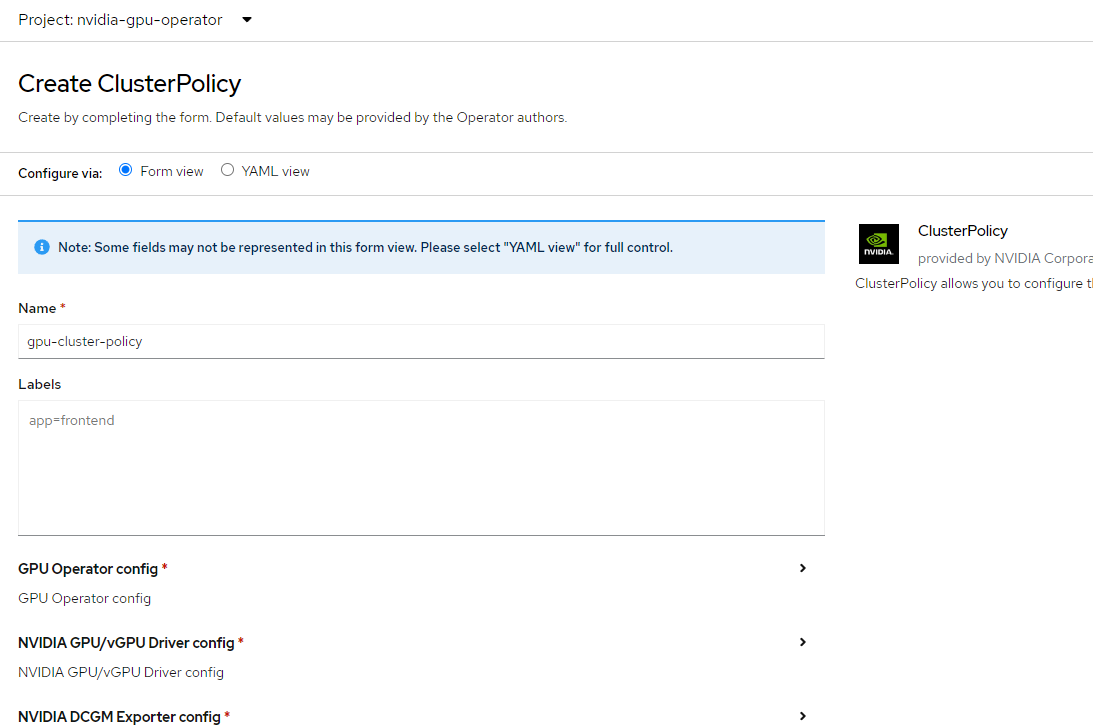

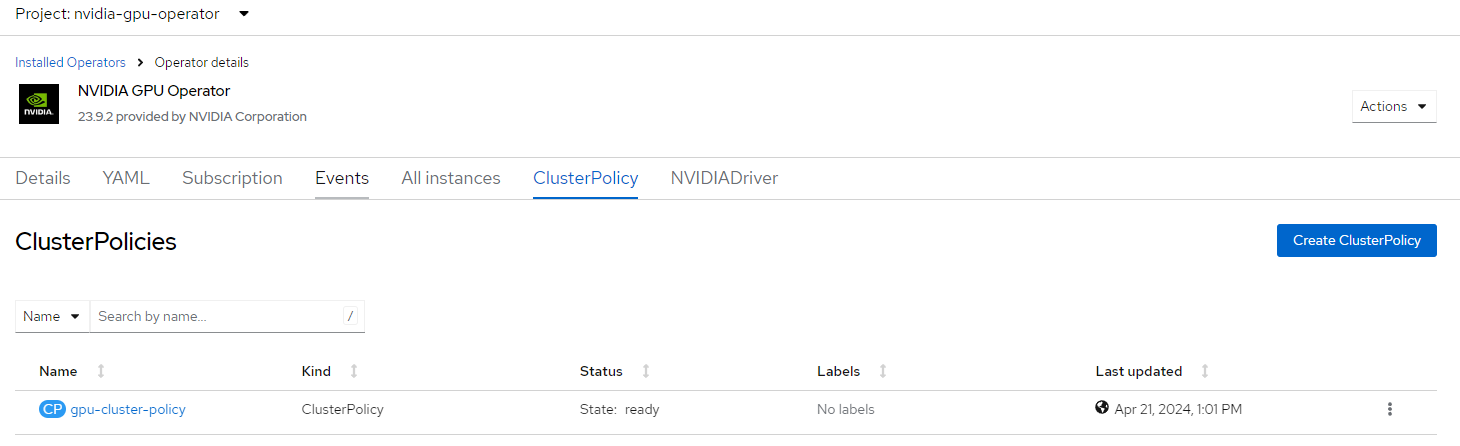

- After the operator is installed, create an instance of the "ClusterPolicy" CRD. Accept all of the default settings.

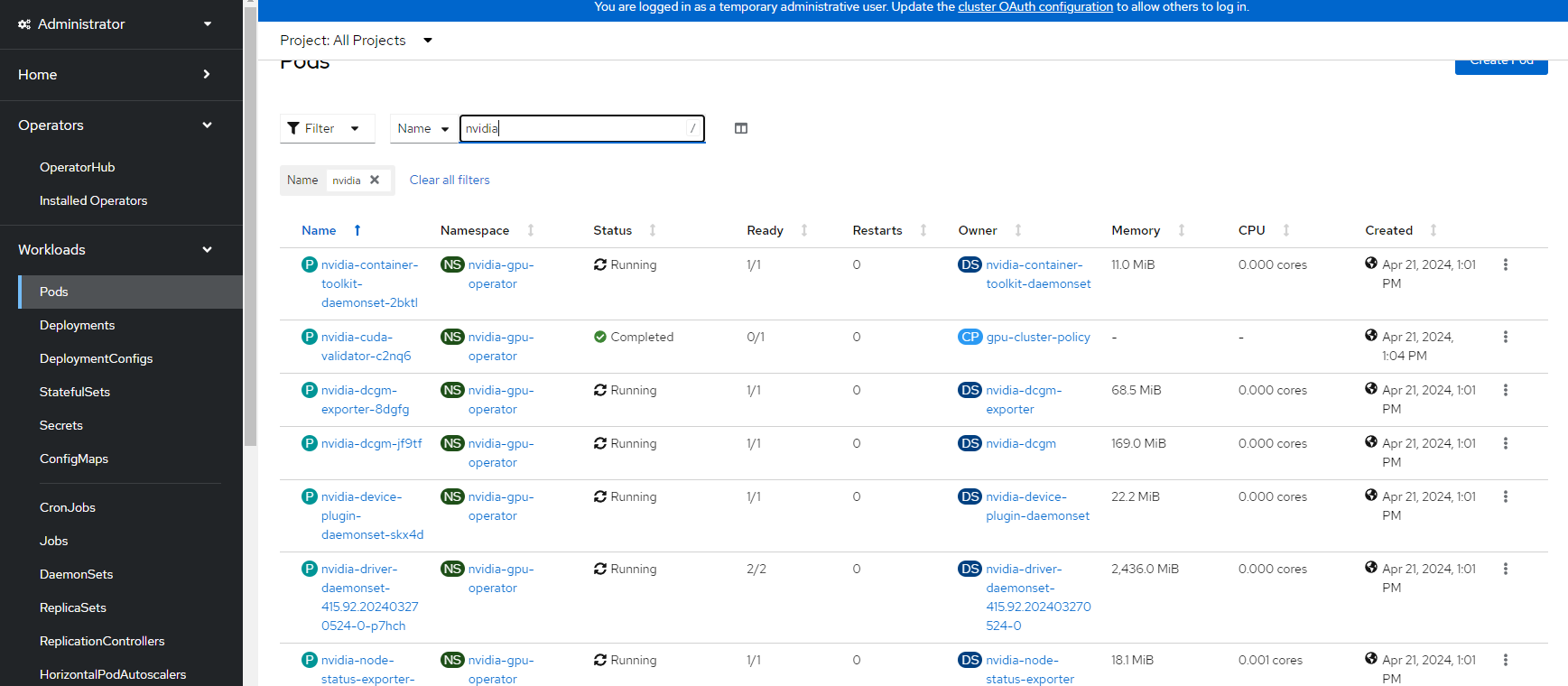

- This will take some time to become ready. In the meantime, you can monitor progress by watching the Nvidia pods in the cluster.

- After all of these pods come up, you should see the "gpu-cluster-policy" become "Ready."
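The monitoring steps above can also be sketched with `oc`. This assumes the operator was installed into the default `nvidia-gpu-operator` namespace and that the ClusterPolicy reports its overall state in `.status.state`; the function names are hypothetical helpers for illustration.

```shell
# Show the pods created by the GPU operator.
# Assumption: the operator lives in the default nvidia-gpu-operator namespace.
gpu_operator_pods() {
  oc get pods -n nvidia-gpu-operator
}

# Print the overall state reported by the ClusterPolicy.
# Assumption: the state is exposed in the .status.state field.
gpu_policy_state() {
  oc get clusterpolicy gpu-cluster-policy -o jsonpath='{.status.state}'
}

# Print each node name followed by the number of GPUs it advertises to the scheduler.
gpu_allocatable() {
  oc get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.allocatable.nvidia\.com/gpu}{"\n"}{end}'
}
```

Once the driver pod is running, `gpu_allocatable` should show a non-empty GPU count next to the node name, which is what lets a pod request `nvidia.com/gpu`.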

Testing the GPU

In my RHOAI cluster, I created a workbench with the CUDA image. See my previous article on how to create a workbench which houses the Jupyter Notebook:

This article references ODS, but the product has since been renamed Red Hat OpenShift AI (RHOAI). For the time being, some of the objects created in the cluster still reference both names. Both names refer to the same product.

The information below is taken from the following website:

- Create a test project

- In this project, create a pod using the following command:

      cat << EOF | oc create -f -
      apiVersion: v1
      kind: Pod
      metadata:
        name: cuda-vectoradd
      spec:
        restartPolicy: OnFailure
        containers:
        - name: cuda-vectoradd
          image: "nvidia/samples:vectoradd-cuda11.2.1"
          resources:
            limits:
              nvidia.com/gpu: 1
      EOF

This pod will run once and exit.

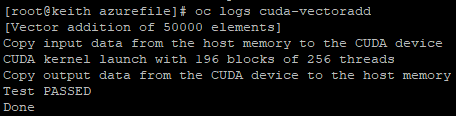

- To look at the output of the pod, run the following:

      oc logs cuda-vectoradd

If everything is working, you should see the following output:

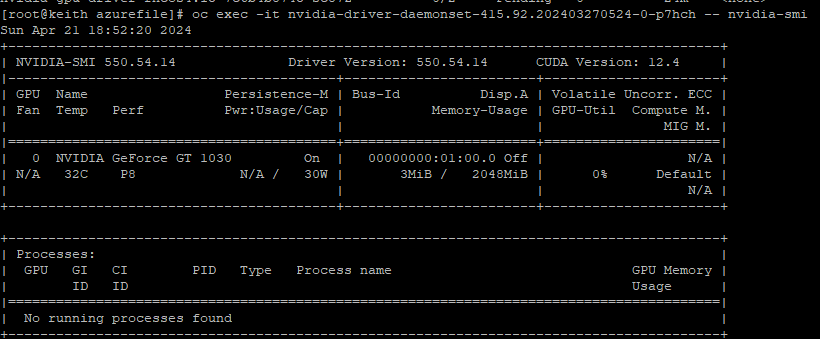
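If you would rather check the result in a script than read the log by eye, here is a small sketch. It assumes the sample prints the line "Test PASSED" on success, and that you are in the project where the pod was created; both function names are hypothetical helpers.

```shell
# Print the pod phase; a pod that ran to completion reports "Succeeded".
vectoradd_phase() {
  oc get pod cuda-vectoradd -o jsonpath='{.status.phase}'
}

# Return success if the sample's log contains its pass marker.
# Assumption: the vectoradd sample prints "Test PASSED" when the GPU computation succeeds.
vectoradd_passed() {
  oc logs cuda-vectoradd | grep -q "Test PASSED"
}
```

For example, `vectoradd_passed && echo "GPU test OK"` prints the message only when the marker is found in the pod log.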

- Lastly, to see some more information about the GPU, run the following commands:

      oc project nvidia-gpu-operator
      oc get pod -o wide -l openshift.driver-toolkit=true
      # Take note of the name of the nvidia-driver-daemonset pod and replace it in the following command
      oc exec -it nvidia-driver-daemonset-<fromyouroutput> -- nvidia-smi

- When this command finishes, you should see information about the GPU in the output.

Here, we see the GeForce GT 1030 that is on my system.
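To avoid copying the pod name by hand, the lookup and the `nvidia-smi` call can be combined. This is a minimal sketch that reuses the `openshift.driver-toolkit=true` label and the `nvidia-gpu-operator` namespace from the commands above; `run_nvidia_smi` is a hypothetical helper name, and it simply picks the first matching pod, which is fine on a single-node cluster.

```shell
# Find the driver daemonset pod by label and run nvidia-smi inside it.
# Assumption: the first pod returned is the one to inspect (true on a single-node cluster).
run_nvidia_smi() {
  smi_pod="$(oc get pod -n nvidia-gpu-operator -l openshift.driver-toolkit=true -o name | head -n 1)"
  if [ -z "$smi_pod" ]; then
    echo "no driver pod found in nvidia-gpu-operator" >&2
    return 1
  fi
  # "-o name" returns pod/<name>, which oc exec accepts directly.
  oc exec -n nvidia-gpu-operator "$smi_pod" -- nvidia-smi
}
```

Calling `run_nvidia_smi` should print the same GPU table as the manual command.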

I hope you liked this article. Much more to come soon about RHOAI.