Setting Up S3‑Bucket Storage on a Single‑Node OpenShift (SNO) Cluster

This article briefly shows how to install the LVM operator on your SNO cluster, install ODF (OpenShift Data Foundation) with the Multicloud Object Gateway functionality (to provide S3 buckets), and finally reference these buckets in your workloads.

A. Installing the LVM Operator for Storage

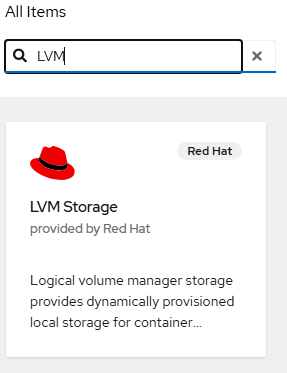

- Go to Operators --> OperatorHub and search for "LVM"

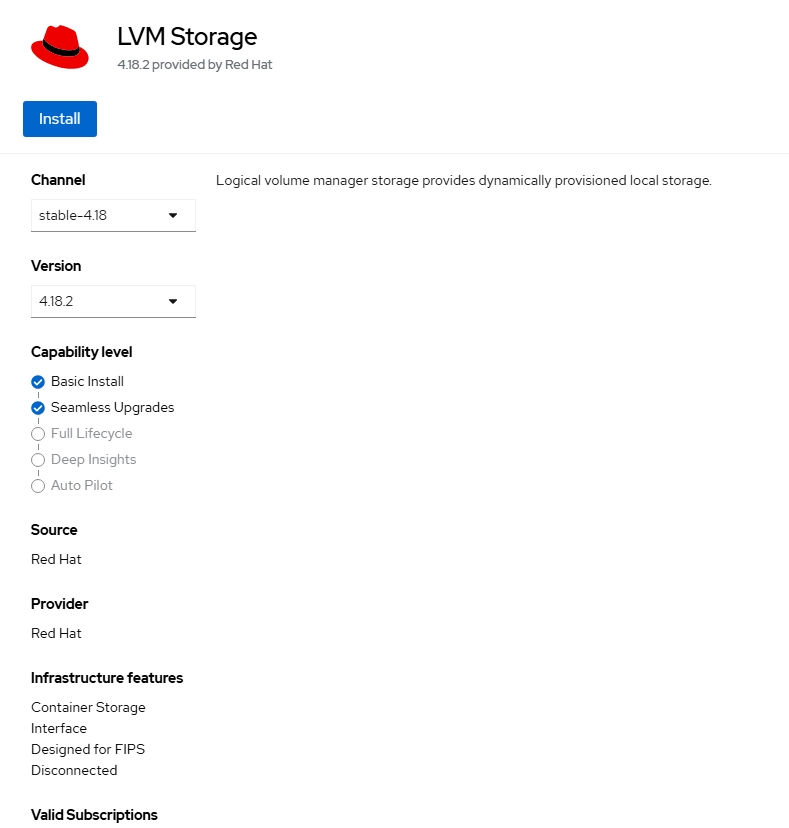

Click on this tile, click "Install" on the resulting page.

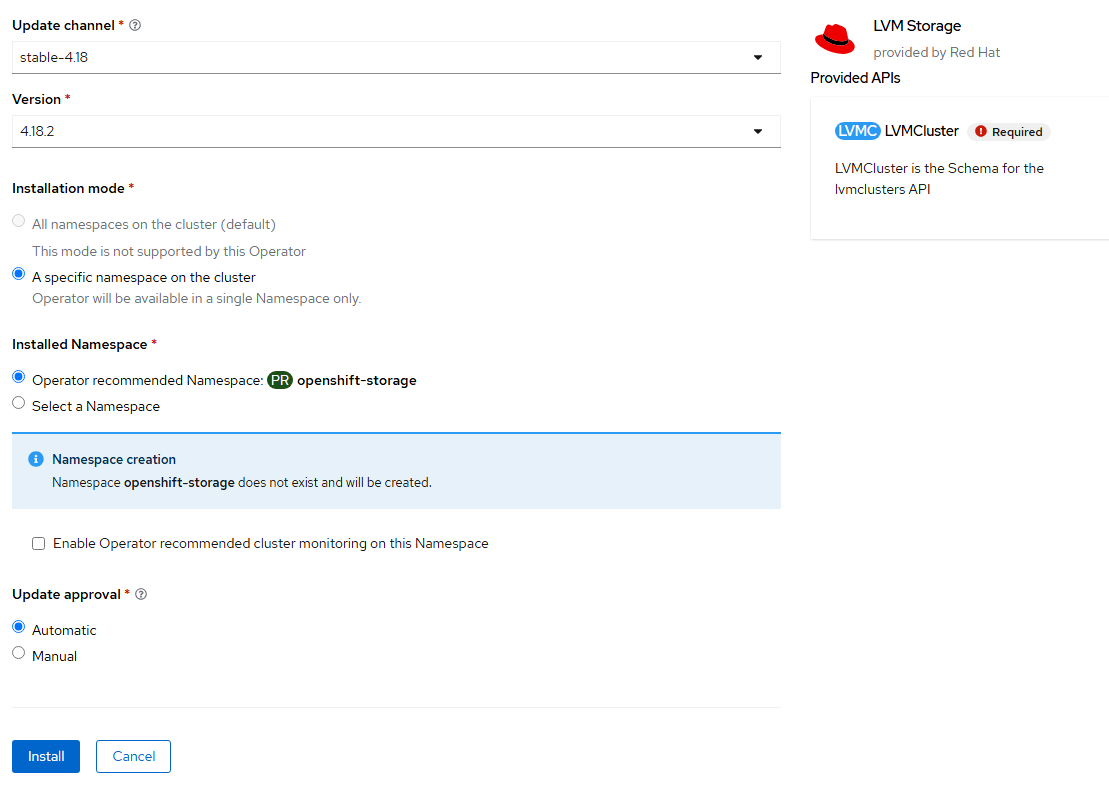

- Click "Install" again on the final confirmation page.

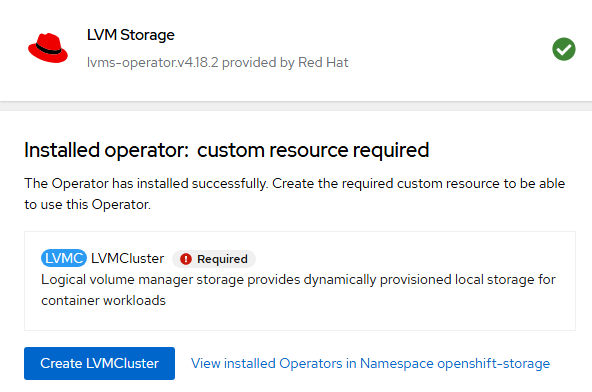

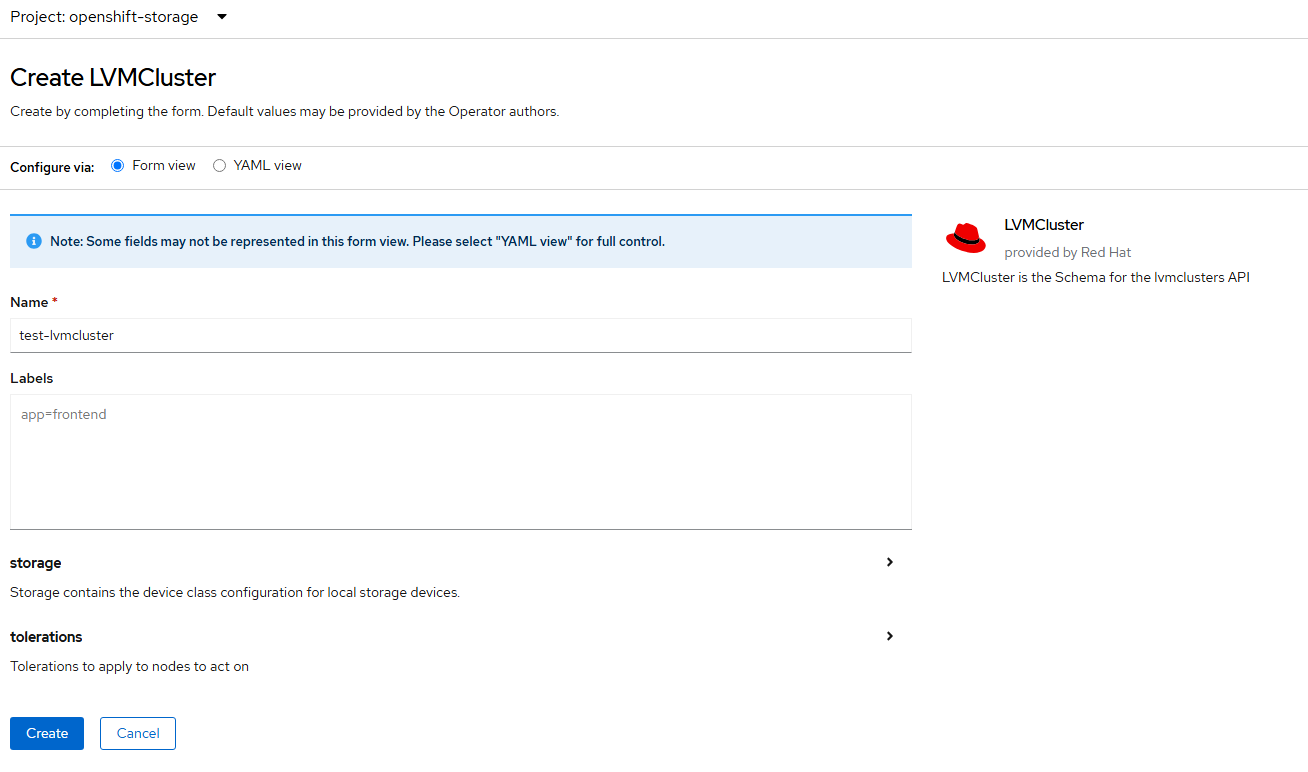
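If you prefer the CLI, the console steps above roughly correspond to creating a Namespace, an OperatorGroup, and a Subscription. This is a sketch under some assumptions:

- The operator package is named `lvms-operator` in the `redhat-operators` catalog.
- It installs into `openshift-storage`, the console default.
- The OperatorGroup and Subscription names are arbitrary.
- No `channel` is set, so OLM uses the package's default channel.

Check the LVM Storage documentation for your OpenShift version before relying on it.

```shell
# Sketch: install the LVM Storage operator from the CLI.
# Assumptions: package "lvms-operator" in "redhat-operators", namespace "openshift-storage".
oc apply -f - <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-storage
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-storage-operatorgroup   # arbitrary name
  namespace: openshift-storage
spec:
  targetNamespaces:
    - openshift-storage
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: lvms                              # arbitrary name
  namespace: openshift-storage
spec:
  name: lvms-operator                     # assumed package name in the catalog
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  installPlanApproval: Automatic
  # channel omitted: OLM falls back to the package's default channel
EOF

# The operator is installed once its ClusterServiceVersion shows "Succeeded".
oc get csv -n openshift-storage
```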

- Once the operator is installed, click "Create LVMCluster".

- On the next page, accept the defaults and click "Create".

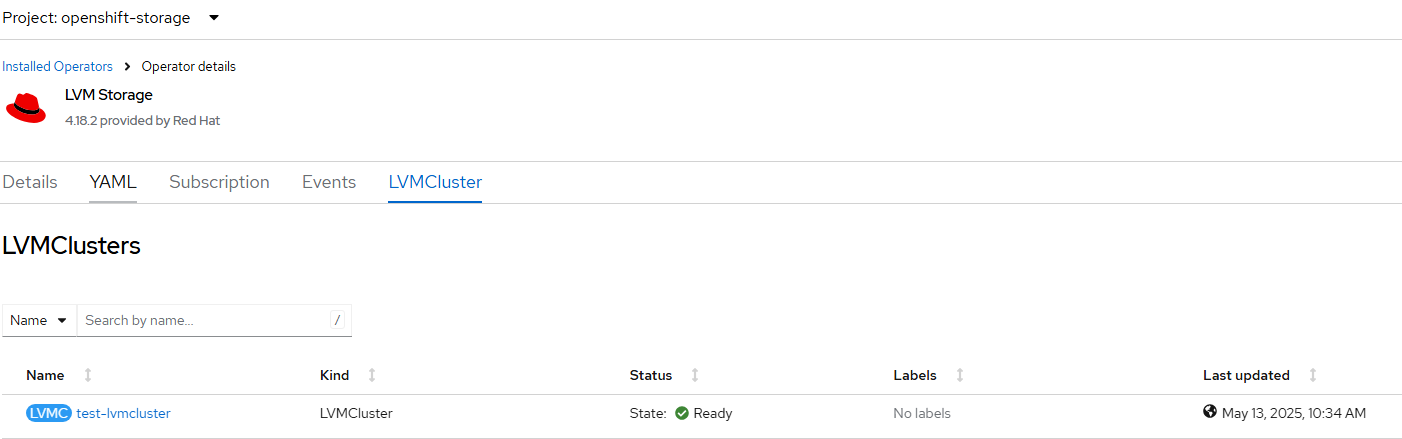

- After the installation completes, the status should show "Ready".
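Accepting the defaults creates an LVMCluster resource. Below is a sketch of a roughly equivalent manifest. It assumes the `lvm.topolvm.io/v1alpha1` API and the `test-lvm` name used in this article. The device class `vg1` is what produces the `lvms-vg1` storage class selected later for ODF. The thin-pool values mirror the usual console defaults but should be treated as assumptions. With no `deviceSelector`, LVM Storage is expected to claim all unused disks, which on this node is /dev/sdb.

```shell
# Sketch of the LVMCluster the console creates with default settings (values are assumptions).
oc apply -f - <<'EOF'
apiVersion: lvm.topolvm.io/v1alpha1
kind: LVMCluster
metadata:
  name: test-lvm
  namespace: openshift-storage
spec:
  storage:
    deviceClasses:
      - name: vg1                 # volume group name; storage class becomes "lvms-vg1"
        default: true             # mark this device class as the default
        thinPoolConfig:
          name: thin-pool-1
          sizePercent: 90         # share of the volume group used for the thin pool
          overprovisionRatio: 10
        # No deviceSelector: all unused disks (here /dev/sdb) are claimed
EOF

# Print the cluster state; it should eventually read "Ready".
oc get lvmcluster test-lvm -n openshift-storage -o jsonpath='{.status.state}{"\n"}'
```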

- I want to check a few things to ensure that the additional block device was added correctly.

Click on the test-lvm cluster resource.

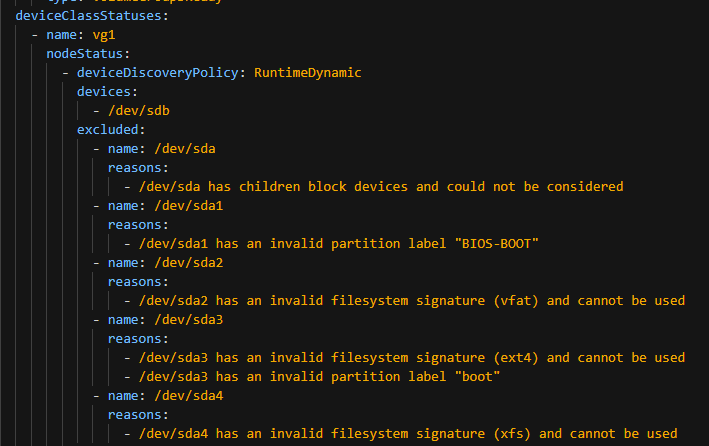

View the YAML definition.

In the screenshot below, /dev/sdb shows as added. Notice that /dev/sda is excluded because RHCOS (the node operating system) is already installed on it.

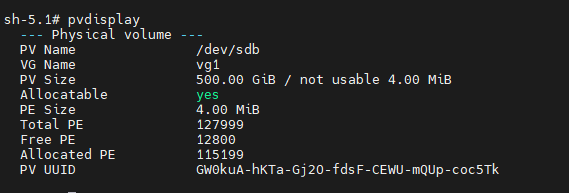
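The same status shown in the console's YAML view can be read with `oc`. The narrower query assumes the `status.deviceClassStatuses` layout of recent LVM Storage releases, which lists per-node devices and, in newer versions, excluded devices with a reason. If your version differs, use the full YAML output instead.

```shell
# Full LVMCluster, including the status section shown in the console's YAML tab.
oc get lvmcluster test-lvm -n openshift-storage -o yaml

# Only the per-device-class status (field layout may vary between versions).
oc get lvmcluster test-lvm -n openshift-storage -o jsonpath='{.status.deviceClassStatuses}{"\n"}'
```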

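You can also verify this from the node itself:

```shell
oc debug node/<nodename>   # start a debug pod on the node and open a shell in it
chroot /host               # switch into the host's root filesystem
pvdisplay                  # list LVM physical volumes on the host
```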

You should see that /dev/sdb was added to LVM and shows up as a physical volume.

We are good to go from an LVM perspective.

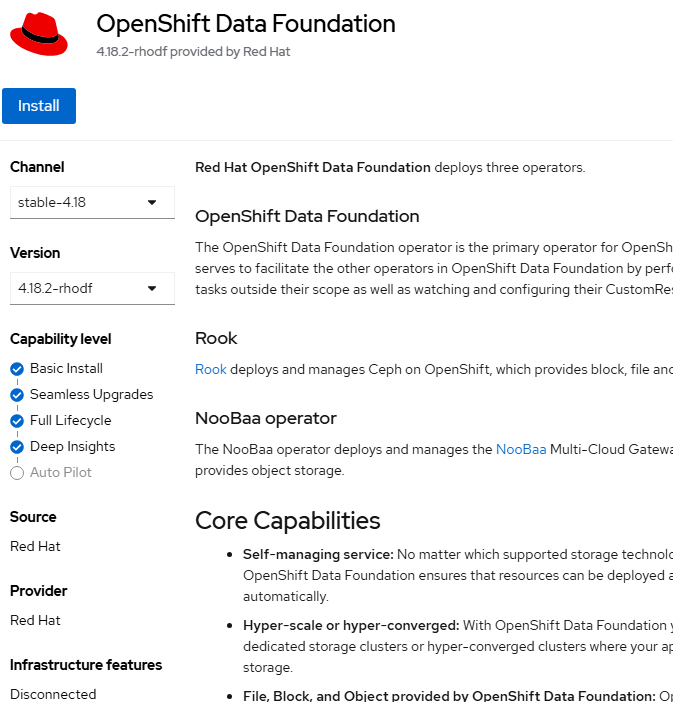
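The interactive session above can also be run non-interactively. `oc debug` runs whatever follows `--` inside the debug pod, so each command below starts a short-lived debug pod, runs one LVM command on the host, and exits. With the default device class, the volume group is expected to be named `vg1`; that name is an assumption based on the defaults.

```shell
# Replace <nodename> with your SNO node name (see "oc get nodes").
# Physical volumes on the host: /dev/sdb should be listed.
oc debug node/<nodename> -- chroot /host pvs

# Volume groups: expect one named after the device class (vg1 by default).
oc debug node/<nodename> -- chroot /host vgs
```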

B. Installing OpenShift Data Foundation for S3 Storage

Now that LVM is set up, an ODF instance with the Multicloud Object Gateway (NooBaa) will be deployed to provide S3 storage.

- Go to Operators --> OperatorHub and search for ODF. Make sure you click the tile labeled "OpenShift Data Foundation", as shown below, and not the client or multicluster orchestrator one.

Click on the tile.

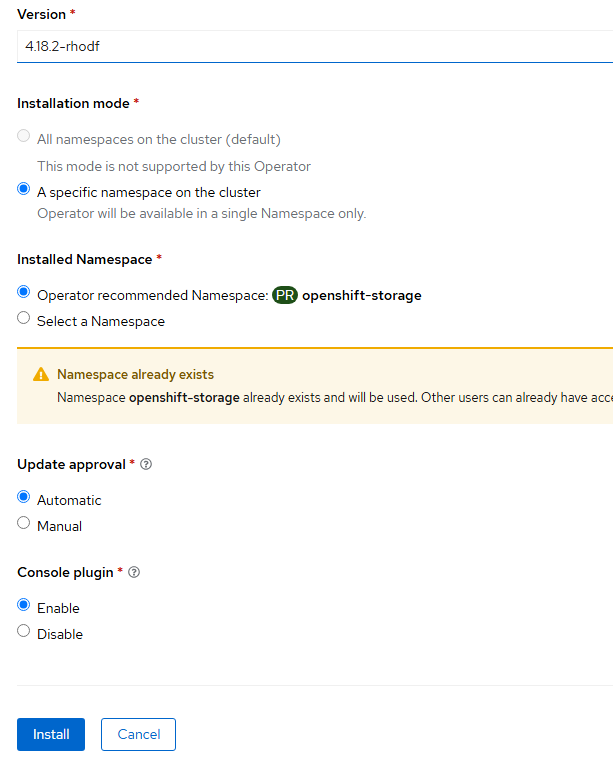

- Click "Install" on the next page. Accept all default settings.

- Click "Install" again on the final page. Accept all default settings.

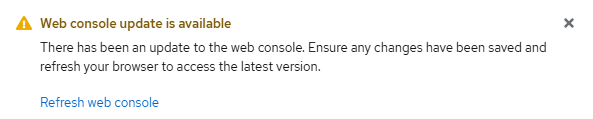
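The CLI equivalent of this operator install is a Subscription in the same namespace. If LVM Storage was installed into `openshift-storage` with the console defaults, that namespace and its OperatorGroup already exist, so only the Subscription is needed. The package name `odf-operator` and the omitted `channel` are assumptions to verify against the ODF documentation for your version.

```shell
# Sketch: subscribe to the ODF operator, reusing the OperatorGroup in openshift-storage.
oc apply -f - <<'EOF'
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: odf-operator
  namespace: openshift-storage
spec:
  name: odf-operator                      # assumed package name in the catalog
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  installPlanApproval: Automatic
  # channel omitted: OLM falls back to the package's default channel
EOF

# ODF pulls in several dependent operators; wait until all CSVs show "Succeeded".
oc get csv -n openshift-storage
```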

- After the operator installs, the web console will need to be refreshed as shown here:

Some new menus will be added on the left-hand side.

Under Storage, there will be an "OpenShift Data Foundation" item.

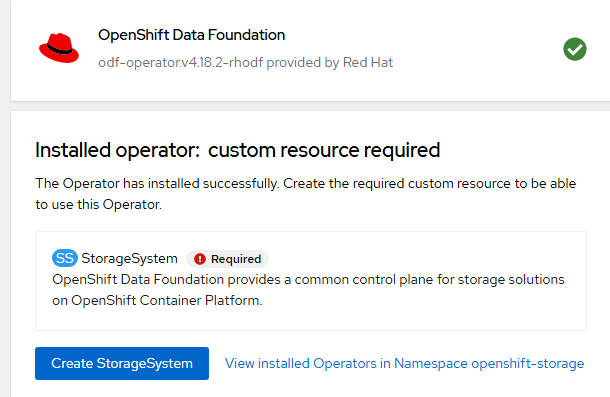

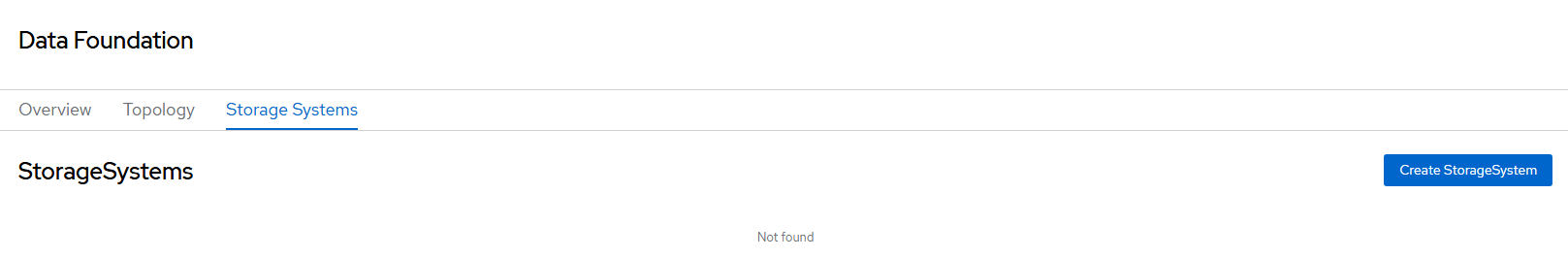

- Once the ODF operator is installed, click "Create StorageSystem".

Alternatively, you can do this from the Storage --> OpenShift Data Foundation --> Storage Systems menu.

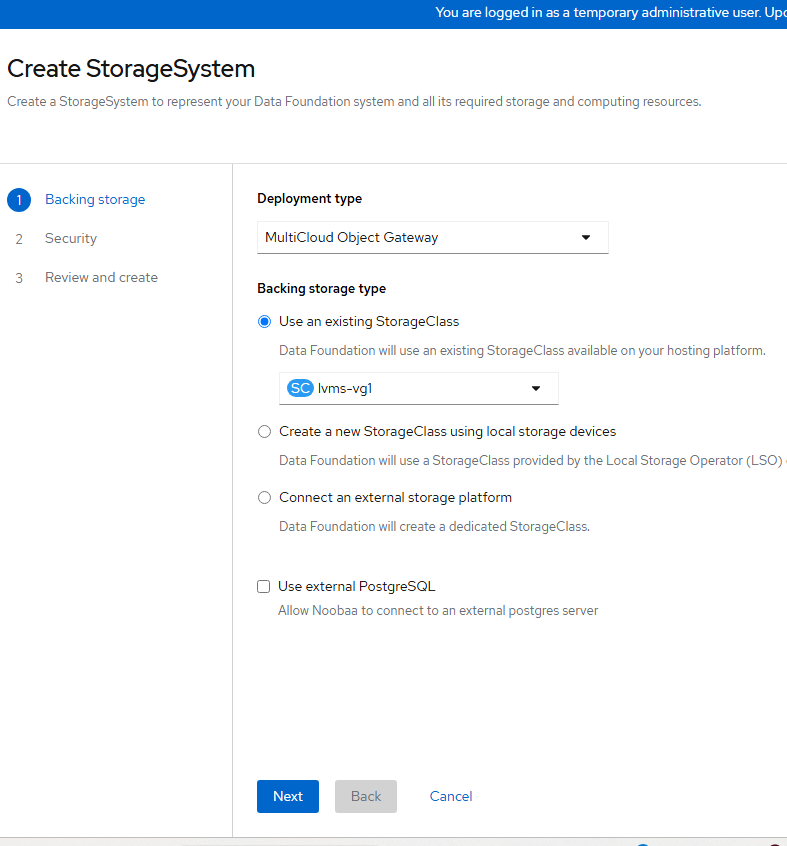

- On the resulting screen, change the "Deployment Type" to MultiCloud Object Gateway, select the "lvms-vg1" storage class, and click "Next".

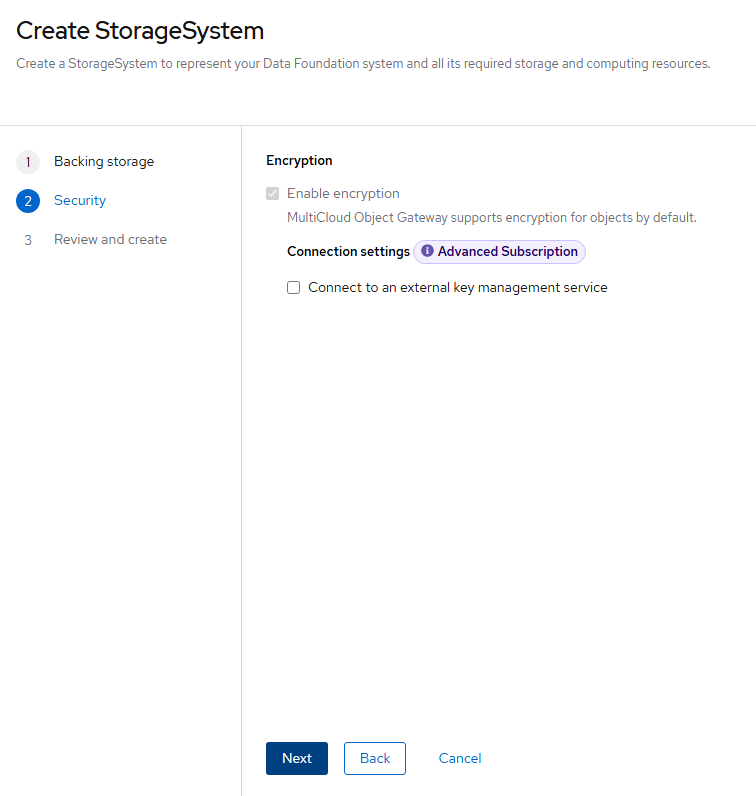

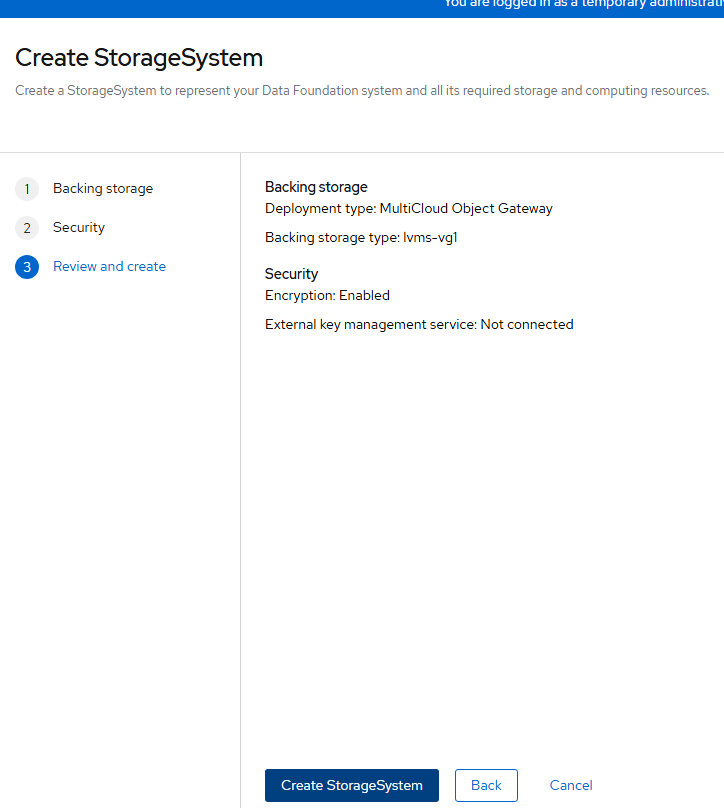

- Encryption will not be used. Click "Next" again.

- On the final screen, click "Create StorageSystem".
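Behind the wizard, an MCG-only deployment amounts to a StorageCluster that enables only the Multicloud Object Gateway. The manifest below is a sketch that assumes the `ocs.openshift.io/v1` StorageCluster fields `multiCloudGateway.reconcileStrategy: standalone` and `multiCloudGateway.dbStorageClassName`, as used for standalone MCG deployments. The console may also create related objects, such as the StorageSystem, so treat this as illustrative rather than a drop-in replacement for the wizard.

```shell
# Sketch: standalone Multicloud Object Gateway backed by the LVM storage class.
oc apply -f - <<'EOF'
apiVersion: ocs.openshift.io/v1
kind: StorageCluster
metadata:
  name: ocs-storagecluster
  namespace: openshift-storage
spec:
  multiCloudGateway:
    reconcileStrategy: standalone   # deploy only MCG (NooBaa), no Ceph storage
    dbStorageClassName: lvms-vg1    # NooBaa's database volume comes from LVM Storage
EOF
```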

- At this point, you can sit back and relax or have a coffee or beer 😄 as this will take some time (about 5 to 10 minutes).

Alternatively, you can watch the installation as it happens.

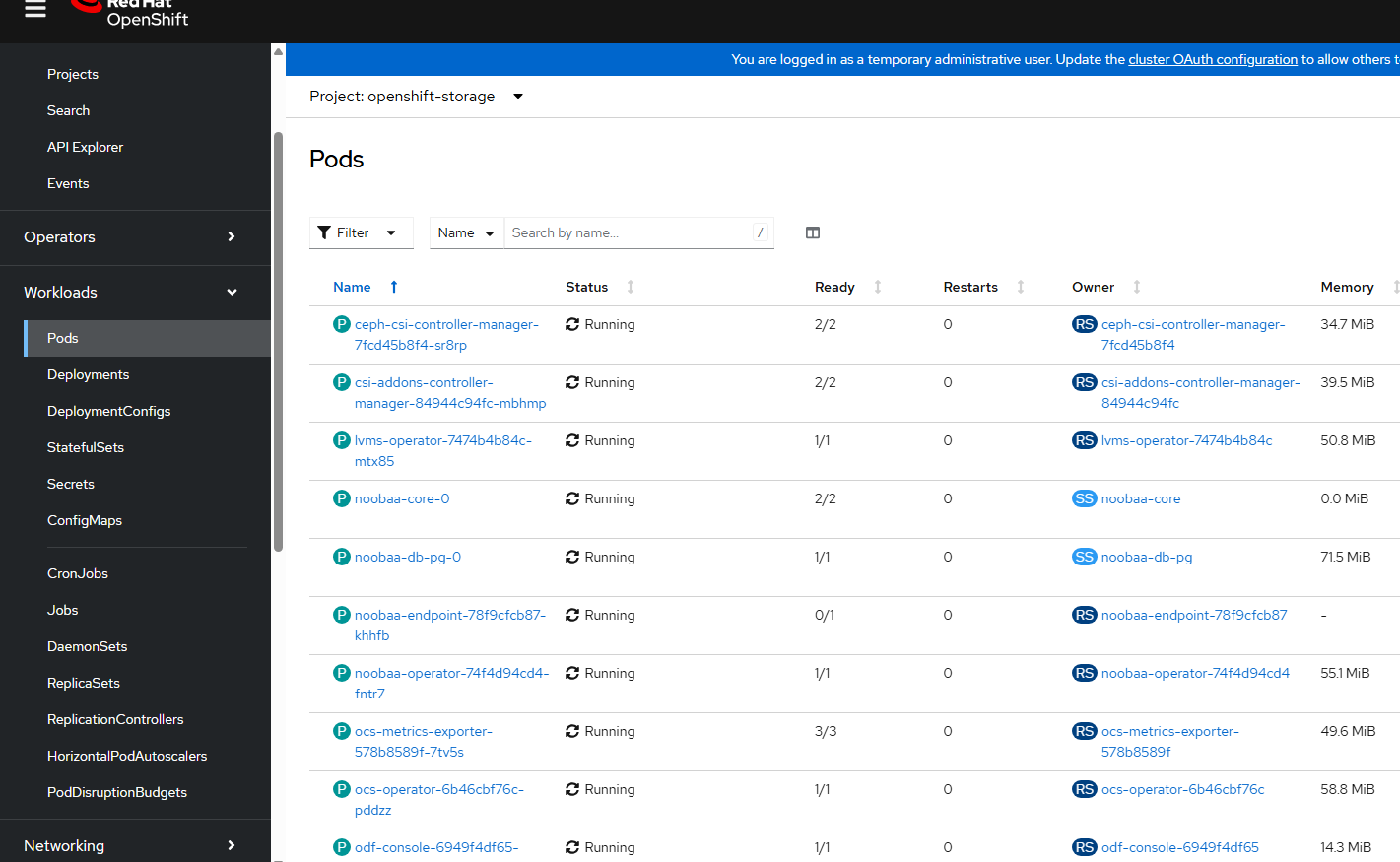

If you want to do this, go to Workloads --> Pods and select the "openshift-storage" namespace/project to see the new pods being created.

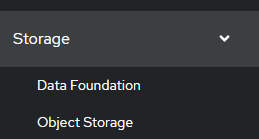

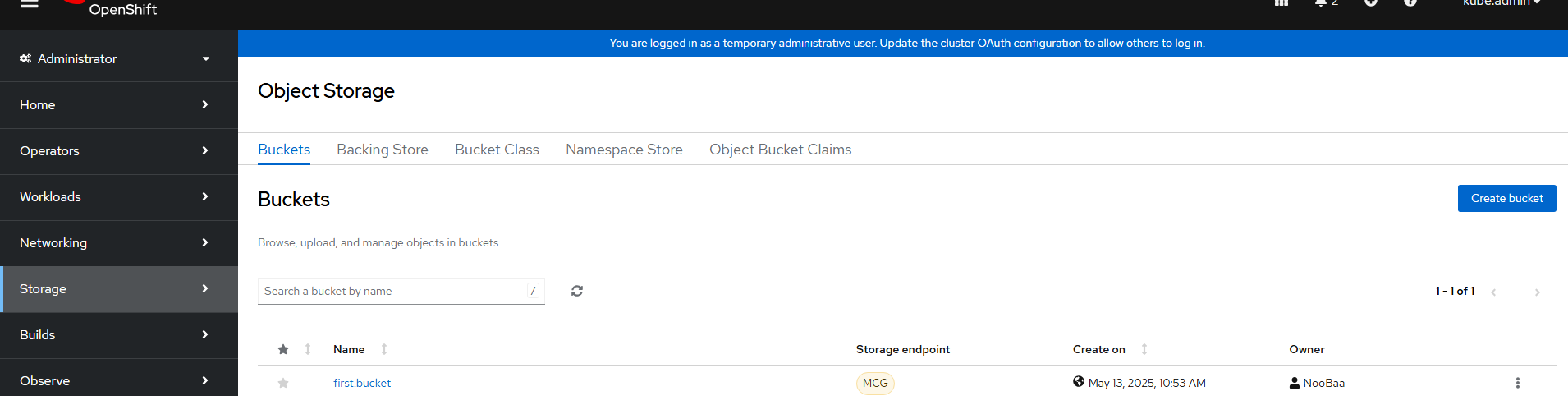
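The same progress can be followed from the CLI by watching pods in the namespace and checking the top-level resources. This assumes the NooBaa CRD is available as `noobaa` and the StorageCluster shows a phase column; both should eventually report "Ready".

```shell
# Stream pod changes in the namespace (Ctrl+C to stop).
oc get pods -n openshift-storage -w

# Overall status of the Multicloud Object Gateway and the StorageCluster.
oc get noobaa -n openshift-storage
oc get storagecluster -n openshift-storage
```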

- Partway through the install, a new menu item called "Object Storage" appears under Storage on the left-hand side, right below "Data Foundation". You may need to refresh your browser to see it.

- This menu takes some time to populate, but it eventually offers options such as Buckets, Backing Store, Bucket Class, Namespace Store, and Object Bucket Claims.
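Before creating a bucket claim, it can help to confirm that the objects the next section relies on exist. This sketch assumes the names ODF normally creates for an MCG deployment: the `openshift-storage.noobaa.io` storage class, plus the default bucket class and backing store in `openshift-storage`.

```shell
# Storage class used by object bucket claims.
oc get storageclass openshift-storage.noobaa.io

# Bucket classes and backing stores managed by NooBaa.
oc get bucketclass,backingstore -n openshift-storage
```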

C. Referencing S3 Bucket Storage for your Workloads

- Create an S3 bucket in your project and give it a descriptive name. Typical uses include Loki logging or an RHOAI model/pipeline.

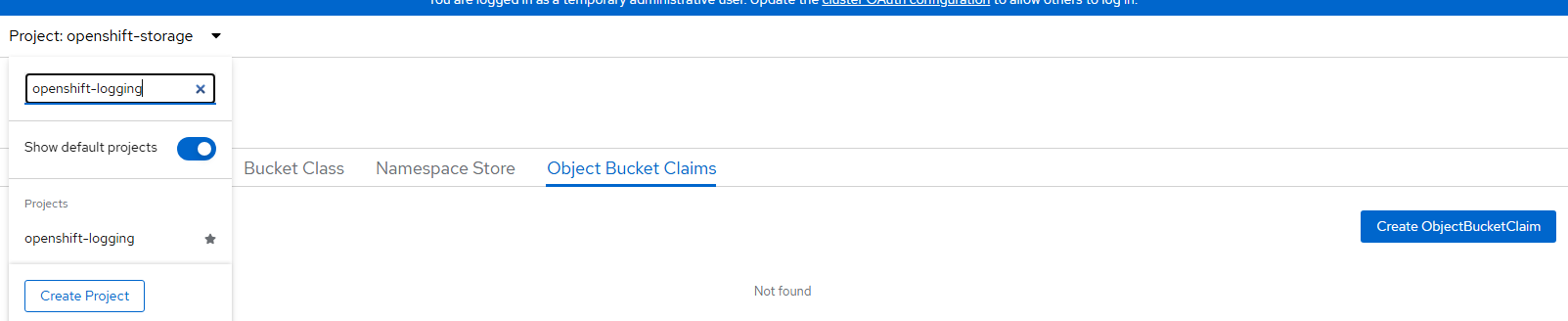

Go to Storage --> Object Storage --> Object Bucket Claims.

Change to the appropriate project/namespace.

Click "Create Object Bucket Claim".

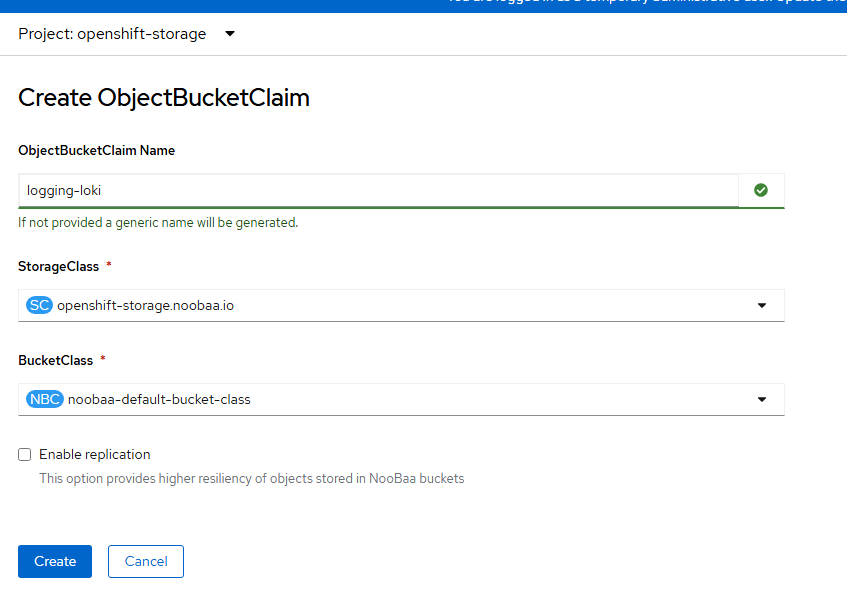

- In this example, the object bucket claim is named "logging-loki". Select the only storage class available (openshift-storage.noobaa.io) and the bucket class (noobaa-default-bucket-class), and click "Create".

- Once the claim is created, its connection details appear at the bottom of the screen. Reveal these values; you will use them to create a secret for (A) OpenShift Logging (Loki) or (B) an RHOAI (Red Hat OpenShift AI) workload.

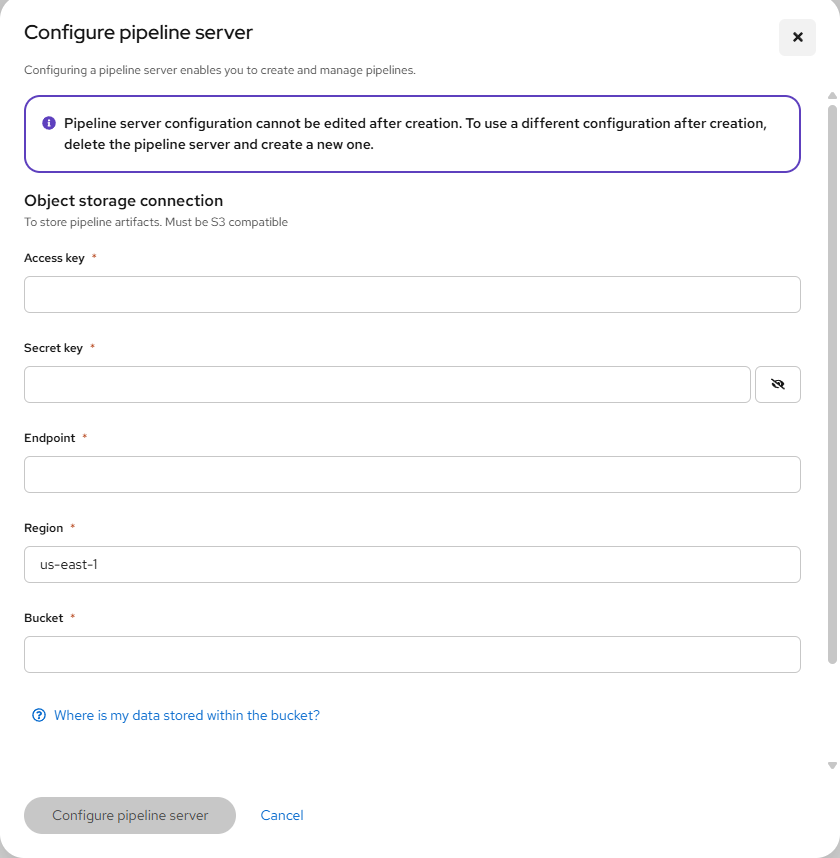
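The claim can also be created and read from the CLI, as an alternative to the console form rather than in addition to it. This sketch rests on three assumptions:

- It uses the `objectbucket.io/v1alpha1` ObjectBucketClaim API.
- The claim goes in `openshift-logging`, the namespace the Loki secret below uses, which must already exist.
- The provisioner writes a ConfigMap and a Secret named after the claim, with the keys `BUCKET_NAME`, `AWS_ACCESS_KEY_ID`, and `AWS_SECRET_ACCESS_KEY`.

With `generateBucketName`, a random suffix is appended to the prefix, which is where the random part of the bucket name comes from.

```shell
NS=openshift-logging
OBC=logging-loki

# Declarative equivalent of the console's "Create Object Bucket Claim" form.
oc apply -f - <<EOF
apiVersion: objectbucket.io/v1alpha1
kind: ObjectBucketClaim
metadata:
  name: ${OBC}
  namespace: ${NS}
spec:
  generateBucketName: ${OBC}          # prefix; a random suffix is appended
  storageClassName: openshift-storage.noobaa.io
  additionalConfig:
    bucketclass: noobaa-default-bucket-class
EOF

# Wait for the claim to report phase "Bound".
oc get obc "$OBC" -n "$NS"

# Bucket name from the ConfigMap created for the claim.
oc get configmap "$OBC" -n "$NS" -o jsonpath='{.data.BUCKET_NAME}{"\n"}'

# Credentials from the Secret created for the claim (stored base64-encoded).
oc get secret "$OBC" -n "$NS" -o jsonpath='{.data.AWS_ACCESS_KEY_ID}' | base64 -d; echo
oc get secret "$OBC" -n "$NS" -o jsonpath='{.data.AWS_SECRET_ACCESS_KEY}' | base64 -d; echo
```

If the claim was already created in the console, only the read-only `oc get` commands are needed.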

A. Fill in the values in the logging-loki-s3-secret.yaml file:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: logging-loki-s3
  namespace: openshift-logging
stringData:
  access_key_id: <access_key_id from console>
  access_key_secret: <access_key_secret from console>
  bucketnames: <bucket name from console>
  endpoint: https://s3.openshift-storage.svc:443
```

Then create the secret with `oc apply -f logging-loki-s3-secret.yaml`.

B. RHOAI Pipeline S3 Reference (Example)
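In RHOAI, pipelines and model servers typically read S3 details from a data connection, which is a Secret in the data science project. The sketch below assumes the key names, labels, and annotations used by RHOAI/Open Data Hub data connections. The connection format has changed across releases, so check the RHOAI documentation for your version. Other assumptions:

- The project `my-ds-project`, the claim `pipeline-bucket`, and the secret name are hypothetical. The claim must be created in that project first, as in the Loki example.
- The region is a placeholder.
- An in-cluster HTTPS endpoint signed by the service CA may need additional CA trust configuration in RHOAI.

```shell
# Hypothetical project and claim names; the claim must already exist in this project.
NS=my-ds-project
OBC=pipeline-bucket

# Read the values generated for the claim.
BUCKET=$(oc get configmap "$OBC" -n "$NS" -o jsonpath='{.data.BUCKET_NAME}')
KEY_ID=$(oc get secret "$OBC" -n "$NS" -o jsonpath='{.data.AWS_ACCESS_KEY_ID}' | base64 -d)
KEY_SECRET=$(oc get secret "$OBC" -n "$NS" -o jsonpath='{.data.AWS_SECRET_ACCESS_KEY}' | base64 -d)

# Data connection Secret (assumed RHOAI key names, labels, and annotations).
# Unquoted EOF so the shell expands the variables above.
oc apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: aws-connection-${OBC}          # hypothetical name
  namespace: ${NS}
  labels:
    opendatahub.io/dashboard: "true"
    opendatahub.io/managed: "true"
  annotations:
    opendatahub.io/connection-type: s3
    openshift.io/display-name: ${OBC}
type: Opaque
stringData:
  AWS_ACCESS_KEY_ID: "${KEY_ID}"
  AWS_SECRET_ACCESS_KEY: "${KEY_SECRET}"
  AWS_S3_ENDPOINT: https://s3.openshift-storage.svc:443
  AWS_S3_BUCKET: "${BUCKET}"
  AWS_DEFAULT_REGION: us-east-1        # placeholder; many S3 clients require a value
EOF
```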

Most of this information was taken from my https://myopenshiftblog.com/single-node-openshift-sno-observability/ article, but I wanted to re-post it as a separate set of instructions since it applies in multiple cases, as mentioned here.