OpenShift Virtualization: Networking 101, Routes, and MetalLB to Load Balance VMs

In this post, I will walk you through the basic networking constructs of OpenShift/Kubernetes, including pod networking and services, from a virtual machine perspective. At the end, this is expanded to cover MetalLB, which will be used to load-balance two web servers, each running on a separate virtual machine.

Creating Two VMs in Same Namespace

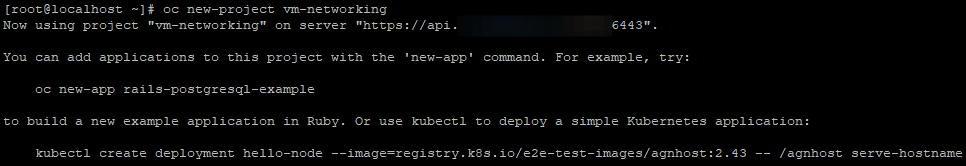

- Create a namespace/project called vm-networking

oc new-project vm-networking

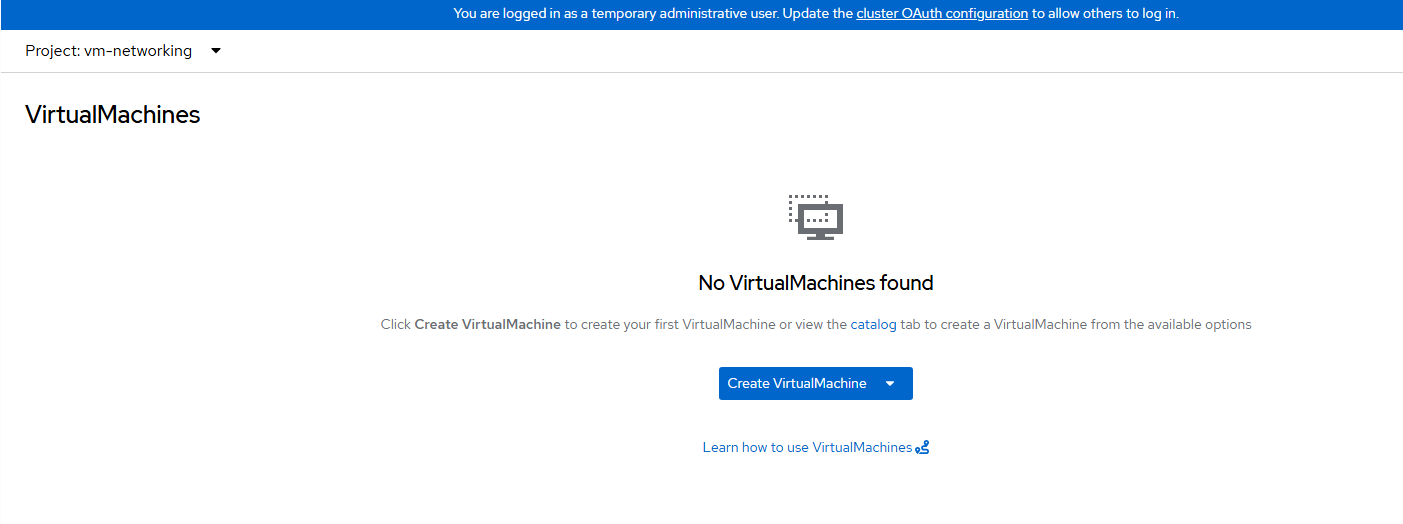

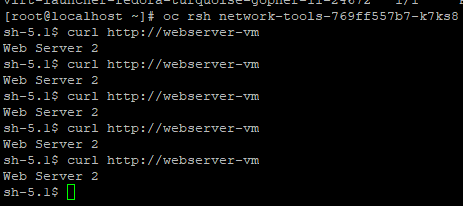

- In the OpenShift web console, let's create two VMs, both based on the Fedora template.

Go to Virtualization --> Virtual Machines

- Change to the new project that was just created called "vm-networking".

Click on "Create VirtualMachine".

- Choose "From Template".

On the resulting screen, choose "Fedora VM".

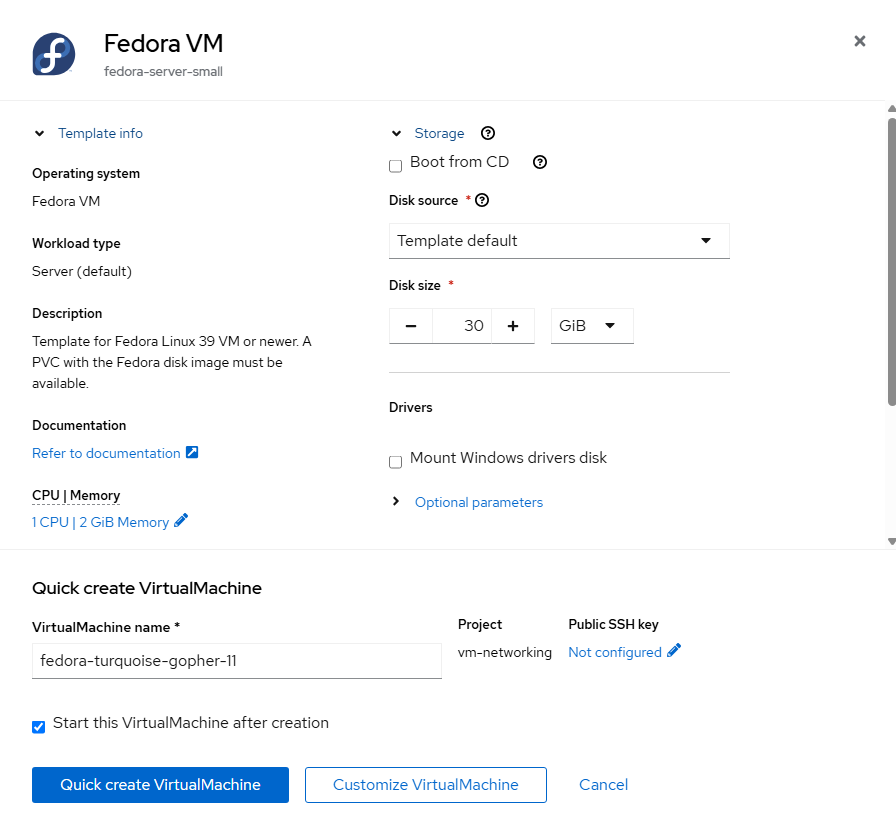

- Accept the defaults and click "Quick create VirtualMachine".

The name of the VM is randomly generated, so your VM names will differ from the ones shown here.

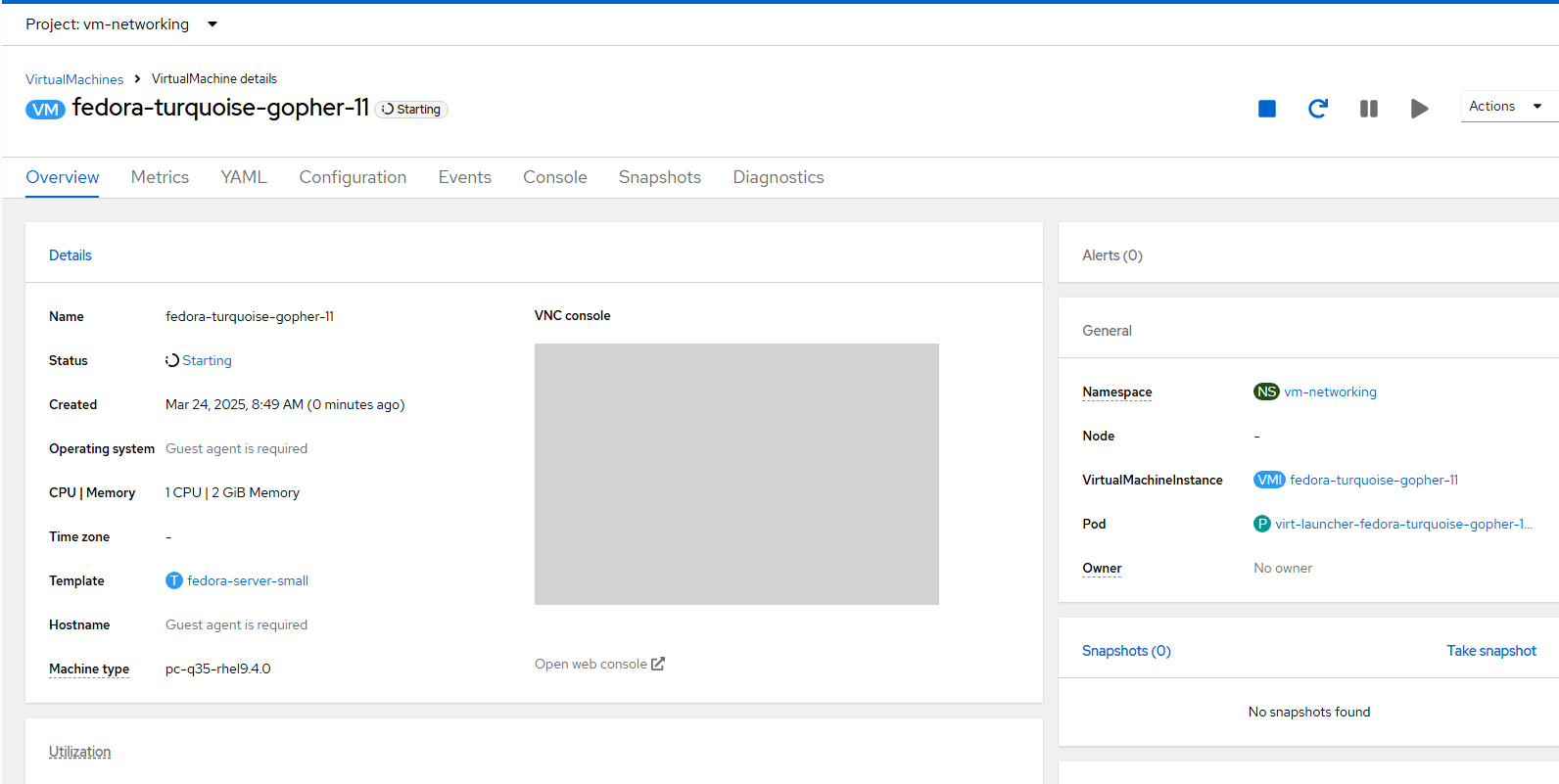

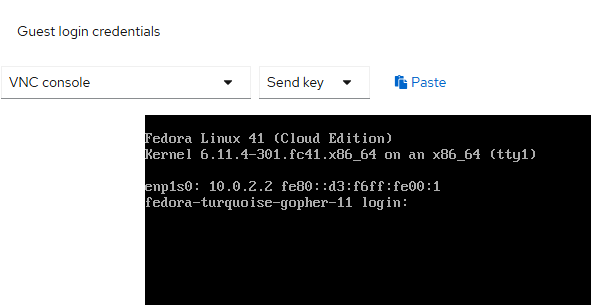

- Wait for the virtual machine to start. Once it has started, the status will change to "Running" and a login prompt will appear in the VNC console.

- Take note of the name of this VM. In this case, it is called "fedora-turquoise-gopher-11".

Go to the "Console" tab.

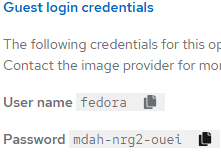

- Click the "Guest login credentials" link on this page to get the username and password for logging in to this VM.

Click the copy icon (two sheets of paper) to the right of the password to copy it to your clipboard. There is also a paste button that pastes the password into the console.

Repeat these steps for one additional VM.

Getting IP Address Information

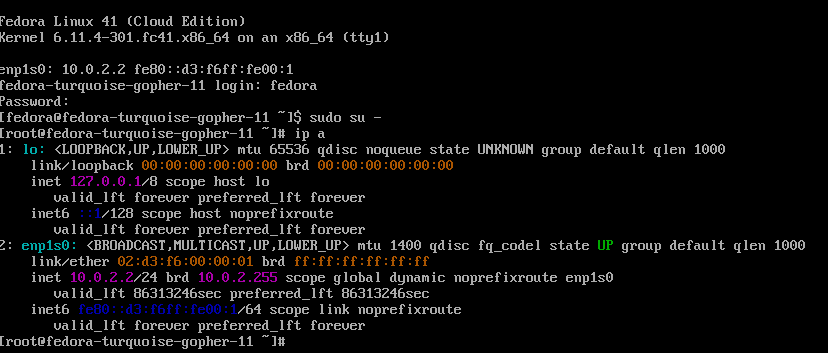

- Run the "ip a" command. You will see an IP address of 10.0.2.2. Each VM will have this same internal IP address (assuming the default pod networking setup was configured), so it cannot be used to reach one VM from another.

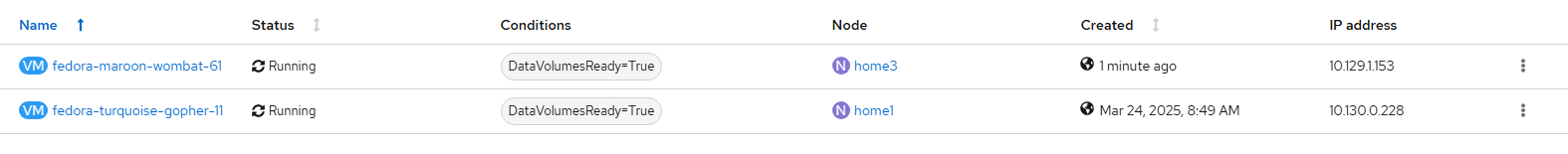

- The IP address used for this demo is the IP of the virt-launcher pod that runs the VM. To find it, go to the Virtualization --> Virtual Machines menu in the console if you are not already there.

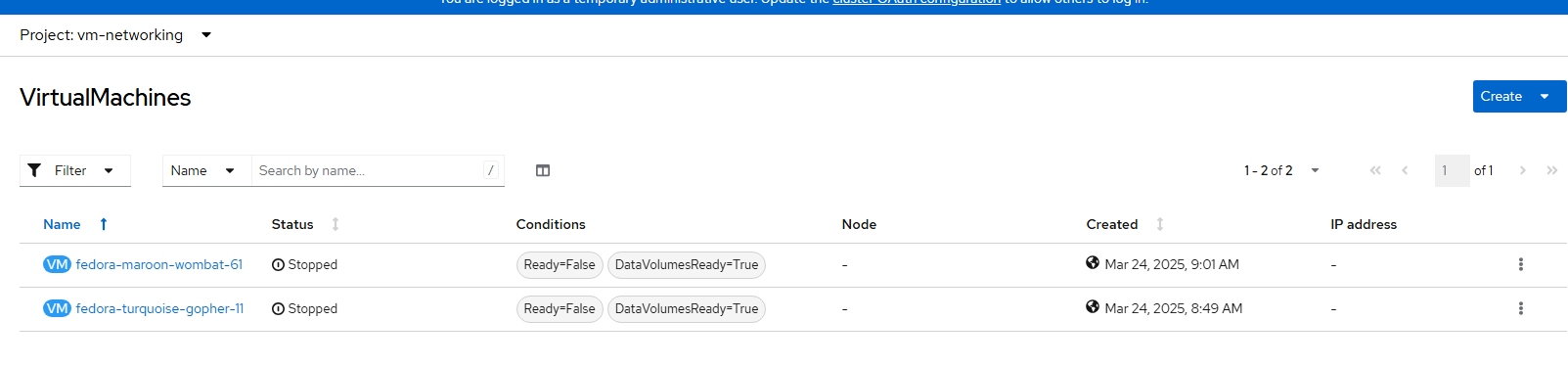

This screen will appear as follows:

Take note of the 2 IP addresses listed here. These will be used throughout this demonstration.

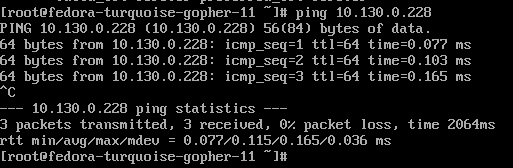

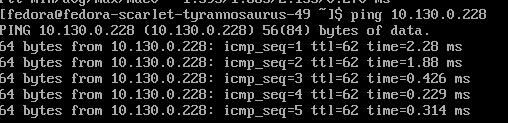

- From the first VM that was created (fedora-turquoise-gopher-11), try pinging the 10.130.0.228 IP which is associated with the other VM (fedora-maroon-wombat-61).

The ping should work.

Creating a VM in Second Namespace

- Create a project/namespace called vm-networking-2

oc new-project vm-networking-2

- Follow the same steps listed above to create a VM in this project/namespace.

From this new VM, ping the IP of one of the VMs in the vm-networking project/namespace.

The ping succeeds, which shows that by default a VM in one namespace/project can reach a VM in another namespace/project.

Restricting Network Access

This demonstration is not about NetworkPolicy objects, but a basic example will help make this article complete. The rule will be applied to show the result and then removed for the rest of the demonstration.

- To only allow access from virtual machines in the same namespace, apply the following. In this example, the YAML file is called allow-same-namespace.yaml, and it blocks all incoming traffic that originates outside of the vm-networking namespace/project.

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-same-namespace
spec:
  podSelector: {}
  ingress:
    - from:
        - podSelector: {}

- Apply this policy.

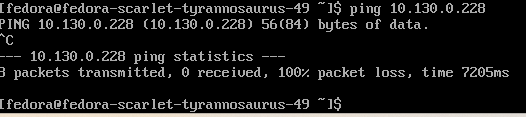

oc apply -f allow-same-namespace.yaml -n vm-networking

- Now, attempt the ping again from the VM in the vm-networking-2 namespace/project.

This ping will time out as shown here.
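The effect of this policy can be sketched in a few lines of Python. This is only a simplified model, not how the cluster network plugin evaluates policies: the function name `ingress_allowed` and its arguments are hypothetical, and it captures just the one rule used here (an empty podSelector under `from` matches pods in the policy's own namespace only).

```python
def ingress_allowed(src_namespace: str, dst_namespace: str,
                    policy_namespaces: set[str]) -> bool:
    """Simplified model of the allow-same-namespace policy.

    policy_namespaces holds the namespaces where the policy is applied.
    Without the policy, all ingress is allowed (the cluster default).
    With it, only pods from the destination's own namespace may connect.
    """
    if dst_namespace not in policy_namespaces:
        return True
    return src_namespace == dst_namespace


# Policy applied to vm-networking only, as in the steps above.
applied = {"vm-networking"}
print(ingress_allowed("vm-networking", "vm-networking", applied))    # True
print(ingress_allowed("vm-networking-2", "vm-networking", applied))  # False
print(ingress_allowed("vm-networking-2", "vm-networking", set()))    # True
```

The last call corresponds to the state after the policy is deleted again: with no policy in the namespace, cross-namespace traffic is allowed.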

- Now remove this object since it will not be used for the rest of this demonstration.

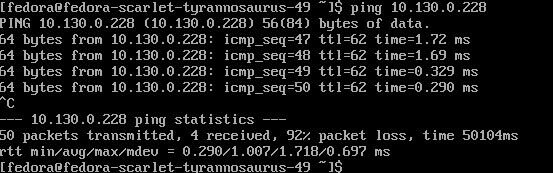

oc delete -f allow-same-namespace.yaml -n vm-networking

- If the ping was still running from earlier, it should start getting replies again after this rule is removed.

Setting up Apache Web Server

For the next part of this demo, an Apache web server will be installed on each of the two virtual machines in the vm-networking project/namespace.

- Install httpd daemon.

sudo dnf install -y httpd

- Create and edit the /var/www/html/index.html file on the server using vi or your favorite editor.

Put some text that is unique to this web server, such as "Web Server 1".

- Enable httpd and start it.

sudo systemctl start httpd

sudo systemctl enable httpd

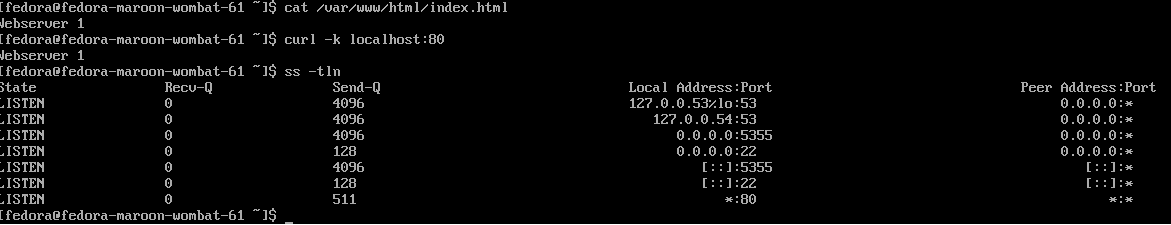

Verify contents of /var/www/html/index.html file.

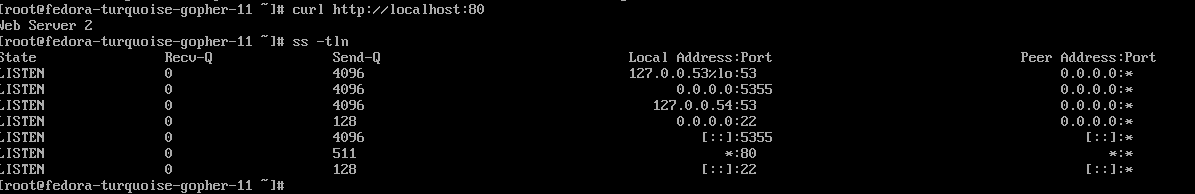

cat /var/www/html/index.htmlVerify localhost connectivity and see the webpage text

curl -k localhost:80Make sure port 80 on all interfaces is listening

ss -tlnThis will show *:80 if it is setup properly.

- Follow the same steps for the second VM/web server, but change the text to something different (such as "Web Server 2") and repeat the same verification steps.
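If you prefer to script the listening check, a plain TCP connect attempt gives a similar answer to the curl test. Here is a minimal sketch in Python, assuming python3 is available wherever you run it; the helper name `is_listening` is made up for this example.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Port 80 on the local machine, where httpd should be listening.
    print(is_listening("127.0.0.1", 80))
```

Run on the VM itself, this checks the local httpd. Pointing it at the virt-launcher pod IP from another workload would check reachability over the pod network instead.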


Label the VMs

In the next part of this demonstration, labels will be added to the two VMs that were set up in the vm-networking namespace/project. These labels will be used to attach a service and other OCP/Kubernetes networking constructs to these two VMs.

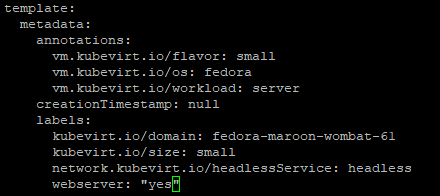

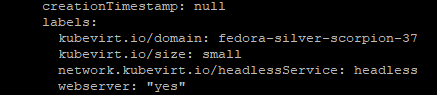

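- Edit each VM object. Go into the spec --> template --> metadata --> labels section and add the label webserver: "yes". Quote the value, because YAML reads an unquoted yes as a boolean and label values must be strings.

- Do this for each VM and then stop the VM after applying these changes.

- Now start the VMs back up. The restart is needed so that the virt-launcher pods are recreated with the new label.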

Create the Service

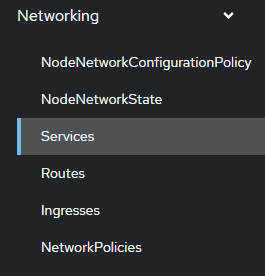

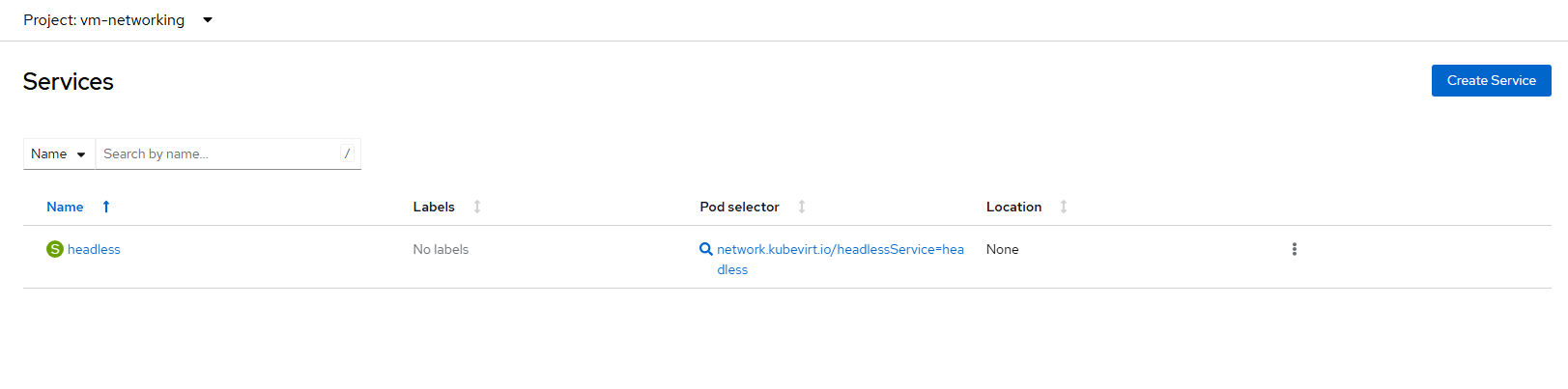

- On the web console, go to Networking --> Services.

- Ensure you are in the vm-networking project.

Click "Create Service".

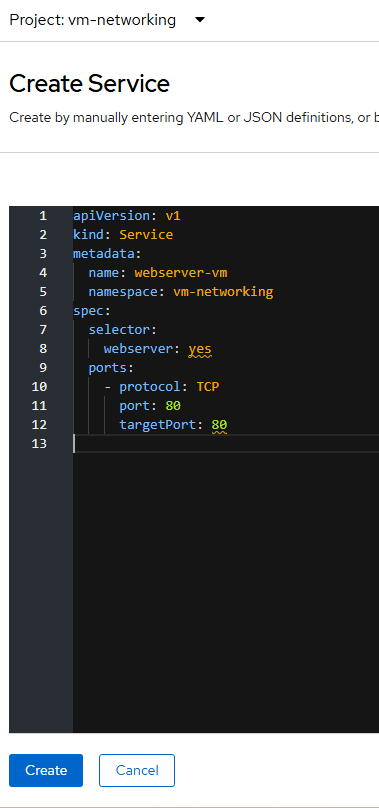

- Use the following YAML definition. This creates a service that, by default, is reachable by name from any other workload in the cluster. That holds here because no network policies are in place at the moment.

apiVersion: v1
kind: Service
metadata:
  name: webserver-vm
  namespace: vm-networking
spec:
  selector:
    webserver: "yes"
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

The name here (webserver-vm) identifies the service, so one doesn't have to remember individual IP addresses. It will be used later as a short name from within the same namespace and as part of an FQDN from other namespaces in the same cluster.

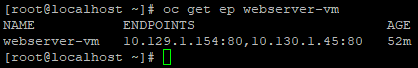

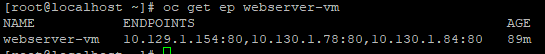

Finding End-Points

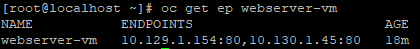

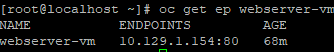

To verify that the service was created and is detecting the VM endpoints, run the following command:

oc get ep webserver-vm

You will notice the pod IPs have changed after the restart we did earlier. This does not matter: the service selects pods by the webserver: "yes" label, so it always picks up the current IPs. Right now, it is targeting port 80 on each of the VMs.
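How the service finds its endpoints can be pictured as a simple label match. The sketch below is a toy model in Python, not the actual endpoints controller; the `Pod` class, the pod names, and the IP addresses are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    running: bool = True


def select_endpoints(pods: list[Pod], selector: dict[str, str], port: int) -> list[str]:
    """Return ip:port for every running pod carrying all selector labels."""
    return [
        f"{pod.ip}:{port}"
        for pod in pods
        if pod.running and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


pods = [
    Pod("virt-launcher-vm1", "10.0.0.11", {"webserver": "yes"}),
    Pod("virt-launcher-vm2", "10.0.0.12", {"webserver": "yes"}),
    Pod("network-tools", "10.0.0.13", {"app": "network-tools"}),
]
selector = {"webserver": "yes"}

print(select_endpoints(pods, selector, 80))  # ['10.0.0.11:80', '10.0.0.12:80']

# A restart gives the pod a new IP, but the label still matches.
pods[0].ip = "10.0.0.21"
print(select_endpoints(pods, selector, 80))  # ['10.0.0.21:80', '10.0.0.12:80']
```

The point of the model is that nothing in the service refers to an IP address. Only the label matters, which is why a restarted VM is picked up again without touching the service.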

Verifying Connectivity by Hostname/FQDN

When the service was created earlier, we named it "webserver-vm". This name is important for reaching these load-balanced web servers from other workloads in the cluster.

- Let's create a new namespace/project called network-tester.

oc new-project network-tester

- Create a YAML file called network-toolbox.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: network-tools
spec:
  replicas: 1
  selector:
    matchLabels:
      app: network-tools
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: network-tools
        deploymentconfig: network-tools
    spec:
      containers:
        - name: network-tools
          image: quay.io/openshift/origin-network-tools:latest
          command:
            - sleep
            - infinity
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: Always
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler
      imagePullSecrets: []
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
  paused: false

Apply it.

oc apply -f network-toolbox.yaml -n network-tester

Let's also create one in the "vm-networking" namespace/project.

oc apply -f network-toolbox.yaml -n vm-networking

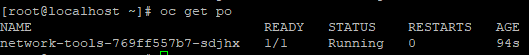

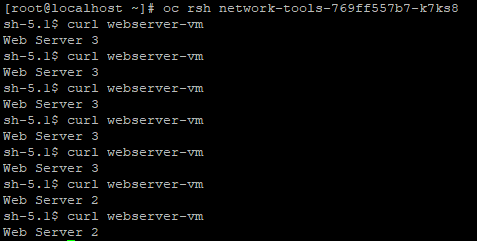

- Let's go to the RSH terminal of the network-tools container that is running in network-tester namespace.

oc project network-tester

Show the pods

oc get po

- RSH into it

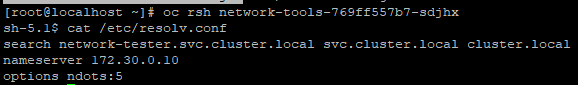

oc rsh <pod-name>

cat /etc/resolv.conf

Here, you will see that the first search domain is <current-namespace>.svc.cluster.local, which in this pod is network-tester.svc.cluster.local.
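The search list explains why a short service name only works inside the service's own namespace. The following Python sketch imitates how a resolver expands a name using that list. It is a simplification (it ignores the `ndots` option), and the search list shown is the typical Kubernetes layout, assumed here rather than copied from the screenshot.

```python
def candidate_names(name: str, search: list[str]) -> list[str]:
    """List the fully qualified names a resolver would try for a lookup."""
    if name.endswith("."):
        return [name]  # already absolute, no search domains applied
    return [f"{name}.{domain}." for domain in search] + [f"{name}."]


# Assumed search list for a pod in the network-tester namespace.
search = ["network-tester.svc.cluster.local", "svc.cluster.local", "cluster.local"]

for candidate in candidate_names("webserver-vm", search):
    print(candidate)
# webserver-vm.network-tester.svc.cluster.local.
# webserver-vm.svc.cluster.local.
# webserver-vm.cluster.local.
# webserver-vm.
```

None of the candidates is webserver-vm.vm-networking.svc.cluster.local, so from the network-tester namespace the short name does not reach the service in vm-networking.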

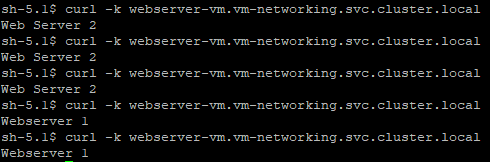

Because the service lives in a different namespace, its FQDN must be used here: webserver-vm.vm-networking.svc.cluster.local. Let's try to curl against that name.

curl -k http://webserver-vm.vm-networking.svc.cluster.local

Curl this a few times. You should see some responses coming from web server 1 (VM 1) and others from web server 2 (VM 2).

Responses arriving from both endpoints mean the load balancer is working correctly.
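Rather than eyeballing repeated curls, you can count how often each backend answers. Below is a rough sketch in Python using only the standard library. The `tally` and `fetch_body` helpers are hypothetical, and the fake fetch function at the bottom merely stands in for the real service so the example runs anywhere.

```python
from collections import Counter
from itertools import cycle
from typing import Callable
from urllib.request import urlopen


def fetch_body(url: str) -> str:
    """Fetch a URL and return the stripped response body."""
    with urlopen(url, timeout=5) as response:
        return response.read().decode().strip()


def tally(fetch: Callable[[], str], attempts: int) -> Counter:
    """Call fetch repeatedly and count how often each body is returned."""
    return Counter(fetch() for _ in range(attempts))


# Stand-in for the real service: alternates between the two page texts.
fake_pages = cycle(["Web Server 1", "Web Server 2"])
print(tally(lambda: next(fake_pages), 10))
# Counter({'Web Server 1': 5, 'Web Server 2': 5})
```

Against the real service, the lambda would become something like `lambda: fetch_body("http://webserver-vm.vm-networking.svc.cluster.local")`, run from a place where that name resolves and Python is available. Expect an uneven split there, since the cluster does not promise strict alternation between endpoints.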

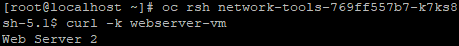

- To connect to this service from the same namespace, you can use the short-name.

Go to "vm-networking" project.

oc project vm-networking

oc get po

oc rsh <network-tools-pod>

Use the short name since you are in the same project.

curl -k http://webserver-vm

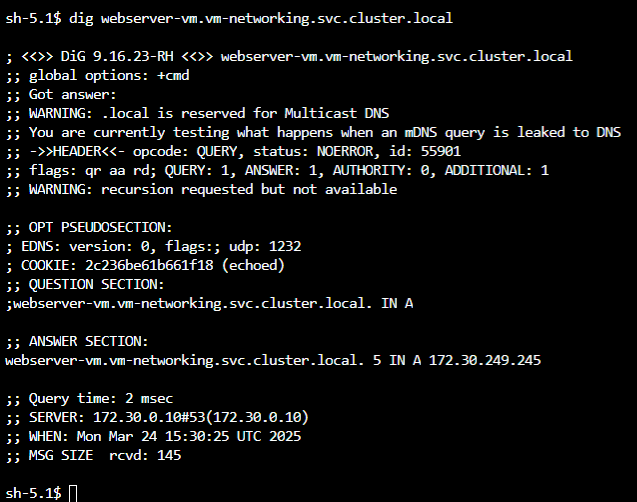

- Let's see what the IP address is for this.

dig webserver-vm.vm-networking.svc.cluster.local

The IP of this service is 172.30.249.245.

Load-Balancer Automatically Updating Endpoints

The default service type (the one we created) is ClusterIP, which assigns the service a virtual IP address from the 172.30.0.0/16 subnet by default.
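A quick way to tell a service IP from a pod IP is to test it against that subnet. Here is a small Python sketch using the standard ipaddress module; the helper name `is_cluster_ip` is made up, and the subnet is the default mentioned above, which your cluster may override.

```python
import ipaddress

# Default service network as mentioned above; adjust if your cluster differs.
SERVICE_NETWORK = ipaddress.ip_network("172.30.0.0/16")


def is_cluster_ip(address: str) -> bool:
    """Return True if the address belongs to the service network."""
    return ipaddress.ip_address(address) in SERVICE_NETWORK


print(is_cluster_ip("172.30.249.245"))  # True  (the webserver-vm service IP)
print(is_cluster_ip("10.130.0.228"))    # False (a pod IP from earlier)
```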

Let's shut down one of the VMs to see what happens.

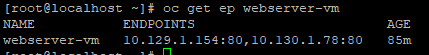

- See which endpoints show up currently.

oc get ep webserver-vm

Right now, there are two endpoints corresponding to the two VMs. You will see the pod IPs and port 80 (web server).

- Turn off one of the VMs in the "vm-networking" project by stopping it.

- Check the endpoints again.

oc get ep webserver-vm

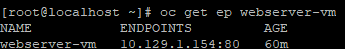

Now, HTTP requests will only go to the endpoint with pod IP 10.129.1.154. Let's see which one this is by going back into the network-tools pod.

- RSH into the network-tools pod and run the curl again against http://webserver-vm

It looks like the VM that corresponds to "Web Server 2" is the only one up now.

Adding a new VM

Let's quickly create a third VM in this namespace the same way we did earlier. This time, we want to make sure the webserver: "yes" label gets assigned to it.

- Use Fedora template as we did earlier but click "Quick create VirtualMachine".

Now, click "Create Virtual Machine".

- Notice that even after the VM is created and powers on, the endpoints do not get updated, because the new VM does not carry the webserver: "yes" label yet.

oc get ep webserver-vm

We will need to install the webserver and enable it on the third VM. Here are the same steps but abbreviated. These would be run from the VM console.

sudo dnf install httpd -y

# Add Web Server 3 text here

sudo vi /var/www/html/index.html

sudo systemctl enable httpd

sudo systemctl start httpd

- Add the label under spec --> template --> metadata --> labels --> webserver: "yes"

- Stop and start the third VM.

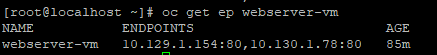

- Get the endpoints again.

oc get ep webserver-vm

Web server 3 (VM 3) will show up as an endpoint now.

Let's run the repeated curls again from the network-tools pod. The responses now switch between web server 2 and web server 3, which are the VMs currently running.

- If I start VM 1 back up, it will show in the output of the "oc get ep" as well.

oc get ep webserver-vm

Service Types

So far, this demonstration has covered ClusterIP services, which are only reachable from within the cluster.

If you want to reach these endpoints from outside the cluster, there are a few options. You can use the following types of Services instead of ClusterIP.

NodePort: Opens a specific port on all of the nodes and forwards requests arriving on it to the web servers.

LoadBalancer: This allows direct integration with a cloud provider's load balancer (e.g., AWS Elastic Load Balancing), operator-based integration with other vendors' load balancers (e.g., F5), or MetalLB, which announces a service IP through layer 2 (ARP) or BGP route announcements.

I won't cover NodePort here. We will continue with MetalLB.

MetalLB

A simple example will be shown here which uses layer 2 mode of MetalLB. BGP is available as well but won't be covered here.

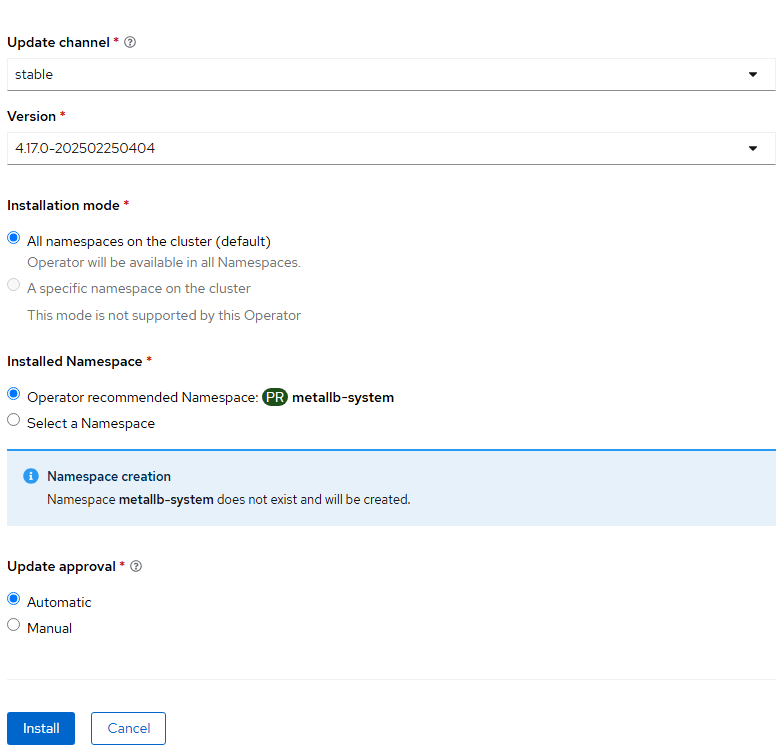

- Install the MetalLB operator.

Go to Operators --> OperatorHub and search for "MetalLB"

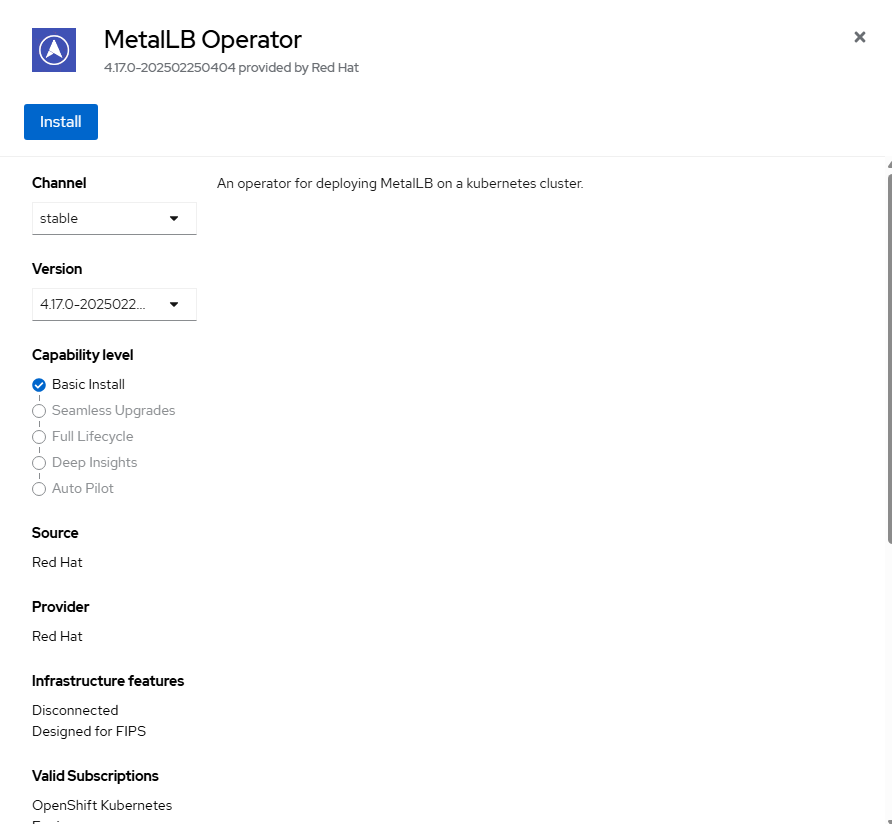

- Accept the default options and click "Install".

- Accept the defaults and click "Install".

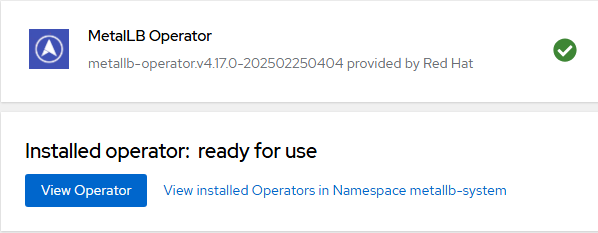

- Click "View Operator".

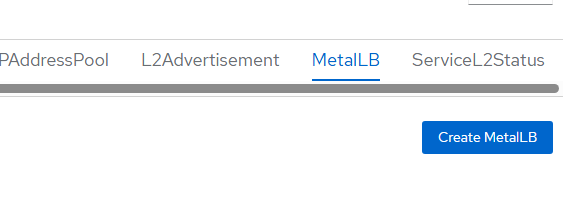

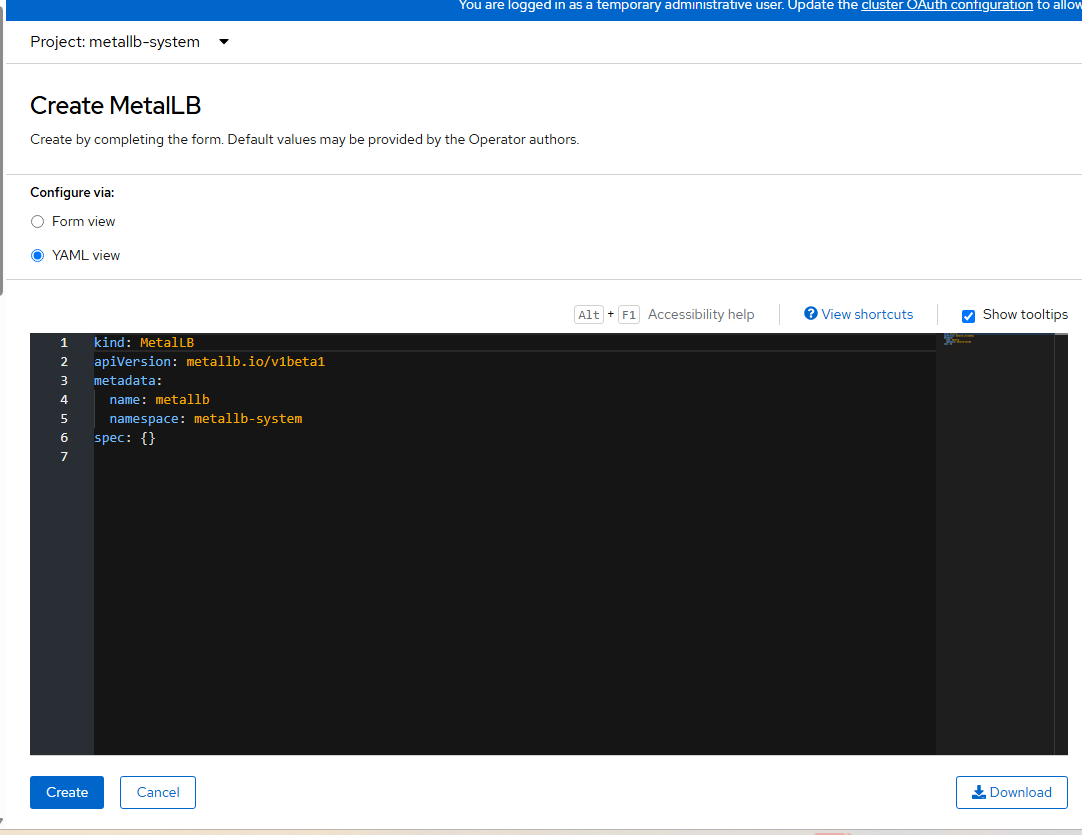

- On MetalLB tab, click "Create MetalLB".

- Accept the defaults for now and click "Create".

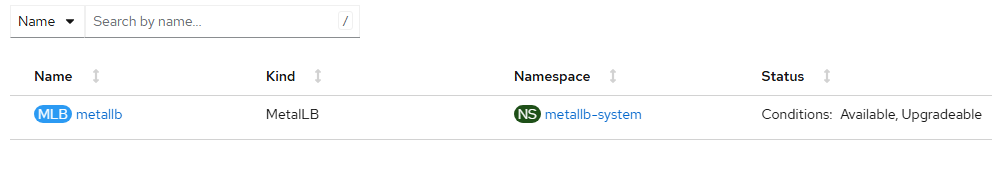

- Wait for the status to show "Available".

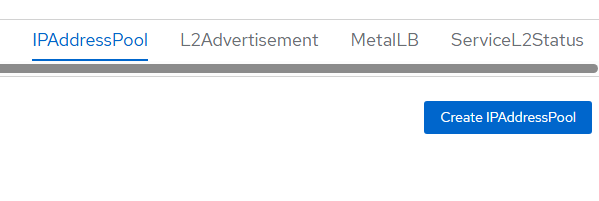

- Click "IPAddressPool" tab and "Create IPAddressPool".

- In my home lab, the machine network is 192.168.4.0/24, so I will pick a few free IPs from it to assign.

kind: IPAddressPool
apiVersion: metallb.io/v1beta1
metadata:
  name: ip-addresspool-sample1
  namespace: metallb-system
  labels:
    webserver: "yes"
spec:
  addresses:
    - 192.168.4.100/32
    - 192.168.4.101/32

Click "Create".
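To see which addresses this pool can hand out, the entries can be expanded with Python's ipaddress module. This only illustrates the arithmetic: MetalLB's real allocator is more involved and does not necessarily pick the first free address, and the helper names `pool_addresses` and `allocate` are made up.

```python
import ipaddress
from typing import Optional

MACHINE_NETWORK = ipaddress.ip_network("192.168.4.0/24")
POOL_ENTRIES = ["192.168.4.100/32", "192.168.4.101/32"]


def pool_addresses(entries: list[str]) -> list[ipaddress.IPv4Address]:
    """Expand the CIDR entries of a pool into individual addresses."""
    addresses = []
    for entry in entries:
        addresses.extend(ipaddress.ip_network(entry))
    return addresses


def allocate(entries: list[str], in_use: set[str]) -> Optional[str]:
    """Return the first pool address not yet in use, or None if exhausted."""
    for address in pool_addresses(entries):
        if str(address) not in in_use:
            return str(address)
    return None


pool = pool_addresses(POOL_ENTRIES)
print([str(a) for a in pool])                     # ['192.168.4.100', '192.168.4.101']
print(all(a in MACHINE_NETWORK for a in pool))    # True
print(allocate(POOL_ENTRIES, {"192.168.4.100"}))  # 192.168.4.101
```

With two /32 entries, the pool holds exactly two addresses, so at most two LoadBalancer services can receive an IP from it at the same time.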

- Create layer 2 announcements for ARP.

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2advertisement-label
  namespace: metallb-system
spec:
  ipAddressPoolSelectors:
    - matchExpressions:
        - key: webserver
          operator: In
          values:
            - "yes"

- Expose the web server VMs through this pool with a new LoadBalancer service. Create a file called metallb-service.yaml.

apiVersion: v1

kind: Service

metadata:

name: webserver-metallb-vm

annotations:

metallb.io/address-pool: ip-addresspool-sample1

spec:

selector:

webserver: "yes"

ports:

- port: 80

targetPort: 80

protocol: TCP

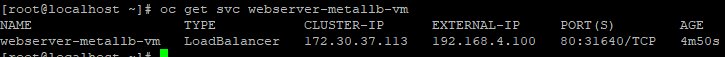

type: LoadBalanceroc apply -f metallb-service.yaml -n vm-networking12. List the service.

oc get svc webserver-metallb-vm

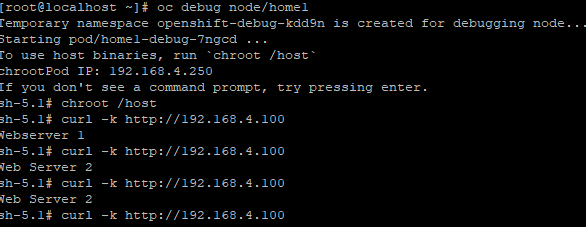

- Now, you should be able to curl this using the external IP, either from one of your OCP nodes or from any network where this IP is reachable.

In this example, I use one of my OCP nodes.

oc debug node/<nodename>

chroot /host

curl -k http://<metallb-ip>

Openshift Route

I know this article is long, but stick with me for one more topic. OpenShift adds another layer on top of the Kubernetes Ingress construct, the Route, which uses a layer 7 load balancer. It uses the Host header of an incoming HTTP request to automatically direct a web browser to the correct endpoint.
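Host-based routing boils down to a lookup keyed on the Host header. Here is a toy Python model of that idea; the route table, the hostnames, and the function name are illustrative and not taken from a real router configuration.

```python
from typing import Optional

# Hypothetical route table: request hostname -> backing service.
ROUTES = {
    "webserver-vm-vm-networking.apps.example.com": "vm-networking/webserver-vm",
    "other-app-demo.apps.example.com": "demo/other-app",
}


def pick_backend(headers: dict[str, str]) -> Optional[str]:
    """Choose a backend service from the Host header of an HTTP request."""
    host = headers.get("Host", "").split(":")[0].lower()  # drop any :port suffix
    return ROUTES.get(host)


print(pick_backend({"Host": "webserver-vm-vm-networking.apps.example.com"}))
# vm-networking/webserver-vm
print(pick_backend({"Host": "unknown.apps.example.com"}))
# None
```

Because the decision is made on the hostname, many applications can share the same router IP and port, which is the main difference from the layer 2 MetalLB setup above.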

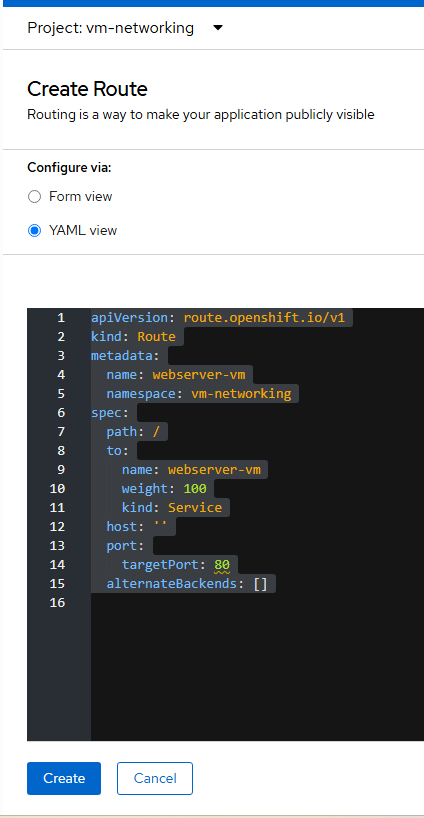

In this example, I will just do something simple and attach the route to the existing "webserver-vm" service as shown below.

- Go to Networking --> Routes in the console. Ensure you are in the "vm-networking" project. Click "Create Route".

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: webserver-vm
  namespace: vm-networking
spec:
  path: /
  to:
    name: webserver-vm
    weight: 100
    kind: Service
  host: ''
  port:
    targetPort: 80
  alternateBackends: []

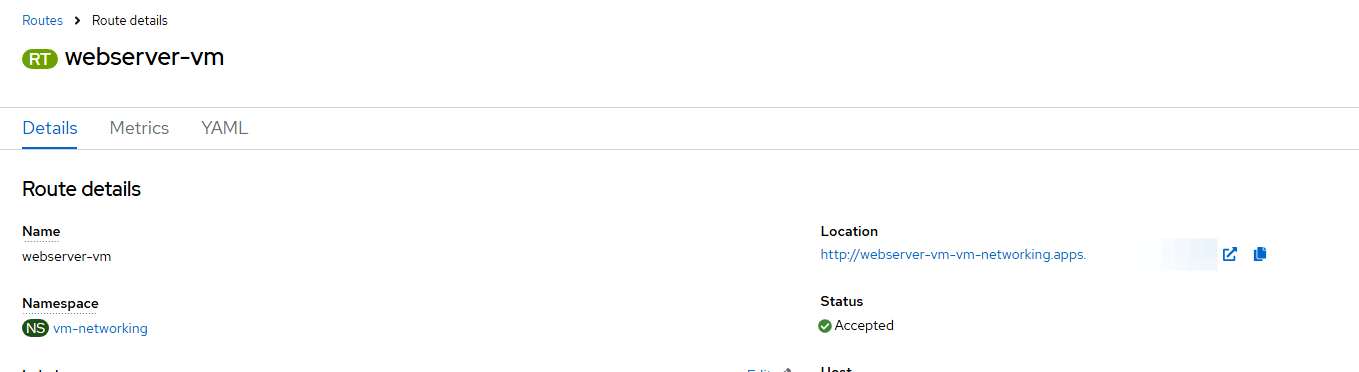

This will (by default) create a URL on the OpenShift apps domain, in the form <route-name>-<project-name>.apps.<cluster-fqdn>.

Click "Create".

The resulting page will show a location that can be opened in your web browser, assuming you can reach the network that your OpenShift cluster is on over port 80.

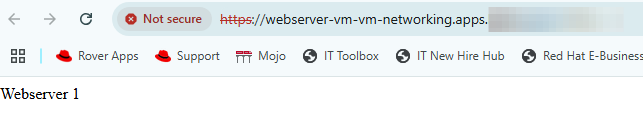

- Go to http://webserver-vm-vm-networking.apps.<fqdn> in your web-browser.

I am currently hitting the first web server (VM 1).
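The default hostname pattern is easy to reproduce if you want to predict a route's URL before creating it. Here is a tiny Python sketch; the function name is made up, and "ocp.example.com" is a placeholder for your own cluster domain.

```python
def default_route_host(route: str, project: str, cluster_fqdn: str) -> str:
    """Build <route-name>-<project-name>.apps.<cluster-fqdn>."""
    return f"{route}-{project}.apps.{cluster_fqdn}"


# "ocp.example.com" is a placeholder cluster domain.
print(default_route_host("webserver-vm", "vm-networking", "ocp.example.com"))
# webserver-vm-vm-networking.apps.ocp.example.com
```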

I know this article was long, but I wanted to cover enough without covering too much. There is a whole lot more I could get into, but that may be for a future article.