Quick-Start and Quick-Learn of Openshift Virtualization

Since the previous article was a lengthy introduction to Openshift Virtualization, I wanted to do something fun and productive for this one. Later articles will go deeper into more advanced topics, but for now let's do some quick learning on what are, in my opinion, the most popular topics.

Creating a Basic VM Using Pre-Populated Templates

To make things exciting, we will first create a VM off one of the templates that is provided.

If this is a new install and you have not created a VM yet, a quick-start page will appear that provides the option to "Create Virtual Machine". There are also some other quick-start guides from Red Hat on these processes. I will do some of the same things that are mentioned in those guides but will add some other context that I feel is important.

Go back to the Openshift Web Console and select Virtualization --> Overview tab.

- Click "Create VirtualMachine".

On this screen, you will see two tabs: "InstanceTypes", which is the default page, and "Template Catalog".

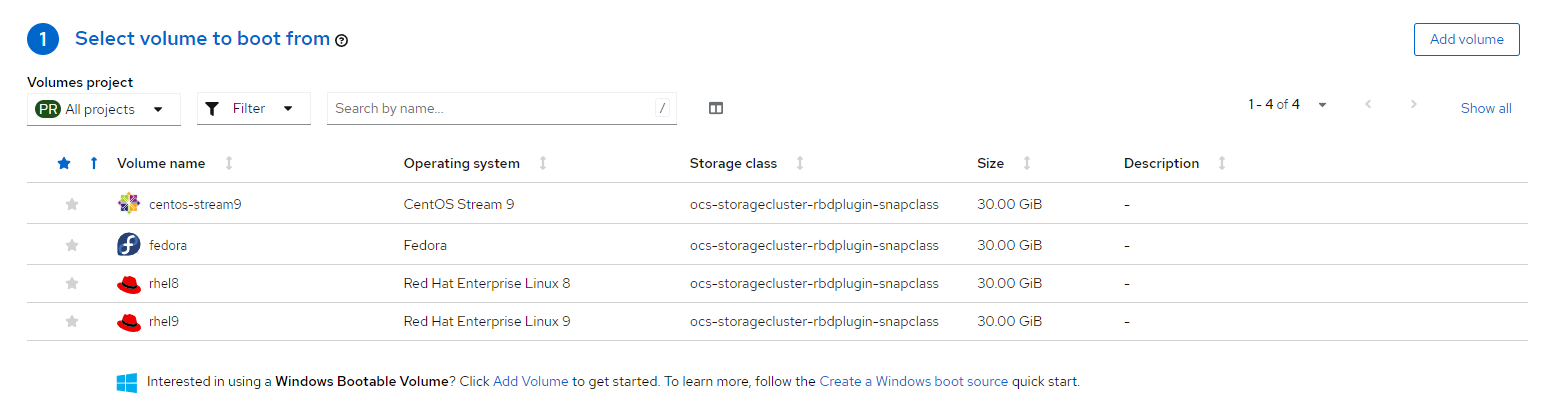

On the "InstanceTypes" page, the first thing to do is select a volume to boot from. There are four volumes that are available immediately to boot from. These are based on snapshots that were taken of a base install of these operating systems (I will show you how to create volumes to boot from later). The choices are currently:

centos-stream9

fedora

rhel8

rhel9

- Let's create a VM based off of Fedora. Click on the "Fedora" icon.

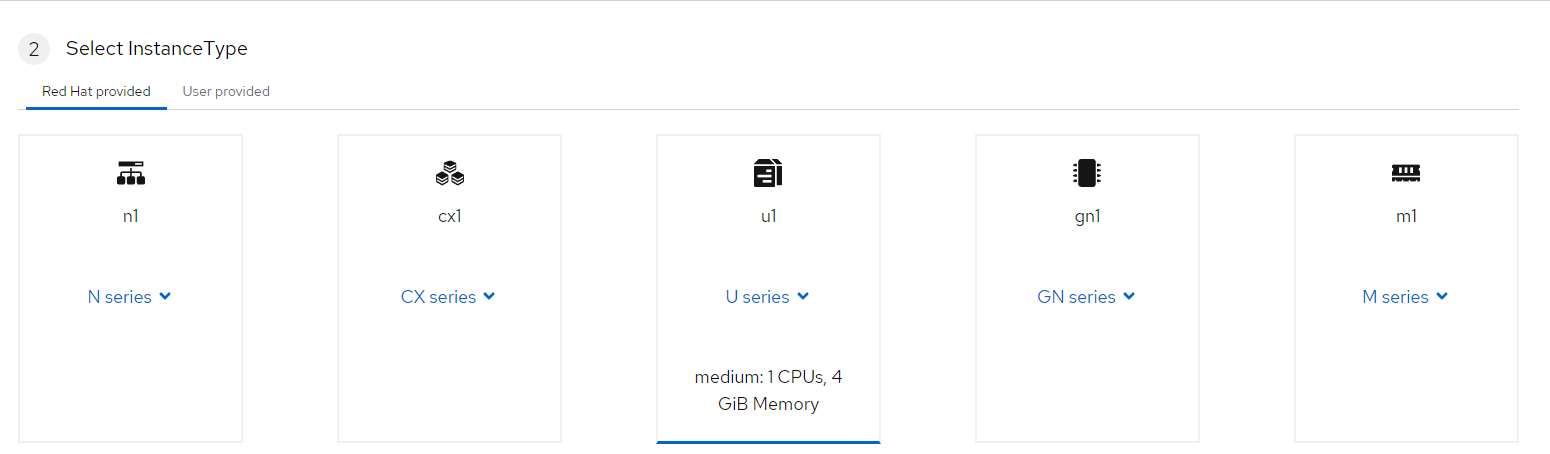

- By default, the instance type is set to the u1 series, which is for universal workloads. This means that these VMs share CPU time-slices equally with other VMs (no special priorities). The medium size means 1 CPU and 4 GiB of memory.

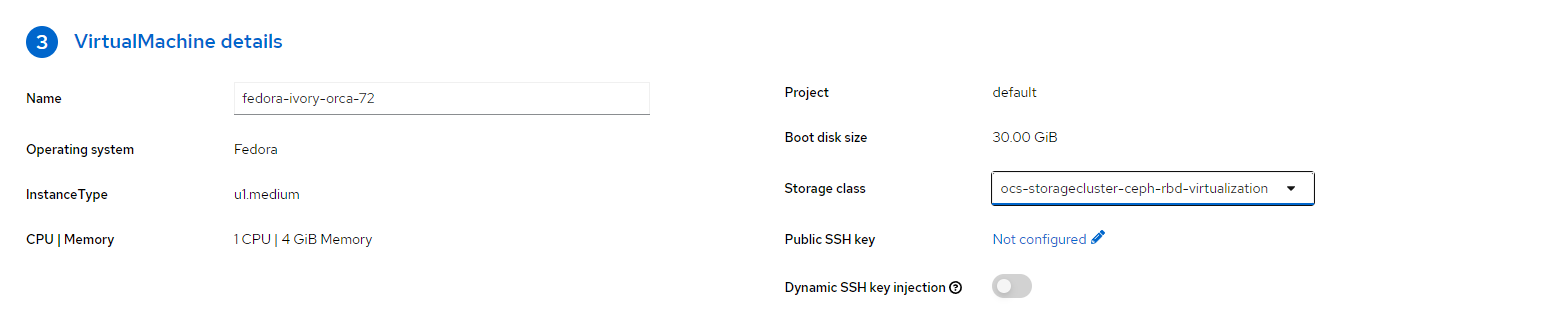

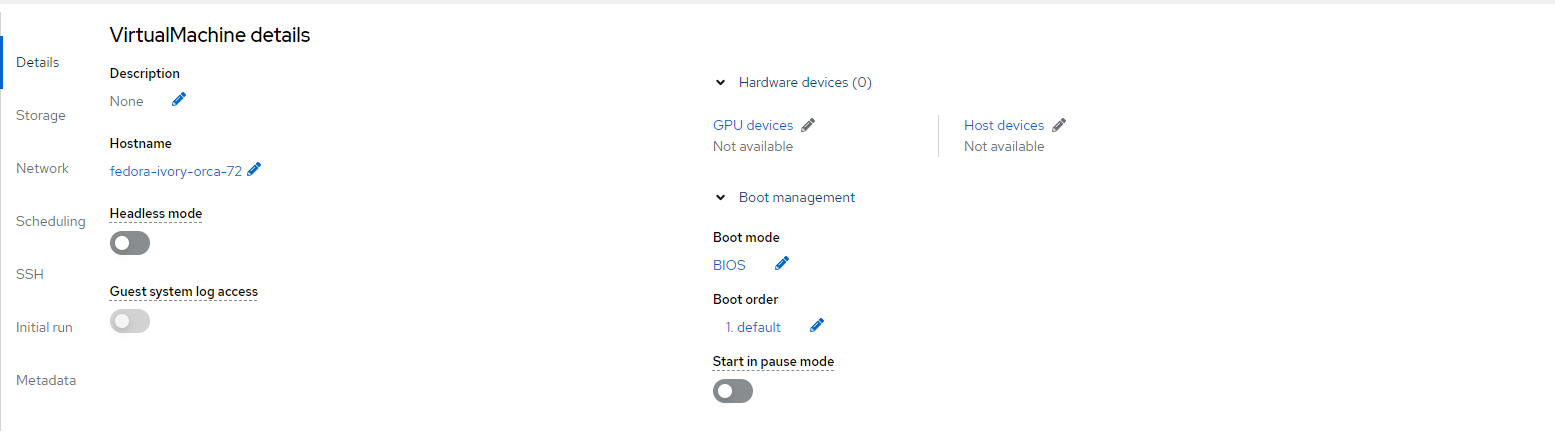

- The last step for this demonstration is setting any special virtual machine details. The defaults are sufficient, but I want to explain this a little more.

This sample creates the VM in the default project/namespace for now. The boot disk size is 30 GiB but is expandable (more on this later). The default storage class that is set up when Openshift Virtualization is installed is called ocs-storagecluster-ceph-rbd-virtualization and provides RWO (ReadWriteOnce) and RWX (ReadWriteMany) block devices.

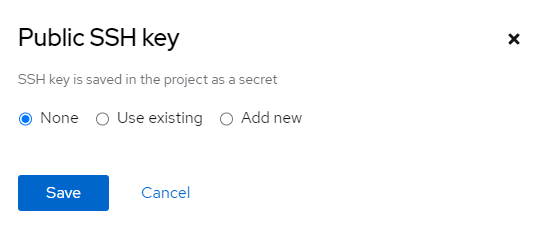

I am not going to set a public SSH key (used to SSH into the VM with public-key authentication), but here is the menu that appears if you decide to do this.

- No customizations will be done for this demonstration, but a high-level view of this screen is helpful. This will be built upon in a later post.

Here is a short description of the options that are not as self-explanatory:

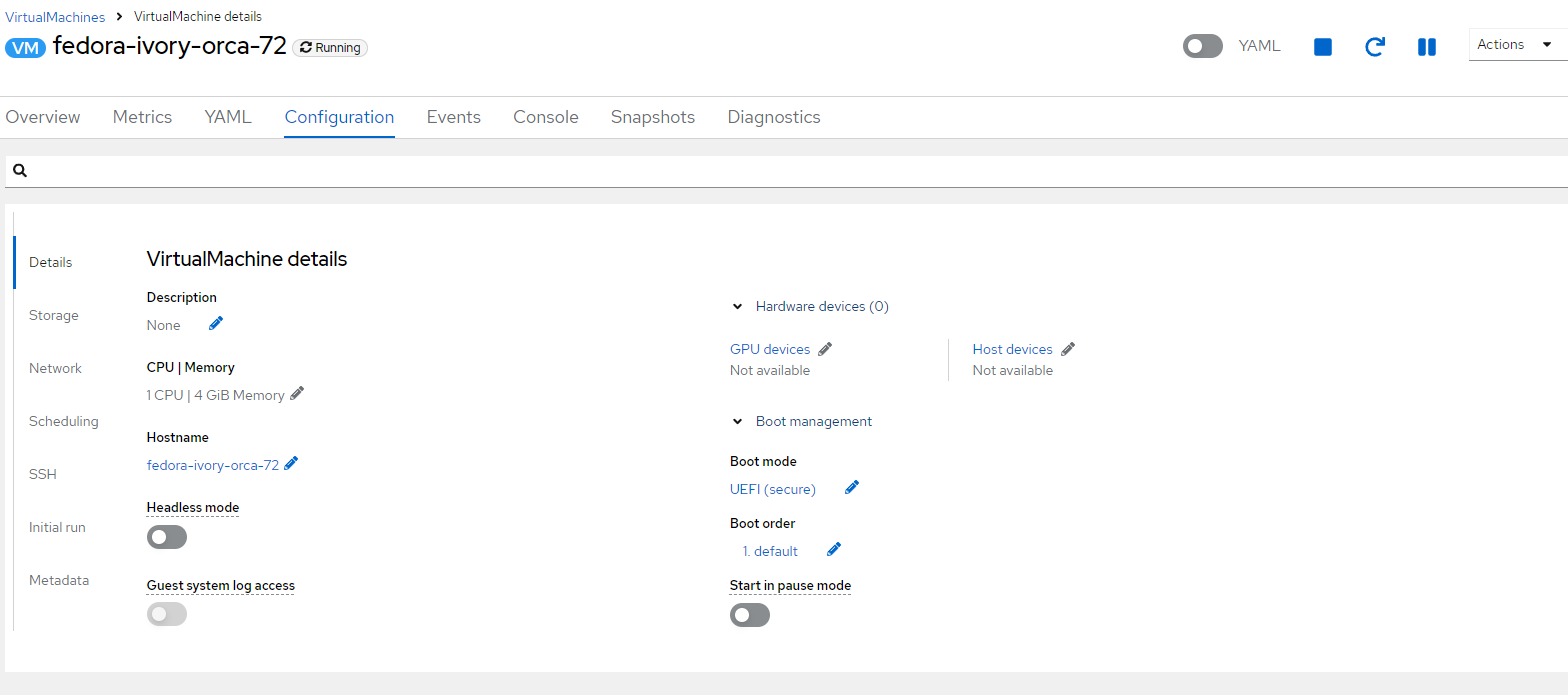

Headless Mode - A way to access the VM without attaching a default graphics device. This is useful for non-desktop systems when VNC access is not required.

Guest System Log Access - An easier way to see logs from the VM. This visibility is integrated with the Openshift Web Console.

Hardware Devices - Whether any hardware devices from the node will be passed through to the VM. This is commonly done with GPUs.

Boot Mode - BIOS, UEFI, or secure UEFI are available.

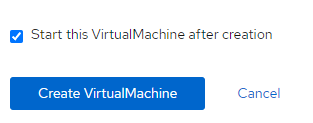

- Let's get to creating the virtual machine. Click "Create VirtualMachine" and leave the option set to start the virtual machine after creation.

Exploring the VirtualMachine Details Screen

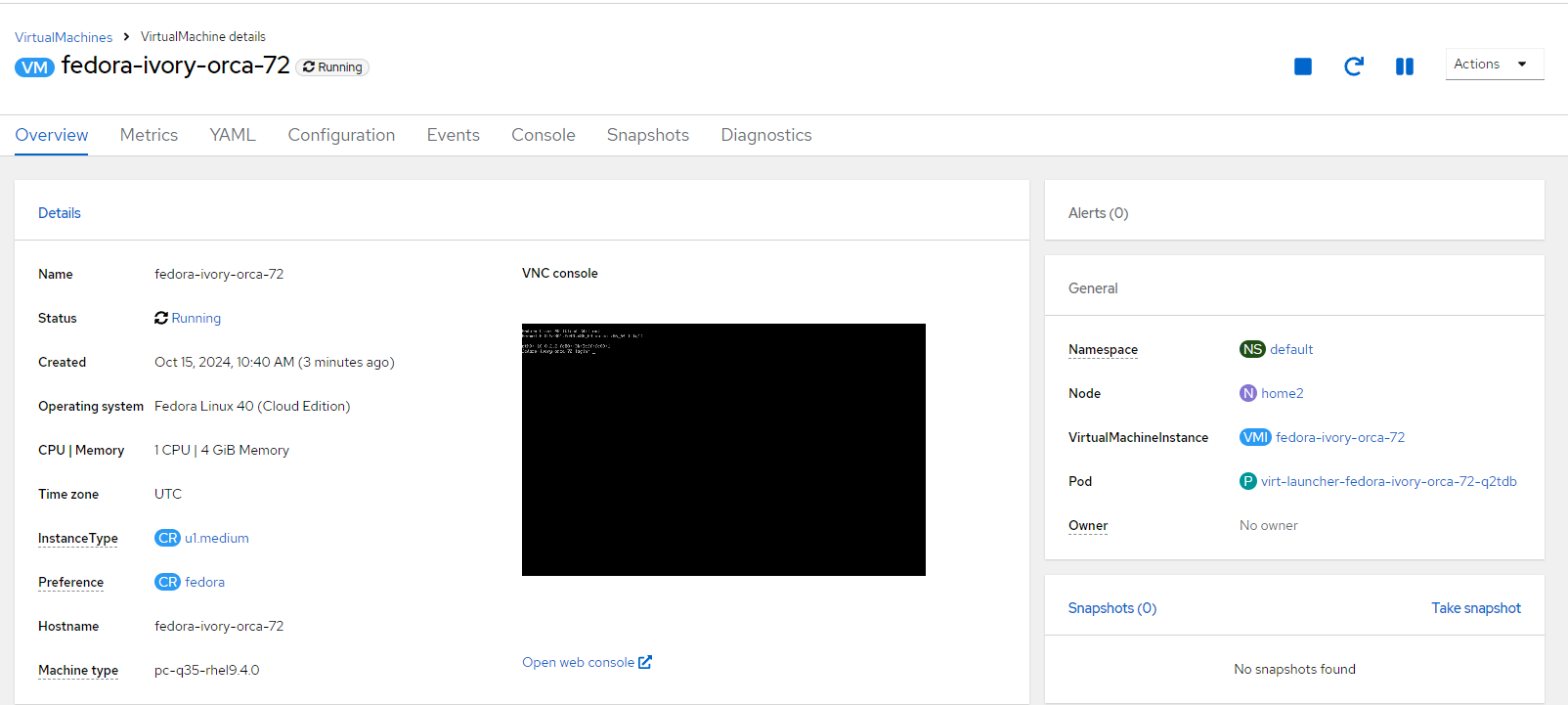

Within a few minutes of creating this virtual machine, its status should change to "Running". Below are some screenshots and explanations of the items on the screen that appears immediately after creating the VM. This is the VirtualMachine details screen. It can be accessed later by going to the Virtualization --> VirtualMachines tab on the Openshift Web Console.

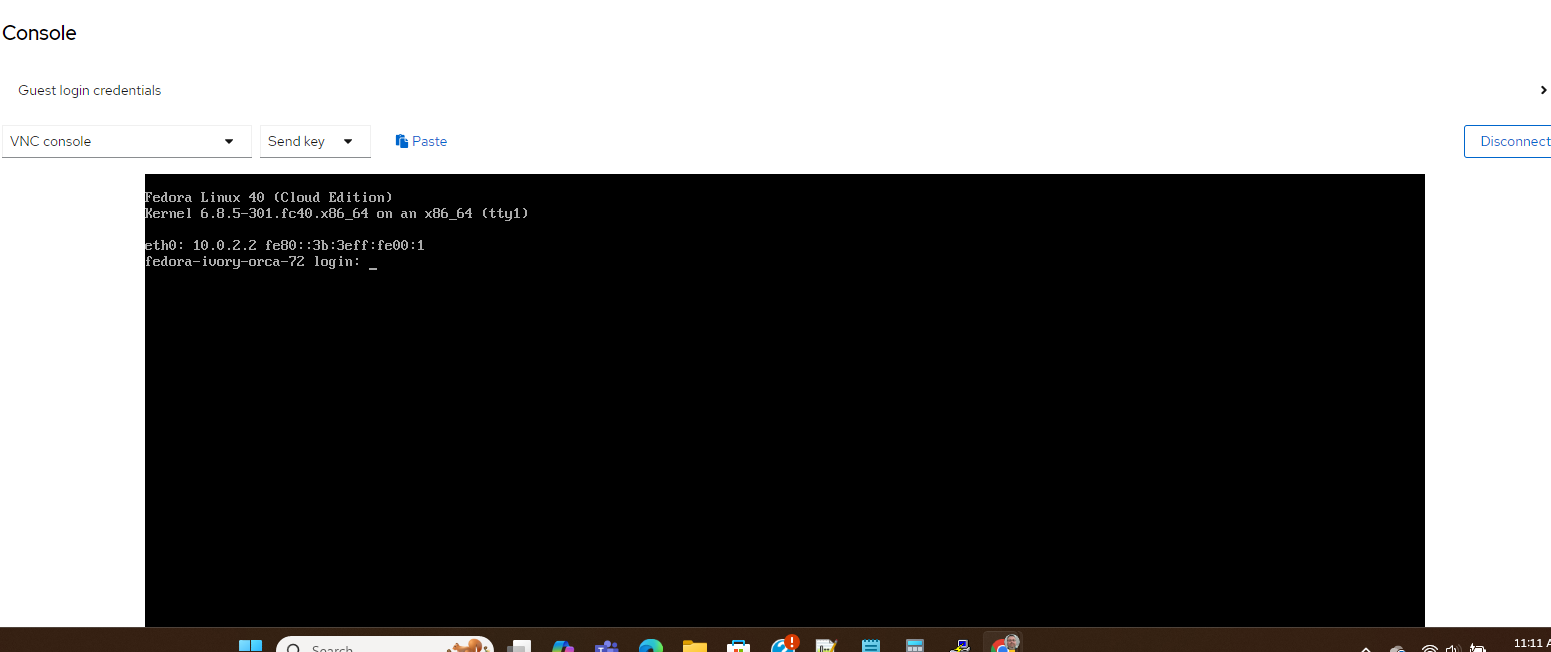

Once the VM starts up, a VNC console will appear (very small) with a login prompt.

Most of the information shown on this screen is self-explanatory, but one thing I want to mention is the virt-launcher pod that appears on the right side of the screen under "General".

I will provide a very brief and simple explanation of this for now. The virt-launcher pod ties the virtualization layer (libvirt) to Kubernetes constructs. Since Kubernetes/OCP is a container orchestration platform, there needs to be an interface between Kubernetes and libvirt. The virt-launcher pod is what ties the container-orchestration system and the hypervisor together. One important aspect of the virt-launcher pod is that it provides an easy means to troubleshoot startup issues from within Kubernetes. It is typically the first object to look at when troubleshooting is needed.
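Since the virt-launcher pod is usually the first place to look, it helps to be able to find it quickly from the command line. The sketch below is a small shell helper that filters the output of `oc get pods -o name` down to the launcher pod for a given VM. It assumes the pod follows the `virt-launcher-` + VM name + random suffix naming pattern, and the function name `launcher_pod_for_vm` is made up for this example.

```shell
# Hypothetical helper: print the virt-launcher pod(s) for a VM.
# Reads `oc get pods -o name` output on stdin; $1 is the VM name.
# Assumes pods are named virt-launcher-<vm name>-<random suffix>.
launcher_pod_for_vm() {
  vm_name="$1"
  # Keep only matching pod names and strip the leading "pod/" prefix.
  grep -E "^pod/virt-launcher-${vm_name}-[a-z0-9]+$" | sed 's|^pod/||'
}
```

Piping `oc get pods -n default -o name` into this function should print the launcher pod name, which can then be handed to `oc describe pod` or `oc logs` to look at startup problems.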

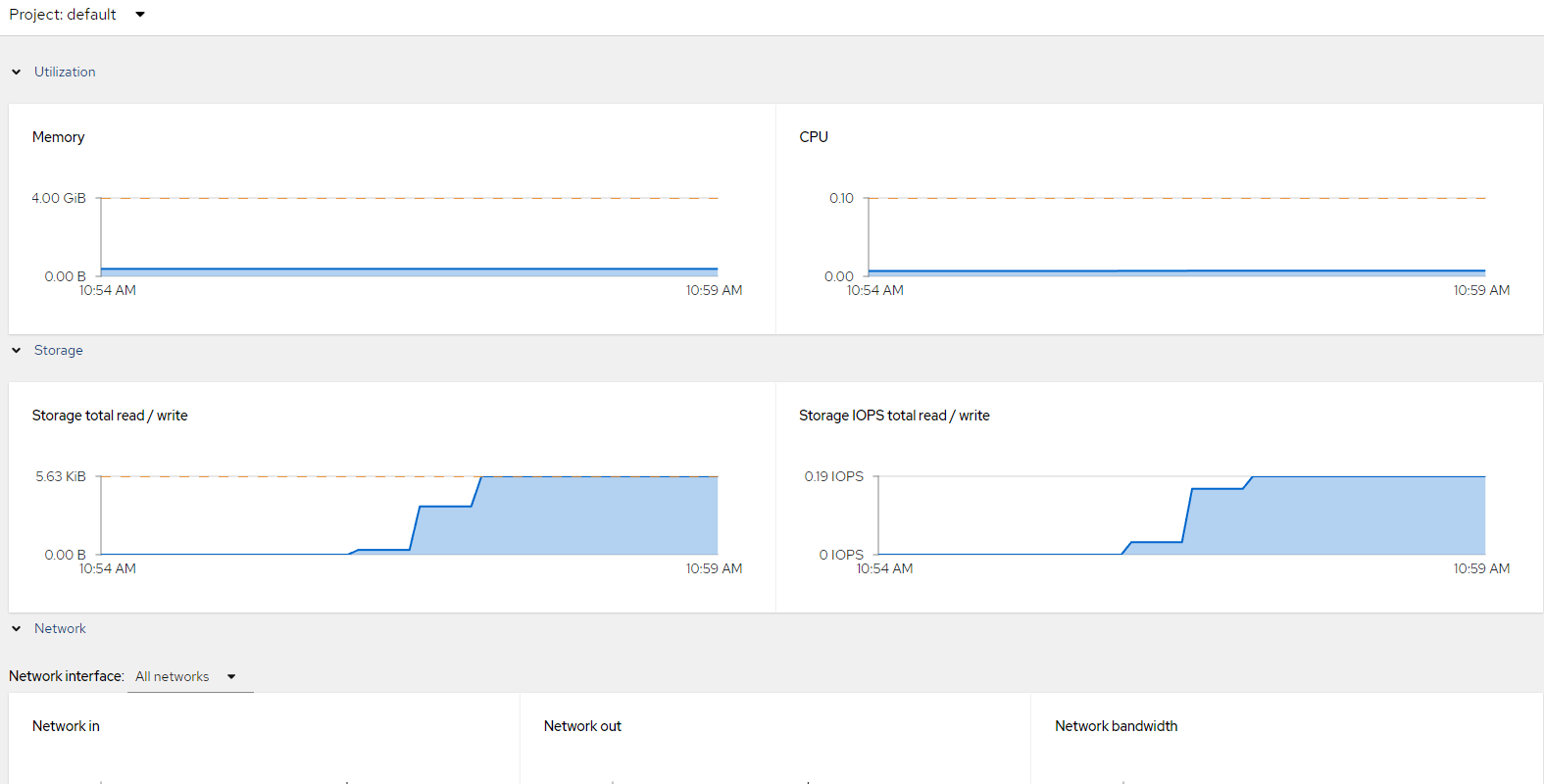

The Metrics tab shows memory, cpu, networking, and migration statistics.

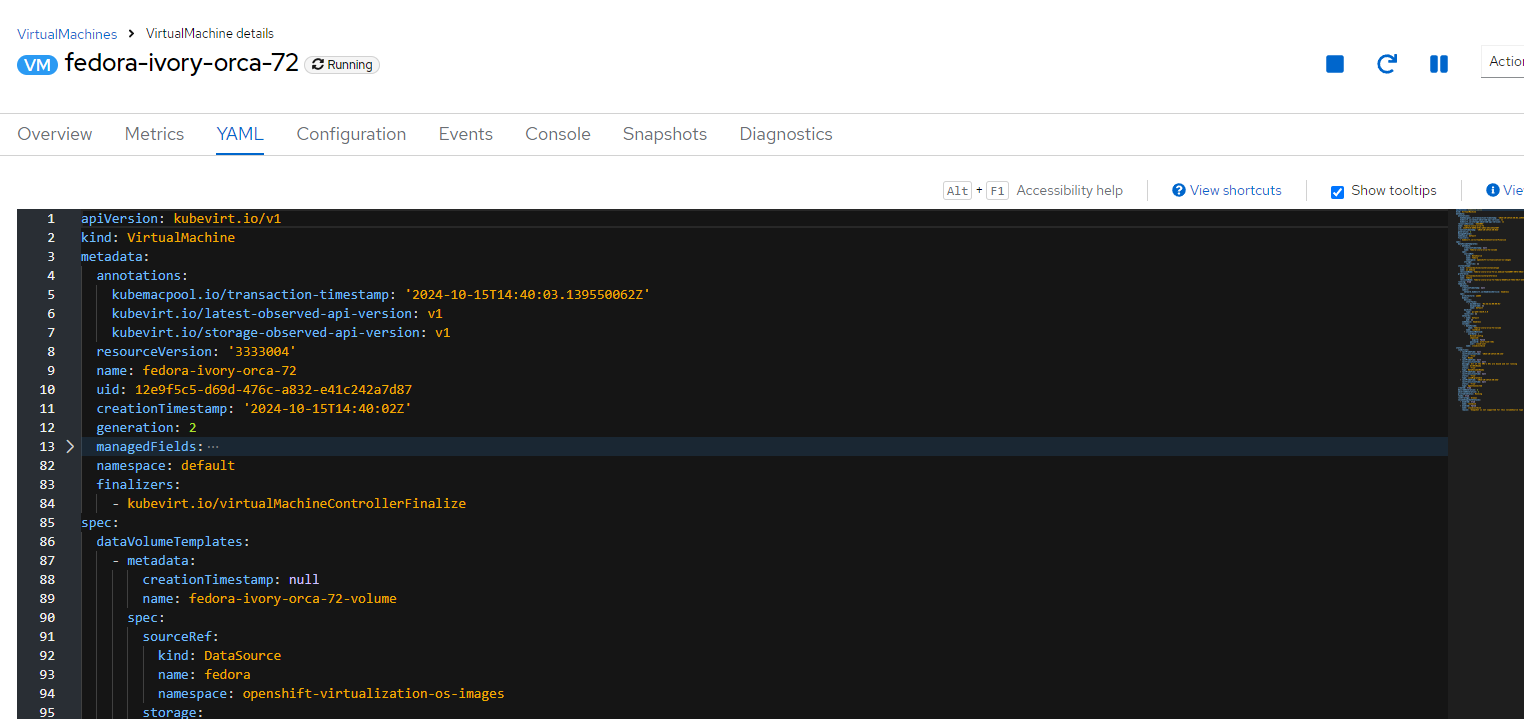

The YAML tab will show the definition of the VirtualMachine CR. More on what is contained in these CRs will be shown in the next section.

The Configuration tab is the same screen that was used earlier when the VM was created.

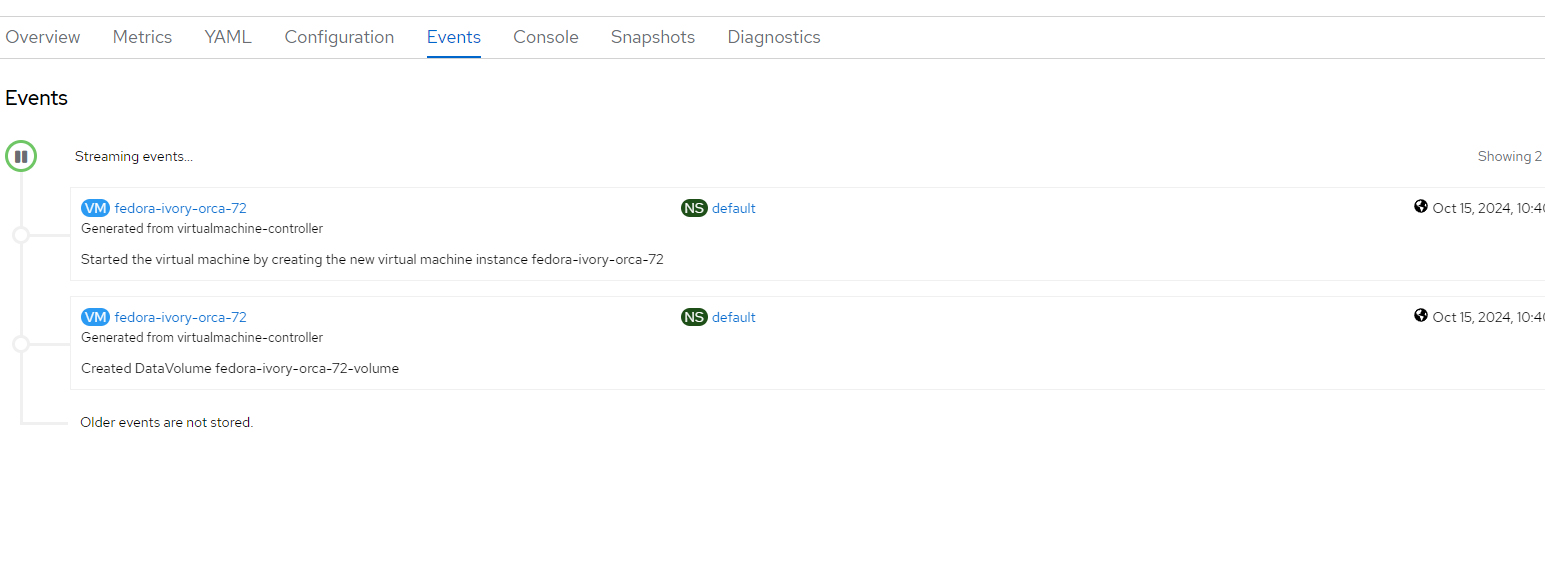

The Events tab for this creation shows two things that happened. The DataVolume object was created and the VM was started.

Just as the virt-launcher pod ties Kubernetes constructs to the VM layer, the DataVolume does something similar for storage: it ties the Kubernetes storage constructs together with the virtualization storage resources. Again, this is a simple explanation for now.

The Console tab provides access to the console of the VM, either through VNC (similar to connecting a monitor to a physical machine) or through a serial console, which is a way to manage the VM out-of-band. In physical environments, the serial method would be used if networking did not come up on the machine and you needed a backdoor to access it.

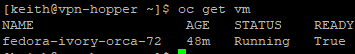

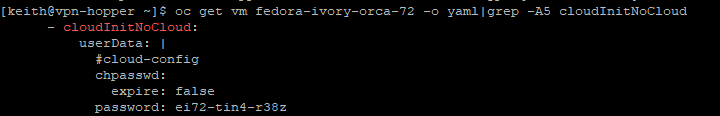

To get the username and password needed to log in to the console of the VM, look at the VM object. This VM is located in the default project/namespace in this example.

    oc get vm

    oc get vm <name from above> -o yaml | grep -A6 cloudInitNoCloud

The username is cloud-user and the password is ei72-tin4-r38z based on this example.
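If you would rather not count lines for grep, a small filter can pull out just the two values. This is only a sketch: it assumes the cloud-init user data contains plain `user:` and `password:` keys (as it does in this example), and the function name `cloud_init_credentials` is made up for illustration.

```shell
# Hypothetical helper: print "<user> <password>" from VM YAML on stdin.
# Assumes the cloud-init userData holds simple "user:" and "password:" keys.
cloud_init_credentials() {
  awk '
    $1 == "password:" { pw = $2 }     # remember the password value
    $1 == "user:"     { user = $2 }   # remember the user value
    END {
      if (user != "" && pw != "") print user, pw
      else exit 1                     # fail if either key is missing
    }
  '
}
```

It would be used by piping the output of `oc get vm` with `-o yaml` for your VM into the function. Keep in mind that the password sits in plain text in the VM definition, so anyone who can read the VM object can read it too.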

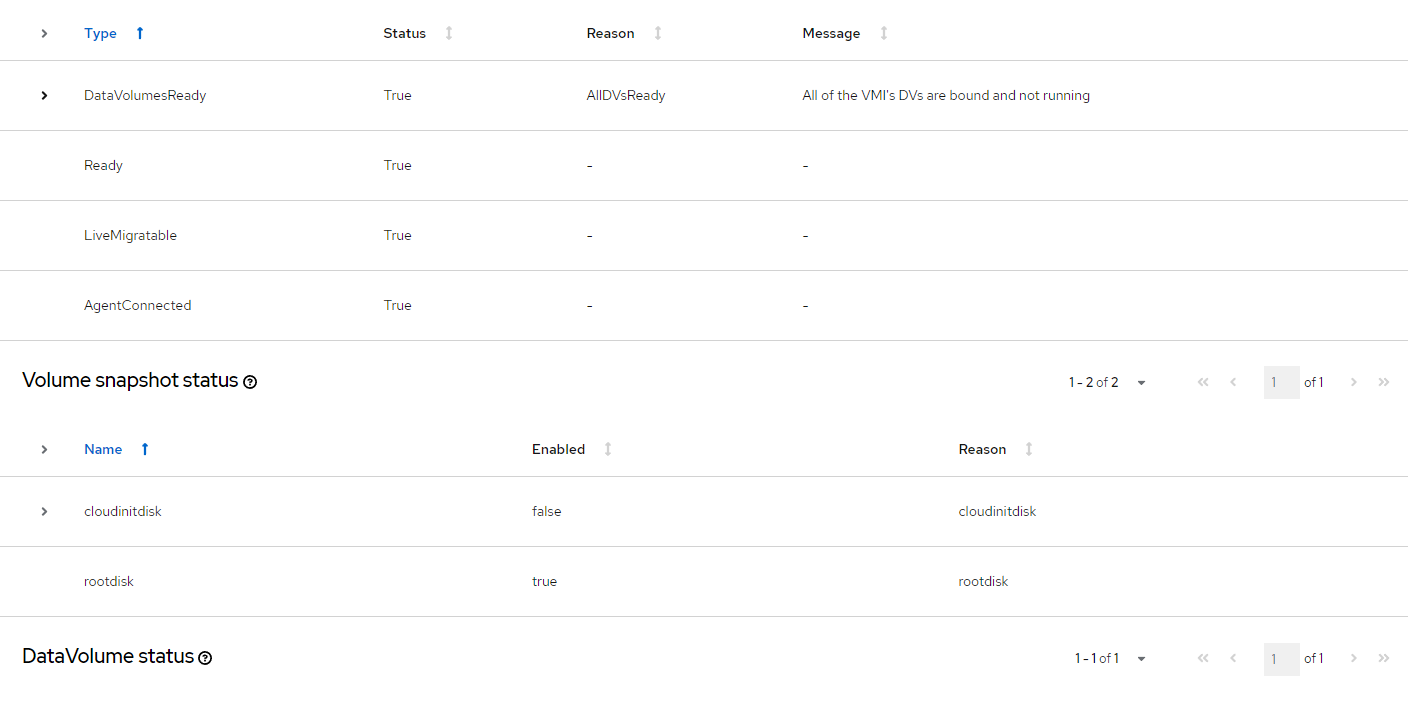

The Snapshots tab allows you to take a snapshot of the rootdisk. You are unable to take a snapshot of the cloudinitdisk. Just for clarity, the cloudinitdisk only carries the cloud-init settings (such as the username and password) that were applied when this VM was first built. The root disk itself was cloned from the Fedora boot volume we selected earlier.

You can take a snapshot and use it later to build another Openshift Virtualization based VM. This task will be demonstrated in a follow-on article.
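The VM object records which disks can be snapshotted in its status, under volumeSnapshotStatuses. As a rough sketch, the filter below lists only the volumes marked as enabled. It assumes the YAML layout produced by `oc get vm -o yaml`, where `enabled` comes before `name` in each entry and the section is the last one in the status; the function name `snapshotable_volumes` is made up for this example.

```shell
# Hypothetical helper: list volumes that support snapshots.
# Reads VM YAML on stdin and looks only at status.volumeSnapshotStatuses.
# Assumes "enabled:" appears before "name:" in each list entry.
snapshotable_volumes() {
  awk '
    $1 == "volumeSnapshotStatuses:" { in_section = 1; next }
    in_section && $1 == "-" && $2 == "enabled:" { enabled = ($3 == "true") }
    in_section && $1 == "name:" && enabled { print $2 }
  '
}
```

For the Fedora VM in this article, this should print only rootdisk, which matches what the Snapshots tab allows.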

The Diagnostics tab provides a means to verify some status information on the VM and also a way to view system logs of the VM if this feature is enabled.

What Happens Inside of Openshift

After creating this Fedora VM, a bunch of CRs (custom resources), pods, and other objects are created to make this happen. Let's look through these objects and attempt to understand (at a high-level) the flow that happens to enable the creation of this VM.

The base operating system for these templates has a few objects. For example:

datasources - In the openshift-virtualization-os-images project/namespace, these resources exist for the CentOS, Fedora, RHEL, and Windows operating systems. One key difference with the Windows VMs is that a boot source is not available. I will be writing some articles shortly that cover how to create custom boot sources.

To see how the VM creation process works, let's look at some of the objects that are created. This is a very simplistic view but gets us started. The process starts with some CDI (Containerized Data Importer) operations and concludes with the virt-launcher pod and vm/vmi resources being created.

- A datasources object exists for fedora (and some other operating systems) when Openshift Virtualization is first installed.

    oc get datasources fedora -n openshift-virtualization-os-images -o yaml

    apiVersion: cdi.kubevirt.io/v1beta1
    kind: DataSource
    metadata:
      annotations:
        operator-sdk/primary-resource: openshift-cnv/ssp-kubevirt-hyperconverged
        operator-sdk/primary-resource-type: SSP.ssp.kubevirt.io
      creationTimestamp: "2024-10-14T15:17:44Z"
      generation: 5
      labels:
        app.kubernetes.io/component: storage
        app.kubernetes.io/managed-by: cdi-controller
        app.kubernetes.io/part-of: hyperconverged-cluster
        app.kubernetes.io/version: 4.16.3
        cdi.kubevirt.io/dataImportCron: fedora-image-cron
        instancetype.kubevirt.io/default-instancetype: u1.medium
        instancetype.kubevirt.io/default-preference: fedora
        kubevirt.io/dynamic-credentials-support: "true"
      name: fedora
      namespace: openshift-virtualization-os-images
      resourceVersion: "1443315"
      uid: ef0c8397-ad88-46c8-b549-863e4c77daac
    spec:
      source:
        snapshot:
          name: fedora-21a6f3e628cd
          namespace: openshift-virtualization-os-images
    status:
      conditions:
      - lastHeartbeatTime: "2024-10-14T15:21:06Z"
        lastTransitionTime: "2024-10-14T15:21:06Z"
        message: DataSource is ready to be consumed
        reason: Ready
        status: "True"
        type: Ready
      source:
        snapshot:
          name: fedora-21a6f3e628cd
          namespace: openshift-virtualization-os-images

- This Fedora datasource is based on a snapshot:

    oc get volumesnapshots.snapshot.storage.k8s.io -n openshift-virtualization-os-images fedora-21a6f3e628cd -o yaml

    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    metadata:
      annotations:
        cdi.kubevirt.io/storage.import.lastUseTime: "2024-10-15T16:26:20.683466671Z"
        cdi.kubevirt.io/storage.import.sourceVolumeMode: Block
      creationTimestamp: "2024-10-14T15:20:38Z"
      finalizers:
      - snapshot.storage.kubernetes.io/volumesnapshot-as-source-protection
      - snapshot.storage.kubernetes.io/volumesnapshot-bound-protection
      generation: 1
      labels:
        app: containerized-data-importer
        app.kubernetes.io/component: storage
        app.kubernetes.io/managed-by: cdi-controller
        app.kubernetes.io/part-of: hyperconverged-cluster
        app.kubernetes.io/version: 4.16.3
        cdi.kubevirt.io: ""
        cdi.kubevirt.io/dataImportCron: fedora-image-cron
      name: fedora-21a6f3e628cd
      namespace: openshift-virtualization-os-images
      resourceVersion: "3475369"
      uid: 713246b2-3920-4a5e-9ab9-387f4c342d2f
    spec:
      source:
        persistentVolumeClaimName: fedora-21a6f3e628cd
      volumeSnapshotClassName: ocs-storagecluster-rbdplugin-snapclass
    status:
      boundVolumeSnapshotContentName: snapcontent-713246b2-3920-4a5e-9ab9-387f4c342d2f
      creationTime: "2024-10-14T15:20:47Z"
      readyToUse: true
      restoreSize: 30Gi

- This snapshot is downloaded/cloned periodically based on this cronjob:

    oc get dataimportcrons.cdi.kubevirt.io fedora-image-cron -n openshift-virtualization-os-images -o yaml

    apiVersion: cdi.kubevirt.io/v1beta1
    kind: DataImportCron
    metadata:
      annotations:
        cdi.kubevirt.io/storage.bind.immediate.requested: "true"
        cdi.kubevirt.io/storage.import.lastCronTime: "2024-10-15T09:20:01Z"
        cdi.kubevirt.io/storage.import.sourceDesiredDigest: sha256:21a6f3e628cde7ec7afc9aedf69423924322225d236e78a1f90cf6f4d980f9b3
        cdi.kubevirt.io/storage.import.storageClass: ocs-storagecluster-ceph-rbd-virtualization
        operator-sdk/primary-resource: openshift-cnv/ssp-kubevirt-hyperconverged
        operator-sdk/primary-resource-type: SSP.ssp.kubevirt.io
      creationTimestamp: "2024-10-14T15:17:44Z"
      generation: 11
      labels:
        app.kubernetes.io/component: templating
        app.kubernetes.io/managed-by: ssp-operator
        app.kubernetes.io/name: data-sources
        app.kubernetes.io/part-of: hyperconverged-cluster
        app.kubernetes.io/version: 4.16.3
        instancetype.kubevirt.io/default-instancetype: u1.medium
        instancetype.kubevirt.io/default-preference: fedora
        kubevirt.io/dynamic-credentials-support: "true"
      name: fedora-image-cron
      namespace: openshift-virtualization-os-images
      resourceVersion: "2903588"
      uid: cbb42f25-ae91-439a-8325-749e939d9548
    spec:
      garbageCollect: Outdated
      managedDataSource: fedora
      schedule: 20 9/12 * * *
      template:
        metadata: {}
        spec:
          source:
            registry:
              pullMethod: node
              url: docker://quay.io/containerdisks/fedora:latest
          storage:
            resources:
              requests:
                storage: 30Gi
        status: {}
    status:
      conditions:
      - lastHeartbeatTime: "2024-10-14T15:20:38Z"
        lastTransitionTime: "2024-10-14T15:20:38Z"
        message: No current import
        reason: NoImport
        status: "False"
        type: Progressing
      - lastHeartbeatTime: "2024-10-14T15:21:06Z"
        lastTransitionTime: "2024-10-14T15:21:06Z"
        message: Latest import is up to date
        reason: UpToDate
        status: "True"
        type: UpToDate
      currentImports:
      - DataVolumeName: fedora-21a6f3e628cd
        Digest: sha256:21a6f3e628cde7ec7afc9aedf69423924322225d236e78a1f90cf6f4d980f9b3
      lastExecutionTimestamp: "2024-10-15T09:20:01Z"
      lastImportTimestamp: "2024-10-14T15:20:38Z"
      lastImportedPVC:
        name: fedora-21a6f3e628cd
        namespace: openshift-virtualization-os-images
      sourceFormat: snapshot

With this schedule (20 9/12 * * *), the image is checked to see if it is up to date at 09:20 and 21:20 every day. If it is not up to date, the OCP cluster will download a new image to replace it.
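The hour field of the schedule, 9/12, can be a little hard to read at first. The sketch below expands a start/step hour field into the hours it covers, assuming the schedule is interpreted the way Kubernetes-style cron parsers usually treat this form (start at the first number, then step until the end of the day). The function name `expand_cron_hours` is made up for this example, and it only handles a numeric start value.

```shell
# Hypothetical helper: expand a cron hour field such as "9/12" into hours.
# Assumes "start/step" means: begin at start, add step until past hour 23.
expand_cron_hours() {
  field="$1"
  case "$field" in
    */*) ;;                          # start/step form, handled below
    *) echo "$field"; return 0 ;;    # plain value: nothing to expand
  esac
  start="${field%%/*}"               # text before the slash
  step="${field##*/}"                # text after the slash
  hours=""
  h="$start"
  while [ "$h" -le 23 ]; do
    hours="${hours:+$hours }$h"      # append with a space separator
    h=$((h + step))
  done
  echo "$hours"
}
```

Calling `expand_cron_hours 9/12` prints `9 21`, so combined with the minute field of 20 the check runs at 09:20 and 21:20. The lastCronTime annotation in the output above (09:20:01Z) lines up with the first of those.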

These CDI objects (1 through 3 in the list) are the heart of what happens from an image-import standpoint before the VM is instantiated.
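One detail that ties these three objects together is the name fedora-21a6f3e628cd. In the output shown here, the suffix matches the first 12 characters of the image digest recorded on the DataImportCron. The sketch below reproduces that pattern. Treat it as an observation from this cluster rather than a documented contract; the function name `import_name_from_digest` is made up for illustration.

```shell
# Hypothetical helper: rebuild the import name seen in this article.
# $1 is the managed DataSource name, $2 is the sha256 image digest.
# Assumes the name is "<datasource>-<first 12 hex chars of the digest>".
import_name_from_digest() {
  source_name="$1"
  digest="${2#sha256:}"              # drop the "sha256:" prefix if present
  echo "${source_name}-$(printf '%s' "$digest" | cut -c1-12)"
}
```

Feeding it `fedora` and the digest from the DataImportCron gives fedora-21a6f3e628cd, which is the name used by the DataSource, the VolumeSnapshot, and the currentImports entry.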

- Next, the virt-launcher pod takes over. It checks whether the disk image is available. If it is not available, it works with the CDI layer to clone the source/boot volume. Next, it injects some of the cloud-init settings and generates domain.xml (for libvirt).

- After all of this, there are two objects. One is the VM object which provides some of the same high-level information that was used prior to instantiating the VM.

    oc get vm fedora-ivory-orca-72 -o yaml

    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      annotations:
        kubemacpool.io/transaction-timestamp: "2024-10-15T17:45:45.228338854Z"
        kubevirt.io/latest-observed-api-version: v1
        kubevirt.io/storage-observed-api-version: v1
      creationTimestamp: "2024-10-15T14:40:02Z"
      finalizers:
      - kubevirt.io/virtualMachineControllerFinalize
      generation: 8
      name: fedora-ivory-orca-72
      namespace: default
      resourceVersion: "3582131"
      uid: 12e9f5c5-d69d-476c-a832-e41c242a7d87
    spec:
      dataVolumeTemplates:
      - metadata:
          creationTimestamp: null
          name: fedora-ivory-orca-72-volume
        spec:
          sourceRef:
            kind: DataSource
            name: fedora
            namespace: openshift-virtualization-os-images
          storage:
            resources: {}
      instancetype:
        kind: virtualmachineclusterinstancetype
        name: u1.medium
        revisionName: fedora-ivory-orca-72-u1.medium-7a23d007-5073-40e2-a2a7-a4b4a0689617-1
      preference:
        kind: virtualmachineclusterpreference
        name: fedora
        revisionName: fedora-ivory-orca-72-fedora-65b8f1c5-7552-48cf-b350-59a4c34ad71e-1
      running: false
      template:
        metadata:
          creationTimestamp: null
          labels:
            network.kubevirt.io/headlessService: headless
        spec:
          architecture: amd64
          domain:
            devices:
              interfaces:
              - macAddress: 02:3b:3e:00:00:01
                masquerade: {}
                name: default
              logSerialConsole: true
            machine:
              type: pc-q35-rhel9.4.0
            resources: {}
          networks:
          - name: default
            pod: {}
          subdomain: headless
          volumes:
          - dataVolume:
              name: fedora-ivory-orca-72-volume
            name: rootdisk
          - cloudInitNoCloud:
              userData: |
                #cloud-config
                chpasswd:
                  expire: false
                password: ei72-tin4-r38z
                user: cloud-user
            name: cloudinitdisk
    status:
      conditions:
      - lastProbeTime: "2024-10-15T17:45:50Z"
        lastTransitionTime: "2024-10-15T17:45:50Z"
        message: VMI does not exist
        reason: VMINotExists
        status: "False"
        type: Ready
      - lastProbeTime: null
        lastTransitionTime: null
        message: All of the VMI's DVs are bound and not running
        reason: AllDVsReady
        status: "True"
        type: DataVolumesReady
      - lastProbeTime: null
        lastTransitionTime: null
        status: "True"
        type: LiveMigratable
      desiredGeneration: 8
      observedGeneration: 8
      printableStatus: Stopped
      runStrategy: Halted
      volumeSnapshotStatuses:
      - enabled: true
        name: rootdisk
      - enabled: false
        name: cloudinitdisk
        reason: Snapshot is not supported for this volumeSource type [cloudinitdisk]

The next object is the VMI (VirtualMachineInstance), which only exists while the VM is running and shows some of the parameters that were applied. (The VM output above was captured while the VM was stopped, which is why it reports "VMI does not exist".)

    oc get vmi fedora-ivory-orca-72 -o yaml

    apiVersion: kubevirt.io/v1
    kind: VirtualMachineInstance
    metadata:
      annotations:
        kubevirt.io/cluster-instancetype-name: u1.medium
        kubevirt.io/cluster-preference-name: fedora
        kubevirt.io/latest-observed-api-version: v1
        kubevirt.io/storage-observed-api-version: v1
        kubevirt.io/vm-generation: "9"
        vm.kubevirt.io/os: linux
      creationTimestamp: "2024-10-15T17:57:03Z"
      finalizers:
      - kubevirt.io/virtualMachineControllerFinalize
      - foregroundDeleteVirtualMachine
      generation: 11
      labels:
        kubevirt.io/nodeName: home2
        network.kubevirt.io/headlessService: headless
      name: fedora-ivory-orca-72
      namespace: default
      ownerReferences:
      - apiVersion: kubevirt.io/v1
        blockOwnerDeletion: true
        controller: true
        kind: VirtualMachine
        name: fedora-ivory-orca-72
        uid: 12e9f5c5-d69d-476c-a832-e41c242a7d87
      resourceVersion: "3597540"
      uid: bdcc3a2e-b3f8-46dd-b7c3-2365e1f13302
    spec:
      architecture: amd64
      domain:
        cpu:
          cores: 1
          maxSockets: 4
          model: host-model
          sockets: 1
          threads: 1
        devices:
          disks:
          - disk:
              bus: virtio
            name: rootdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          interfaces:
          - macAddress: 02:3b:3e:00:00:01
            masquerade: {}
            model: virtio
            name: default
          logSerialConsole: true
          rng: {}
        features:
          acpi:
            enabled: true
          smm:
            enabled: true
        firmware:
          bootloader:
            efi:
              secureBoot: true
          uuid: 8db32afa-0681-5c29-aa5b-a26d9153f488
        machine:
          type: pc-q35-rhel9.4.0
        memory:
          guest: 4Gi
          maxGuest: 16Gi
        resources:
          requests:
            memory: 4Gi
      evictionStrategy: None
      networks:
      - name: default
        pod: {}
      subdomain: headless
      volumes:
      - dataVolume:
          name: fedora-ivory-orca-72-volume
        name: rootdisk
      - cloudInitNoCloud:
          userData: |
            #cloud-config
            chpasswd:
              expire: false
            password: ei72-tin4-r38z
            user: cloud-user
        name: cloudinitdisk
    status:
      activePods:
        1e23d897-8d71-4d54-9810-dd804b7de469: home2
      conditions:
      - lastProbeTime: null
        lastTransitionTime: "2024-10-15T17:57:10Z"
        status: "True"
        type: Ready
      - lastProbeTime: null
        lastTransitionTime: null
        message: All of the VMI's DVs are bound and not running
        reason: AllDVsReady
        status: "True"
        type: DataVolumesReady
      - lastProbeTime: null
        lastTransitionTime: null
        status: "True"
        type: LiveMigratable
      - lastProbeTime: "2024-10-15T17:57:20Z"
        lastTransitionTime: null
        status: "True"
        type: AgentConnected
      currentCPUTopology:
        cores: 1
        sockets: 1
        threads: 1
      guestOSInfo:
        id: fedora
        kernelRelease: 6.8.5-301.fc40.x86_64
        kernelVersion: '#1 SMP PREEMPT_DYNAMIC Thu Apr 11 20:00:10 UTC 2024'
        machine: x86_64
        name: Fedora Linux
        prettyName: Fedora Linux 40 (Cloud Edition)
        version: 40 (Cloud Edition)
        versionId: "40"
      interfaces:
      - infoSource: domain, guest-agent
        interfaceName: eth0
        mac: 02:3b:3e:00:00:01
        name: default
        queueCount: 1
      launcherContainerImageVersion: registry.redhat.io/container-native-virtualization/virt-launcher-rhel9@sha256:444191284ff0adb7e38d4786a037a0c39a340cfea6b3a943951c8a3dc79dacb2
      machine:
        type: pc-q35-rhel9.4.0
      memory:
        guestAtBoot: 4Gi
        guestCurrent: 4Gi
        guestRequested: 4Gi
      migrationMethod: BlockMigration
      migrationTransport: Unix
      nodeName: home2
      phase: Running
      phaseTransitionTimestamps:
      - phase: Pending
        phaseTransitionTimestamp: "2024-10-15T17:57:03Z"
      - phase: Scheduling
        phaseTransitionTimestamp: "2024-10-15T17:57:04Z"
      - phase: Scheduled
        phaseTransitionTimestamp: "2024-10-15T17:57:10Z"
      - phase: Running
        phaseTransitionTimestamp: "2024-10-15T17:57:11Z"
      qosClass: Burstable
      runtimeUser: 107
      selinuxContext: system_u:object_r:container_file_t:s0:c695,c973
      virtualMachineRevisionName: revision-start-vm-12e9f5c5-d69d-476c-a832-e41c242a7d87-9
      volumeStatus:
      - name: cloudinitdisk
        size: 1048576
        target: vdb
      - name: rootdisk
        persistentVolumeClaimInfo:
          accessModes:
          - ReadWriteMany
          capacity:
            storage: 30Gi
          filesystemOverhead: "0"
          requests:
            storage: 30Gi
          volumeMode: Block
        target: vda
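The phaseTransitionTimestamps in the VMI status give a quick way to see how long each startup step took. As a small sketch, the helpers below compute the number of seconds between two of those timestamps. They assume both timestamps are in the same UTC day and use the format shown in the output; the function names `to_seconds` and `phase_duration` are made up for this example.

```shell
# Hypothetical helpers: seconds between two VMI phase timestamps.
# Assumes the "YYYY-MM-DDTHH:MM:SSZ" format and that both fall on the same day.
to_seconds() {
  t="${1#*T}"; t="${t%Z}"            # keep only HH:MM:SS
  h="${t%%:*}"; rest="${t#*:}"
  m="${rest%%:*}"; s="${rest#*:}"
  # Strip one leading zero so values like "08" are not read as octal.
  echo $(( ${h#0} * 3600 + ${m#0} * 60 + ${s#0} ))
}

phase_duration() {
  echo $(( $(to_seconds "$2") - $(to_seconds "$1") ))
}
```

Running `phase_duration 2024-10-15T17:57:03Z 2024-10-15T17:57:11Z` prints 8, meaning this VMI went from Pending to Running in about eight seconds.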

Again, this is a very simplistic view but will give you some familiarity with these objects/CRs to better understand the follow-on articles that will be written on this topic.

I hope you enjoyed this article. Many more to come on Openshift Virtualization topics.